AWS Backup Security Best Practices

AWS offers several cloud-based products for computing, storage, analytics, etc. Two of these products are often used by organizations for backup storage: Amazon S3 for object storage in the cloud and Amazon Elastic Block Store (EBS) for storing EC2 volumes and their backups.

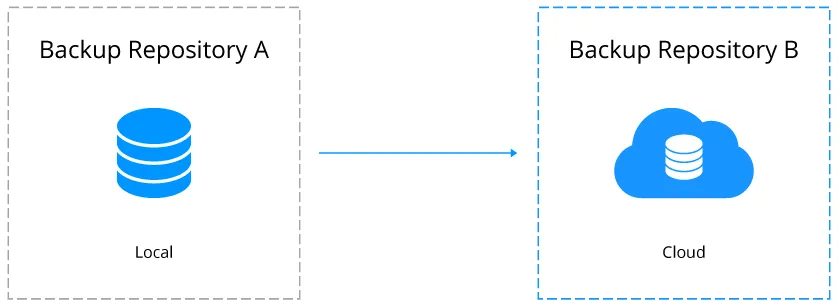

Cloud storage platforms are ideal for implementing the 3-2-1 data protection approach. AWS is also an excellent choice for both storage and compute resources, as it delivers high availability and resilience through redundancy across availability zones and, where you configure it, across geographical regions. With copies of your data replicated to another region, a disaster that strikes one area and compromises a data center there does not take your backups with it.

However, cyber threats evolve rapidly, and the majority of security incidents in the cloud occur through the fault of the customer, not the cloud provider. It is therefore important to understand how to implement AWS security best practices for cloud backups and reduce the risks on your organization’s end. Read this post to learn about the security options for different AWS storage products and how to benefit from them.

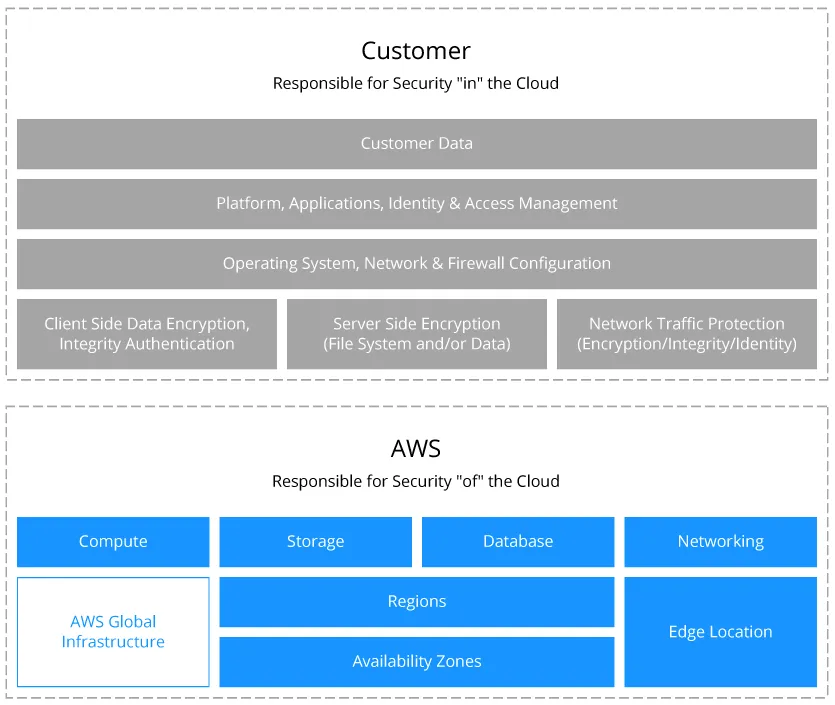

AWS Shared Responsibility Model

Whether you use AWS cloud storage options or migrate your entire infrastructure to Amazon EC2, you should know the AWS shared responsibility model to understand who is responsible for AWS backup security. Some of the responsibility falls on the cloud provider, particularly for the security and availability of the cloud platform. However, protecting your workloads and data against many other threats remains your responsibility as the customer.

As is the case with most cloud providers, responsibility for data security is shared between AWS and the cloud customer. As the cloud provider, Amazon assumes responsibility for the security of the AWS infrastructure. Security of the platform is critical for protecting customers’ critical data and applications. AWS detects instances of fraud and abuse, notifying its customers of the incidents.

Meanwhile, customers are responsible for the security configurations of the products they use in AWS. They must make sure that access to sensitive data from inside or outside the organization is properly restricted and that they apply the recommended data protection policies.

AWS Backup Storage Options

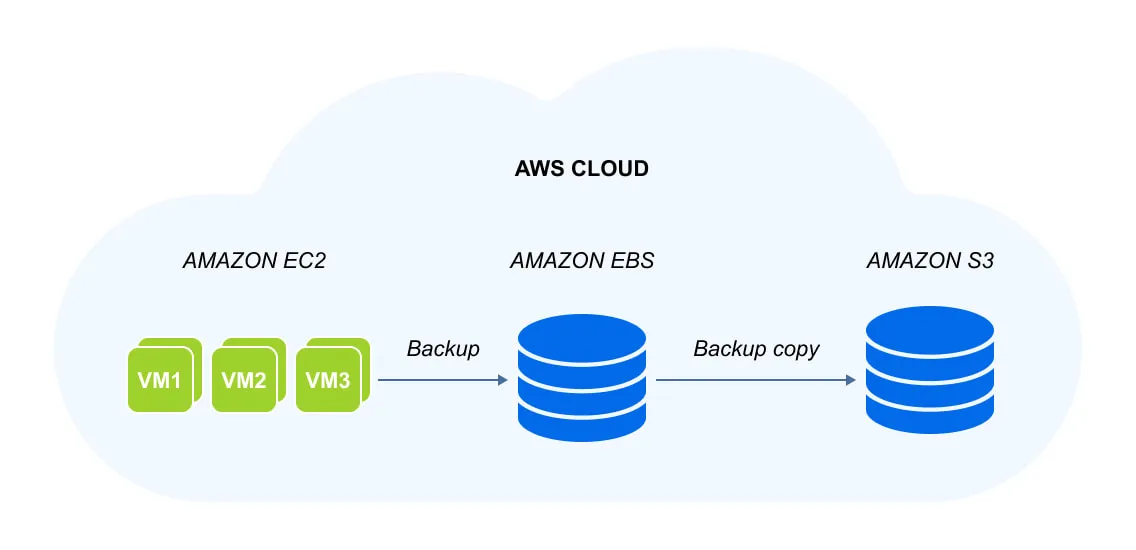

AWS offers two main platforms that are relevant for backups, each suited to different use cases: Amazon S3 and Amazon EC2. The Amazon EC2 compute product relies on the Elastic Block Store (EBS) storage platform.

- Amazon Simple Storage Service or Amazon S3 is an object storage platform designed to store data from any source – for instance, web or mobile applications, websites, or data from IoT (Internet of things) sensors. It is also a very popular option as a target for backups and backup copies of virtual machines and Amazon EC2 instances.

- Amazon Elastic Block Store (EBS) is designed by Amazon to provide persistent block storage volumes for workloads in Amazon Elastic Compute Cloud (Amazon EC2).

The two backup storage options can often be combined in a backup strategy for Amazon EC2 instances: You can send backups of instances to Amazon EBS and create a backup copy to be stored in Amazon S3 for added reliability.

AWS Cloud Security Best Practices for Backups

While there are a wide variety of AWS services, the primary ones are Elastic Compute Cloud (EC2), Amazon S3, and Amazon Virtual Private Cloud (VPC), an isolated private cloud hosted within a public cloud.

As mentioned, the Shared Responsibility Model assigns the customer full responsibility for configuring the security controls. To ensure your data in AWS stays intact and protected, follow the best practices in 5 key areas:

- Security Monitoring

- Account Security

- Security Configuration

- Inactive Entities Management

- Access Restrictions

Consider also implementing AWS security best practices for each particular Amazon service:

- Amazon S3

- Amazon EC2

- Amazon VPC

Security Monitoring

Security monitoring is one of the main AWS security best practices because it allows you to detect suspicious events in due time and proactively fix issues related to data protection.

- Enabling CloudTrail. The CloudTrail service logs API activity across AWS services, including those that are not region-specific, such as IAM and CloudFront.

- Using CloudTrail log file validation. This feature serves as an additional layer of protection for the integrity of the log files. With log file validation turned on, any changes made to the log file after delivery to the Amazon S3 bucket are traceable.

- Enabling CloudTrail multi-region logging. CloudTrail provides AWS API call history, which allows security analysts to track changes in the AWS environment, audit compliance, investigate incidents, and make sure that security best practices in AWS are followed. By enabling CloudTrail in all regions, organizations can detect unexpected or suspicious activity in otherwise unused regions.

- Integrating CloudTrail service with CloudWatch. The CloudWatch component offers continuous monitoring of log files from EC2 instances, CloudTrail, and other sources. CloudWatch can also collect and track metrics to help you detect threats quickly. This integration facilitates real-time and historic activity logging in relation to the user, API, resource, and IP address. You can set up alarms and notifications for unusual or suspicious account activity.

- Enabling access logging for CloudTrail S3 buckets. CloudTrail S3 buckets contain the log data captured by CloudTrail, which is used for monitoring activity and investigating incidents. Keep access logging enabled for these buckets so that you can track access requests and quickly detect unauthorized access attempts before attackers penetrate deeper.

- Enabling access logging for Elastic Load Balancer (ELB). Enabling ELB access logging allows the ELB to record and save information about each TCP or HTTP request. This data can be extremely useful for security and troubleshooting professionals. For instance, your ELB logging data can be useful when analyzing traffic patterns that may be indicative of certain types of attacks.

- Enabling Redshift audit logging. Amazon Redshift is the AWS data warehouse service, and its audit logging feature records details about user activities such as queries and connections made in the database. By enabling Redshift audit logging, you can perform audits and support post-incident forensic investigations for a given database.

- Enabling Virtual Private Cloud (VPC) flow logging. VPC flow logging is a network monitoring feature that provides visibility into VPC network traffic. It can be used for detecting unusual or suspicious traffic, giving security insights, and alerting you to anomalous activities. Enabling VPC flow logging allows you to identify security and access issues such as unusual volumes of data transfer, rejected connection requests, and overly permissive security groups or network Access Control Lists (ACLs).
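Several of the CloudTrail recommendations above can be checked automatically. The following is a minimal sketch, not a complete audit tool: it assumes you already have a trail description as a dictionary whose keys mirror the CloudTrail `DescribeTrails` response (`IsMultiRegionTrail`, `LogFileValidationEnabled`, `CloudWatchLogsLogGroupArn`). The `audit_trail` helper and the sample record are hypothetical and exist only for illustration.

```python
from typing import Dict, List


def audit_trail(trail: Dict) -> List[str]:
    """Return a list of findings for one CloudTrail trail description."""
    findings = []
    if not trail.get("IsMultiRegionTrail", False):
        findings.append("multi-region logging is disabled")
    if not trail.get("LogFileValidationEnabled", False):
        findings.append("log file validation is disabled")
    if not trail.get("CloudWatchLogsLogGroupArn"):
        findings.append("trail is not integrated with CloudWatch Logs")
    return findings


# Hypothetical trail record, shaped like one entry of a DescribeTrails response.
example_trail = {
    "Name": "example-trail",
    "IsMultiRegionTrail": True,
    "LogFileValidationEnabled": False,
}

for finding in audit_trail(example_trail):
    print(f"{example_trail['Name']}: {finding}")
```

In practice, you would feed this function the trail descriptions returned by your AWS SDK or CLI rather than a hand-written dictionary.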

AWS Account Security Best Practices

It is important to protect user accounts to make sure that they cannot be easily compromised. To do this, follow the AWS account security best practices below:

- Multifactor authentication (MFA) for deleting CloudTrail S3 buckets. If your AWS account is compromised, the first step an attacker would likely take is deleting CloudTrail logs to cover their intrusion and delay detection. Setting up MFA for deleting S3 buckets with CloudTrail logs makes log deletion much harder for a hacker, thus reducing their chances of going unnoticed.

- MFA for the root account. The first user account created when signing up for AWS is called the root account. The root account is the most privileged user type, with access to every AWS resource. This is why you should enable MFA for the root account as soon as possible. One of the AWS security best practices for root account MFA is avoiding attaching the credentials to a user’s personal device. For this purpose, you should have a dedicated mobile device to be stored in a remote location. This introduces an additional layer of protection and ensures that the root account is always accessible, regardless of whose personal devices are lost or broken.

- MFA for IAM users. If your account is compromised, MFA becomes the last line of defense. All users with a console password for the Identity and Access Management (IAM) service should be required to go through MFA.

- Multi-mode access for IAM users. Enabling multi-mode access for IAM users allows you to split users into two groups: application users with API access and administrators with console access. This reduces the risk of unauthorized access if IAM user credentials (access keys or passwords) are compromised.

- IAM policies assigned to groups or roles. Do not assign policies and permissions to users directly. Instead, provision users’ permissions at the group and role level. This approach makes managing permissions simpler and more convenient. You also reduce the risk that an individual user receives excessive permissions or privileges by accident.

- Rotation of IAM access keys on a regular basis. The more often you rotate access key pairs, the less likely your data can be improperly accessed with a lost or stolen key.

- Strict password policy. Unsurprisingly, users tend to create overly simple passwords. This is because they want something that is easy for them to remember. However, such passwords are often also easy for someone to guess. Implementing and maintaining a strict password policy is another AWS security best practice for protecting accounts from brute force login attempts. The policy details may differ, but you should require passwords to have at least one upper-case letter, one lower-case letter, one number, one symbol, and a minimum length of 14 characters.
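To make the password requirements above concrete, here is a small sketch that checks a candidate password against them. It is only an illustration of the rules listed in this section. The `password_violations` function is a hypothetical helper, and treating the characters in Python's `string.punctuation` as "symbols" is an assumption. An IAM account password policy enforces equivalent rules on the AWS side.

```python
import string

MIN_LENGTH = 14  # minimum length recommended in this section


def password_violations(password: str) -> list:
    """Return the policy rules that the given password breaks."""
    violations = []
    if len(password) < MIN_LENGTH:
        violations.append(f"shorter than {MIN_LENGTH} characters")
    if not any(c.isupper() for c in password):
        violations.append("no upper-case letter")
    if not any(c.islower() for c in password):
        violations.append("no lower-case letter")
    if not any(c.isdigit() for c in password):
        violations.append("no number")
    # Assumption: "symbol" means any ASCII punctuation character.
    if not any(c in string.punctuation for c in password):
        violations.append("no symbol")
    return violations


print(password_violations("password"))
print(password_violations("Tr1cky-Passphrase!"))
```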

Configuring Security Best Practices in AWS

Configure security options to protect your data in AWS, including backups.

- Restricting access to CloudTrail S3 buckets. Do not grant access to CloudTrail logs to every user or administrator account, because any account is at risk of being exposed to phishing attacks. Limit access only to those who need the logs to do their work. Thus, you reduce the probability of unnecessary access.

- Encrypting CloudTrail log files. Encrypt CloudTrail log files at rest. Decrypting them then has two requirements. First, decryption permission must be granted by the policy of the AWS KMS key (formerly called a customer master key, or CMK). Second, permission to access the Amazon S3 buckets must be granted. Only those users with related job duties should receive both permissions.

- Encrypting EBS volumes. Ensuring that EBS volumes are encrypted provides an additional layer of protection. Note that encryption is set when an EBS volume is created and cannot be turned on for an existing unencrypted volume. Thus, if there are any unencrypted volumes, you must create new encrypted volumes (for example, from an encrypted copy of a snapshot) and transfer your data from the unencrypted ones.

- Reducing ranges of open ports for EC2 security groups. Large ranges of open ports expose more vulnerabilities to attackers using port scanning.

- Configuring EC2 Security Groups to restrict access. Granting too many permissions to access EC2 instances should be avoided. Never allow large IP ranges to access EC2 instances. Instead, be specific and include only exact IP addresses in your access list. Follow AWS security group best practices.

- Avoiding the use of root user accounts. When you sign up for an AWS account, the email and password you use automatically become the root user account. The root user is the most privileged user in the system, enjoying access to all services and resources in your AWS account without exception. The best practice is to use this account only once when creating the first IAM user. Thereafter, you should keep the root user credentials in a secure place, locked away from access by everyone.

- Using secure SSL/TLS versions and ciphers. When making connections between the client and the Elastic Load Balancing (ELB) system, avoid using outdated SSL/TLS versions or deprecated ciphers. These can create an insecure connection between the client and the load balancer.

- Encryption of Amazon Relational Database Service (RDS). Encrypting the Amazon RDS creates an extra layer of protection. It is recommended that you use AWS RDS security best practices.

- Avoiding access key use with root accounts. Create role-based accounts with limited permissions and access keys. Never use access keys with the root account as they are a sure way to open the account to compromise.

- Rotating SSH keys on a regular basis. Periodically rotate SSH keys. This AWS security best practice reduces the risks associated with employees sharing SSH keys, whether in error or through negligence.

- Minimizing the number of discrete security groups. Organizations should keep the number of discrete security groups as low as possible. This reduces the risk of misconfiguration, which can lead to account compromise and is one of the AWS security group best practices.
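The advice on port ranges and IP ranges for EC2 security groups lends itself to a simple automated check. The sketch below assumes an inbound rule is available as a dictionary shaped like an `IpPermissions` entry from the EC2 `DescribeSecurityGroups` response (`FromPort`, `ToPort`, `IpRanges` with `CidrIp`). The thresholds `MAX_PORT_SPAN` and `MIN_PREFIX_LEN` and the `risky_ingress` helper are illustrative assumptions, not AWS recommendations.

```python
import ipaddress

MAX_PORT_SPAN = 10   # assumed threshold: more ports than this counts as a wide range
MIN_PREFIX_LEN = 24  # assumed threshold: IPv4 sources broader than /24 are flagged


def risky_ingress(permission: dict) -> list:
    """Return findings for one inbound security group rule."""
    findings = []
    from_port = permission.get("FromPort")
    to_port = permission.get("ToPort")
    if from_port is None or to_port is None:
        # Rules that allow all protocols carry no port bounds.
        findings.append("all ports are open")
    elif to_port - from_port + 1 > MAX_PORT_SPAN:
        findings.append(f"wide port range {from_port}-{to_port}")
    for ip_range in permission.get("IpRanges", []):
        network = ipaddress.ip_network(ip_range["CidrIp"], strict=False)
        if network.prefixlen < MIN_PREFIX_LEN:
            findings.append(f"broad source range {network}")
    return findings


# Hypothetical rule: every TCP port open to the whole internet.
rule = {
    "IpProtocol": "tcp",
    "FromPort": 0,
    "ToPort": 65535,
    "IpRanges": [{"CidrIp": "0.0.0.0/0"}],
}
print(risky_ingress(rule))
```

A rule that allows a single port from a single address, such as SSH from `203.0.113.10/32`, produces no findings with these thresholds.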

Inactive Entities Management

Managing inactive entities and deleting them is important as these types of entities can be used by third parties for unauthorized access.

- Minimizing the number of IAM groups. Deleting unused or stale IAM groups reduces the risk of accidentally provisioning new entities with older security configurations.

- Terminating unused access keys. AWS security best practices dictate that access keys that remain unused for over 30 days should be terminated. Keeping unused access keys around for longer inevitably increases the risk of a compromised account or insider threat.

- Disabling access for inactive IAM users. Similarly, you should disable accounts of IAM users who have not logged in for over 90 days. This reduces the likelihood of an abandoned or unused account being compromised.

- Deleting unused SSH Public Keys. Delete unused SSH Public Keys to decrease the risk of unauthorized access using SSH from unrestricted locations.
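The 30-day and 90-day thresholds above translate directly into a date comparison. The following sketch assumes you have exported, by whatever means you prefer, the time each access key was last used and the time each IAM user last logged in. The `inventory` records, the user names, and the `is_stale` helper are hypothetical. Treating a credential that was never used as stale is a simplification that would also flag newly created keys.

```python
from datetime import datetime, timedelta, timezone

KEY_MAX_IDLE = timedelta(days=30)   # unused access keys
USER_MAX_IDLE = timedelta(days=90)  # inactive IAM users


def is_stale(last_used, max_idle, now):
    """True if the credential was never used or has been idle longer than max_idle."""
    return last_used is None or now - last_used > max_idle


# Fixed reference time so the example is reproducible.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)

# Hypothetical inventory records for illustration only.
inventory = [
    {
        "user": "backup-operator",
        "key_last_used": datetime(2024, 5, 15, tzinfo=timezone.utc),
        "last_login": datetime(2024, 5, 30, tzinfo=timezone.utc),
    },
    {
        "user": "former-contractor",
        "key_last_used": datetime(2024, 4, 1, tzinfo=timezone.utc),
        "last_login": datetime(2024, 1, 10, tzinfo=timezone.utc),
    },
]

for entry in inventory:
    if is_stale(entry["key_last_used"], KEY_MAX_IDLE, now):
        print(f"{entry['user']}: terminate unused access key")
    if is_stale(entry["last_login"], USER_MAX_IDLE, now):
        print(f"{entry['user']}: disable inactive user")
```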

Access Restrictions

Restricting access is a category of AWS security best practices that allows you to minimize the probability of compromising the data stored in AWS and improves the security level.

- Restricting access to Amazon Machine Images (AMIs). Free access to your Amazon Machine Images (AMIs) makes them available in the Community AMIs. There, any member of the Community with an AWS account can use them to launch EC2 instances. AMIs often contain snapshots of organization-specific applications with configuration and app data. Carefully restricting access to AMIs is highly recommended.

- Restricting inbound access on uncommon ports. Restrict access on uncommon ports because they can become potential weak points for malicious activity (for example, brute-force attacks, hacking, DDoS attacks, etc.).

- Restricting access to EC2 security groups. Access to EC2 security groups should be restricted. This further helps prevent exposure to malicious activity.

- Restricting access to RDS instances. With unrestricted RDS instance access, any entity on the internet can establish a connection to your database. This exposes an organization to malicious activity such as SQL injections, brute-force attacks, or hacking.

- Restricting outbound access. Unrestricted outbound access from ports can expose an organization to cyber threats. You should allow access for specified entities only – for example, specific ports or specific destinations.

- Restricting access to well-known protocol ports. Access to well-known ports must be restricted. If you leave them uncontrolled, you open your organization up to unauthorized data access – for example, CIFS through port 445, FTP through port 20/21, MySQL through port 3306, etc.
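As an illustration of the last point, the sketch below reports which of the well-known ports named above are reachable from anywhere on the internet. It assumes an inbound rule shaped like an `IpPermissions` entry from the EC2 `DescribeSecurityGroups` response. The `SENSITIVE_PORTS` table contains only the examples from this section, and the `exposed_sensitive_ports` helper is hypothetical. Extend the table to match the services you actually run.

```python
# Ports taken from the examples in this section; extend as needed.
SENSITIVE_PORTS = {20: "FTP data", 21: "FTP control", 445: "CIFS", 3306: "MySQL"}
OPEN_TO_WORLD = {"0.0.0.0/0", "::/0"}


def exposed_sensitive_ports(permission: dict) -> list:
    """List sensitive ports that an inbound rule opens to the whole internet."""
    sources = {r["CidrIp"] for r in permission.get("IpRanges", [])}
    sources |= {r["CidrIpv6"] for r in permission.get("Ipv6Ranges", [])}
    if not sources & OPEN_TO_WORLD:
        return []
    # Rules without port bounds cover every port.
    from_port = permission.get("FromPort", 0)
    to_port = permission.get("ToPort", 65535)
    return [
        f"{port} ({name})"
        for port, name in sorted(SENSITIVE_PORTS.items())
        if from_port <= port <= to_port
    ]


# Hypothetical rule that exposes MySQL to every IPv4 address.
mysql_rule = {
    "IpProtocol": "tcp",
    "FromPort": 3306,
    "ToPort": 3306,
    "IpRanges": [{"CidrIp": "0.0.0.0/0"}],
}
print(exposed_sensitive_ports(mysql_rule))
```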

AWS S3 Security Best Practices

Amazon S3 is one of the most common cloud storage types for storing backups, so you should understand how it works and take into account AWS S3 security best practices.

- Enabling versioning. Versioning is used to keep multiple versions of an object in an S3 bucket after writing changes to the object. If undesired changes are made with objects in a bucket, you can recover any previous object version.

- Using immutable (WORM) storage. Amazon S3 supports the write-once-read-many (WORM) model for data in S3 buckets through S3 Object Lock. This approach protects data from accidental deletion by users and from intentional deletion or modification by ransomware or other malware. Storing backups in immutable storage significantly improves AWS backup security.

- Blocking public Amazon S3 buckets. Block public access to S3 buckets to avoid unauthorized access to the data, including backups you store in AWS S3. You can configure this option at the account level and for individual buckets.
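The three S3 recommendations above map onto three bucket-level settings. As a rough sketch, the function below assembles the corresponding configuration structures as plain dictionaries, using the field names from the S3 API (`VersioningConfiguration`, `PublicAccessBlockConfiguration`, `ObjectLockConfiguration`). The `backup_bucket_settings` helper, the 30-day retention period, and the choice of `COMPLIANCE` mode are assumptions for illustration. Pick the retention mode and period that match your own policy.

```python
import json


def backup_bucket_settings(retention_days: int) -> dict:
    """Build S3 configuration structures for a hardened backup bucket."""
    if retention_days < 1:
        raise ValueError("retention_days must be at least 1")
    return {
        # Keep previous object versions so undesired changes can be rolled back.
        "VersioningConfiguration": {"Status": "Enabled"},
        # Block every form of public access to the bucket.
        "PublicAccessBlockConfiguration": {
            "BlockPublicAcls": True,
            "IgnorePublicAcls": True,
            "BlockPublicPolicy": True,
            "RestrictPublicBuckets": True,
        },
        # WORM protection: new objects cannot be deleted during retention.
        "ObjectLockConfiguration": {
            "ObjectLockEnabled": "Enabled",
            "Rule": {
                "DefaultRetention": {"Mode": "COMPLIANCE", "Days": retention_days}
            },
        },
    }


settings = backup_bucket_settings(30)  # 30 days is an assumed example value
print(json.dumps(settings, indent=2))
```

Each structure would then be passed to the matching S3 API operation for your bucket, for example through an AWS SDK.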

AWS EC2 Security Best Practices

Amazon EC2 instances with EBS volumes are another way to store backups in the Amazon cloud. Consider these AWS EC2 security best practices to protect the backups you store there.

- Protecting access key pairs. Protect the key pairs (public and private keys that serve as credentials in AWS EC2) generated to access Amazon EC2 instances. Anyone who has the private key and knows the instance’s address can access the instance and the data contained there. Also note that you can download the private key only once, when the key pair is created, and AWS does not keep a copy of it. Once downloaded, this key should be stored in a safe place.

- Installing updates. Install security updates and update drivers in a guest operating system such as Windows running on an Amazon EC2 instance.

- Using separate EBS volumes. An EBS volume is a virtual disk for an EC2 instance in AWS. Use separate EBS volumes for running an operating system and storing backups. Ensure that your EBS volume with backup data persists after EC2 instance termination.

- Configuring temporary access. Don’t place security keys in EC2 instances or AMIs for temporary access. If you need to provide temporary access, use temporary access credentials that are valid for a short time. Use instance roles.
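The recommendation to use separate EBS volumes can be expressed in the block device mappings supplied when an instance is launched. The sketch below builds such a mapping as plain dictionaries, using the field names from the EC2 API (`DeviceName`, `Ebs`, `VolumeSize`, `Encrypted`, `DeleteOnTermination`). The `block_device_mappings` helper, the device names, and the volume sizes are assumptions. Actual device names depend on the AMI and instance type.

```python
def block_device_mappings(os_size_gib: int, backup_size_gib: int) -> list:
    """Describe one OS volume and one separate, persistent backup volume."""
    return [
        {
            # Assumed root device name; check the AMI for the real one.
            "DeviceName": "/dev/xvda",
            "Ebs": {
                "VolumeSize": os_size_gib,
                "Encrypted": True,
                "DeleteOnTermination": True,  # OS disk can go with the instance
            },
        },
        {
            # Assumed device name for the additional backup volume.
            "DeviceName": "/dev/sdf",
            "Ebs": {
                "VolumeSize": backup_size_gib,
                "Encrypted": True,
                "DeleteOnTermination": False,  # backup data outlives the instance
            },
        },
    ]


mappings = block_device_mappings(os_size_gib=30, backup_size_gib=500)
for mapping in mappings:
    print(mapping["DeviceName"], mapping["Ebs"])
```

Setting `DeleteOnTermination` to `False` on the backup volume is what keeps the backup data available after the instance is terminated.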

AWS VPC Security Best Practices

Amazon Virtual Private Cloud (VPC) is a cloud environment that you can isolate with a specified network configuration. This logically isolated environment (non-public cloud) in AWS can be used to store backups with a higher security level.

To protect backups stored in VPC, read the AWS VPC security best practices listed below:

- Use multiple availability zones for high availability when adding subnets to VPC.

- Use network access control lists (ACLs) for controlling access to subnets.

- Use security groups to control traffic to EC2 instances in subnets.

- Use VPC flow logs to view events generated in VPC.

- Separate your VPC environments such as Dev, Test, Backup, etc.
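To illustrate the first recommendation, the following sketch carves a VPC address range into equally sized subnets and spreads them across availability zones in round-robin order. It uses only Python's standard `ipaddress` module and does not call AWS. The `plan_subnets` helper, the `10.0.0.0/16` range, and the zone names are example values chosen for illustration.

```python
import ipaddress
from itertools import cycle


def plan_subnets(vpc_cidr: str, new_prefix: int, zones: list, count: int) -> list:
    """Pair the first `count` subnets of a VPC range with zones, round-robin."""
    network = ipaddress.ip_network(vpc_cidr)
    subnets = network.subnets(new_prefix=new_prefix)
    # zip stops as soon as range(count) is exhausted.
    return [
        (str(subnet), zone)
        for subnet, zone, _ in zip(subnets, cycle(zones), range(count))
    ]


# Example values only: a /16 VPC split into /24 subnets over two zones.
plan = plan_subnets("10.0.0.0/16", 24, ["us-east-1a", "us-east-1b"], 4)
for cidr, zone in plan:
    print(f"{cidr} -> {zone}")
```

Alternating zones this way means that losing a single availability zone leaves roughly half of the subnets available.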

Other Best Practices for Cloud Data Security in AWS

There are other AWS security best practices that can be implemented for multiple categories at once:

- Using a unified security approach. Use a unified approach to security in the AWS cloud and on local servers. Different policies for securing AWS and on-premises environments can cause a security gap in one of them. As a result, both environments become vulnerable as they are connected to each other over the network.

- Automating backup jobs. Use dedicated data protection software to back up data to AWS automatically. For the best AWS backup security, schedule backups and backup copies to run regularly to Amazon S3 buckets.

NAKIVO Backup & Replication is the universal solution that supports Amazon EC2 backup/replication and backup to Amazon S3:

- Incremental, consistent backups of Amazon EC2 instances

- Multiple automation features with policy-based backup/replication, advanced scheduling, and backup chaining to create automated backup copies in Amazon S3 from successful primary backups

- Integrated with S3 Object Lock to create immutable, ransomware-resilient backups in Amazon S3 buckets