The VMware vs KVM Hypervisors Comparison

Virtualization is widely used nowadays for the advantages it delivers: effective resource usage, scalability, and convenience. Hypervisors allow you to run multiple isolated environments called virtual machines (VMs) on one physical machine. With so many hypervisors now available, choosing the right one is key for successful deployment.

VMware and KVM hypervisors are both powerful solutions with interesting features. Not all ESXi alternatives can provide the same wide range of features as VMware’s product. In this blog post, we compare KVM vs VMware to help you understand the difference between these hypervisors and the advantages of each virtualization platform with a focus on VMware vSphere with the ESXi hypervisor and KVM for enterprise use.

Important: This post covers the feature sets of KVM on kernel version 5.4 with QEMU 6.1.0 and VMware ESXi version 7.0 and may become outdated with subsequent software updates.

What Is KVM

KVM, which stands for Kernel-based Virtual Machine, is an open-source hypervisor. The KVM hypervisor is used by large organizations including Amazon and Oracle (in the Oracle Cloud Infrastructure). KVM was initially developed by Qumranet, a company that Red Hat acquired in 2008. Oracle also distributes KVM. KVM provides full virtualization capabilities: each VM emulates a complete physical machine with its own virtual processor and virtual devices.

Notes:

- Don’t confuse the KVM hypervisor with the KVM (the acronym for keyboard, video, and mouse) switch used for connecting to multiple computers by using one monitor, one keyboard, and one mouse. A KVM splitter/multiplexer is a physical device that is not related to virtualization.

- The vmware-kvm.exe file in the directory of VMware Workstation installed on Windows is not related to the KVM hypervisor. This vmware-kvm.exe application works as a virtual KVM switch for connecting to the interface of guest operating systems (OSs) installed on VMware VMs.

What Is VMware

VMware is the name of the company behind the ESXi hypervisor, which is offered as part of the VMware vSphere virtualization platform, and hosted hypervisors such as VMware Workstation, Player, and Fusion. VMware hypervisors are proprietary, and many of their virtualization features are considered the industry standard for heavily loaded production environments.

Hypervisor Types

There are two types of hypervisors:

- Type 1 hypervisors are installed directly on hardware and are also called bare-metal hypervisors.

- Type 2 hypervisors are installed on the underlying operating system.

Type 1 hypervisors provide a higher level of performance because there is no underlying host OS between the hypervisor and the hardware to add overhead.

Full virtualization vs. hardware-assisted virtualization

Full virtualization or software-assisted full virtualization is a technique in which a hypervisor completely emulates all underlying hardware (virtual devices) for a VM without using central processing unit (CPU) virtualization features. Binary translation and direct execution are used. Software-assisted virtualization is slower, but it can work on computers whose processors don’t support hardware-assisted virtualization. VMware Workstation 7 and earlier versions running 32-bit guests are examples of this.

Hardware-assisted virtualization or native virtualization is a full virtualization technology that relies on CPU features such as Intel VT-x and AMD-V. Direct execution and binary translation techniques are used. KVM, VMware ESXi, VMware Workstation, VMware Player, and VMware Fusion require Intel VT-x or AMD-V features enabled in UEFI/BIOS on a physical machine to use this virtualization technology. Hardware-assisted virtualization ensures that virtual machines have high performance because “part” of the physical CPU is mapped directly to the virtual CPU (vCPU), and there is no overhead to translate instructions from a vCPU to CPU.
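Before choosing a hypervisor, it can help to confirm that the processor advertises one of these extensions. The following is a minimal sketch, assuming a Linux host where /proc/cpuinfo lists CPU flags (`vmx` for Intel VT-x, `svm` for AMD-V). The function name `virt_extension` is made up for this illustration. The flag shows what the CPU advertises; whether the feature is switched on in UEFI/BIOS may need a separate check (for example, with the kvm-ok tool from the cpu-checker package on Ubuntu).

```shell
# Report which hardware virtualization extension a cpuinfo-style file advertises.
# $1: file to inspect (defaults to /proc/cpuinfo, which exists on Linux hosts).
virt_extension() {
    cpuinfo="${1:-/proc/cpuinfo}"
    # Match the flag as a whole word so that a longer flag name cannot match by accident.
    if grep -Eq '(^|[[:space:]])vmx([[:space:]]|$)' "$cpuinfo"; then
        echo "Intel VT-x"
    elif grep -Eq '(^|[[:space:]])svm([[:space:]]|$)' "$cpuinfo"; then
        echo "AMD-V"
    else
        echo "none"
        return 1
    fi
}
```

Calling `virt_extension` with no argument inspects the local host.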

VMware hypervisors

VMware ESXi is a type 1 hypervisor and is installed on physical hardware (on dedicated servers) to act as an operating system. VMware ESXi is the main component of the VMware vSphere virtual environment. It has its own kernel that interacts with a physical processor and other devices.

VMware Workstation, VMware Player, and VMware Fusion are type 2 hypervisors and are installed on the underlying operating system (OS) for individual use. VMware Workstation and Player are installed on Linux and Windows while VMware Fusion is installed on macOS.

KVM hypervisors

KVM is installed on Linux as a kernel module, but is still a type 1 hypervisor. KVM has everything Linux has because KVM is a part of Linux.

KVM consists of loadable kernel modules such as the kvm.ko module, which provides the core virtualization infrastructure, and the processor-specific kvm-intel.ko or kvm-amd.ko modules, which are loaded depending on the processor used (Intel or AMD). The first version of the KVM hypervisor was available for the Linux kernel 2.6.20.
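To see which of these modules are loaded on a host, you can read the kernel's module list. This is a minimal sketch, assuming a Linux host that exposes /proc/modules; the function name `kvm_modules` is hypothetical. Running `lsmod | grep kvm` interactively gives similar information.

```shell
# List KVM-related kernel modules from a /proc/modules-style file.
# $1: file to inspect (defaults to /proc/modules on Linux).
kvm_modules() {
    modules="${1:-/proc/modules}"
    # The first column is the module name. Loaded names use underscores,
    # so kvm-intel.ko shows up as kvm_intel.
    awk '$1 ~ /^kvm/ { print $1 }' "$modules"
}
```

On an Intel host with KVM available, the output would typically include kvm_intel and kvm.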

KVM works in conjunction with QEMU for I/O emulation. Running as a kernel module lets the KVM hypervisor use the Linux memory (RAM) manager, CPU scheduler, and other kernel facilities, which are also available for guests (VMs). However, this is not enough on its own, because a guest operating system on a VM needs emulated input/output (I/O) devices (disks, network, video, USB, PCI ports, etc.), and QEMU is used for that.

Note: QEMU (Quick Emulator) is open-source software for hardware virtualization (don’t confuse it with hardware-assisted virtualization) that emulates virtual devices such as a disk controller, network adapter, PCI and USB devices, etc. for VMs. Dynamic binary translation can also be used to emulate CPUs for VMs. QEMU can act as a type 2 hypervisor on workstation computers running Linux even on old processors (which don’t support Intel VT-x or AMD-V) due to support for software-assisted full virtualization and the use of binary translation. QEMU doesn’t support native (hardware-assisted) virtualization itself.

Complexity of Deployment

KVM can be installed on almost any computer running Linux with a processor that supports hardware-assisted virtualization features. KVM hypervisor installation includes installing the required packages, KVM (qemu-kvm), and, optionally, a management tool. The packages are available in Linux software repositories.

For example, you can install KVM on Ubuntu with this command:

sudo apt -y install bridge-utils cpu-checker libvirt-clients libvirt-daemon qemu qemu-kvm

libvirt is a set of libraries for virtualization management.

virt-install is a command line tool for creating KVM VMs in the Linux console (CLI).

Administration in KVM is more complicated than it is with VMware vSphere. You may need to install and configure additional components for KVM manually (for example, a virtual switch). The latest hardware updates are quickly adopted in a Linux kernel by developers because hardware vendors contribute to kernel development.

VMware ESXi is installed from a CD or USB flash drive after writing the ISO image to the medium. A user-friendly installation wizard is provided to complete the ESXi installation. Deploying VMware vCenter can be a little tricky because you need to configure DNS first. New vCenter versions are provided as vCenter Server Appliance, which is a virtual machine image ready for deployment on ESXi with a list of customizable parameters. Installing vCenter on Windows is not supported in vSphere 7.0 and higher.

Unlike KVM, VMware ESXi has a hardware compatibility list (HCL). This means that certain hardware may not be supported for ESXi installation. In addition, buying hardware recommended by VMware can raise hardware prices, thus increasing costs for VMware vSphere deployment.

Management Interface

The convenience of using a hypervisor depends on the user interface provided. Both KVM and VMware vSphere virtualization solutions provide a command line interface (CLI) and a graphical user interface (GUI). Let’s look at the VMware vs KVM options in detail for each platform.

KVM

Virsh is the command line utility available by default for KVM management. Virsh uses the libvirt API. You can manually install additional tools such as the virt-manager package.

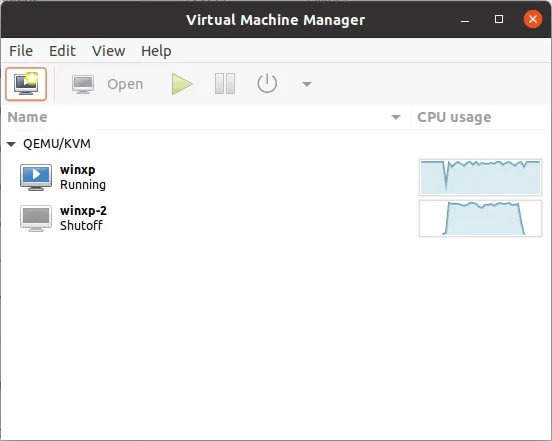

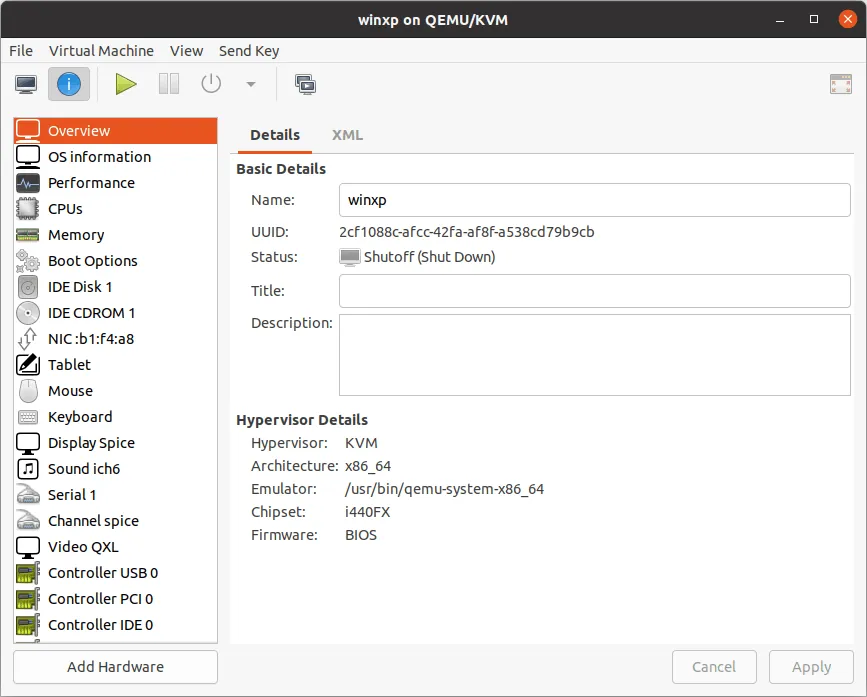

Virtual Machine Manager (virt-manager) is a desktop (graphical) user interface to manage KVM VMs that uses libvirt. You can connect to multiple KVM servers in Virtual Machine Manager to manage them.

You can select VMs, open VM screens, edit VM settings, clone VMs, and migrate VMs in Virtual Machine Manager.

Xming and PuTTY with X11 forwarding enabled are used for connecting to virt-manager from Windows machines remotely. There is the option to use VNC for connecting to KVM virtual machines. You can connect to a Linux machine running KVM with an SSH client and execute commands to manage KVM and related components.

VMware vSphere

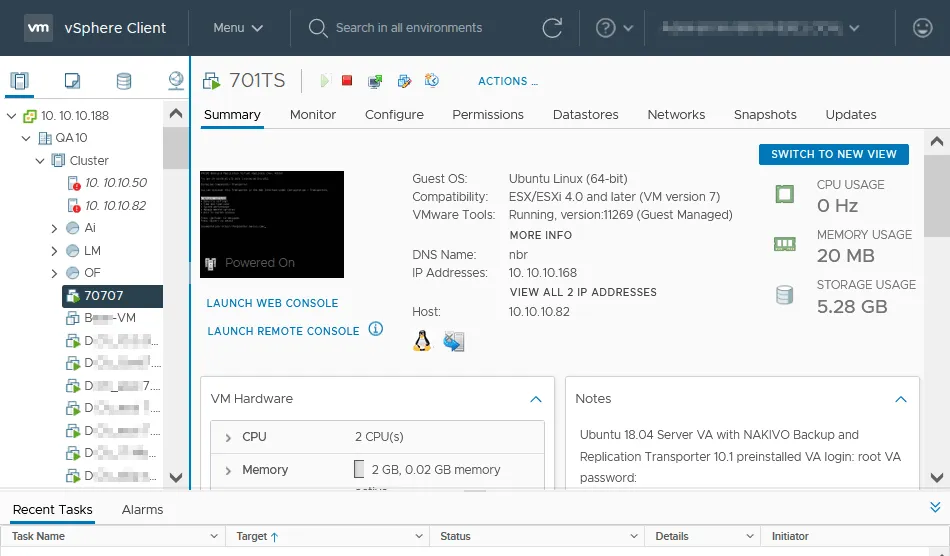

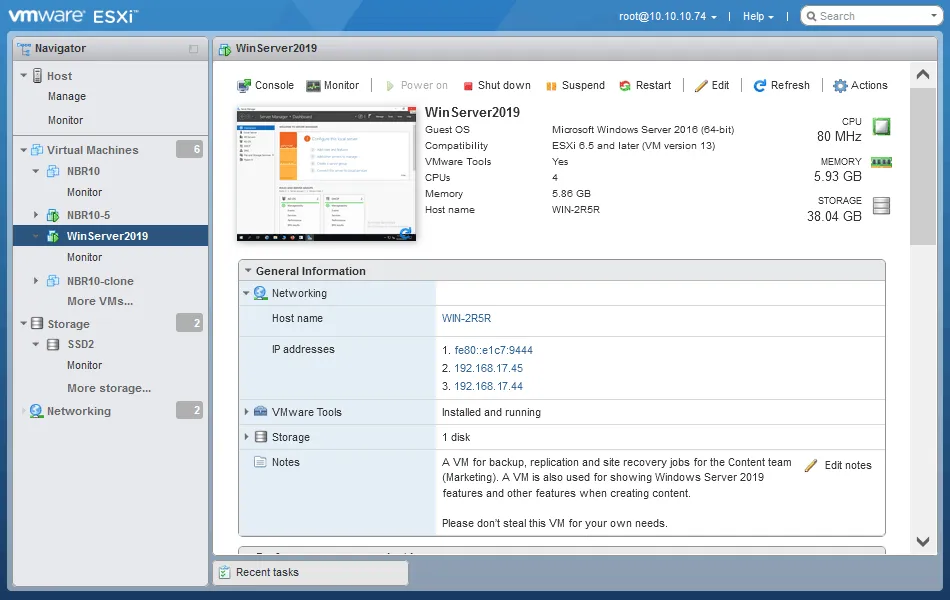

VMware vCenter Server is a centralized management system in VMware vSphere. VMware vCenter has a web interface to manage ESXi hosts, virtual machines, and configure other components. This interface is called VMware vSphere Client. To manage a standalone ESXi host, use the web interface of VMware Host Client. You can use any operating system with a supported web browser to manage vSphere with the GUI. The interface of VMware vSphere Client is displayed in the screenshot below.

Below you can see the web interface of VMware Host Client.

There are also command line interfaces such as ESXCLI to manage standalone ESXi hosts. You can enable SSH access and connect to ESXi hosts by using an SSH client. VMware vSphere PowerCLI, which is based on PowerShell cmdlets, can be used to manage ESXi hosts and vCenter servers remotely from Windows. You can also use VMware Workstation and VMware Remote Console to manage VMs on ESXi hosts.

KVM vs VMware Performance

KVM is compiled from about 10,000 lines of well-optimized code, thereby maximizing performance.

The source code of VMware ESXi is closed, but software researchers estimate that the product contains about 60 million lines of code. Sometimes VM performance on ESXi can be slightly slower, but this difference is not significant for typical workloads.

In general, performance for both of these type 1 hypervisors is very good.

Security

KVM. Security-enhanced Linux (SELinux) and secure virtualization (sVirt) are available for Red Hat KVM distributions. A combination of these features is used to detect and prevent threats, and ensure VM security and isolation. Oracle KVM is released with Ksplice.

KVM hypervisor security features include:

- Mandatory Access Control security between VMs

- iptables (the firewall for Linux) configuration for improved security

- UEFI Secure Boot for Windows guests available after manual configuration

- VM data protection with Total Memory Encryption (TME) and Multi-Key Total Memory Encryption (MKTME)

VM encryption options. You can store VM files on a file system encrypted by the host and enable encryption inside a guest OS. KVM can encrypt VM images in the QCOW2 format with the Advanced Encryption Standard (AES) algorithm transparently for a guest (128-bit encryption keys are used).

VMware supports a wide list of security tools with Compliance and Cyber Risk Solutions to help meet compliance requirements under HIPAA, CJIS, and PCI DSS.

VMware vSphere security features include:

- Permissions and user management for configuring roles and user access

- Host security features, including host smart card authentication, vSphere Authentication Proxy, UEFI Secure Boot, Trusted Platform Module, VMware vSphere Trust Authority

- Virtual machine encryption, including KMS and VMware vSphere Native Key Provider

- Guest OS security, including virtualization-based security and using a virtual trusted platform module (vTPM)

Costs, Licensing, and Support

KVM is primarily an open-source hypervisor and is distributed for free without the need to pay for a license. If you use a free KVM hypervisor, technical support is not provided. However, the wide KVM community and resources on the internet can help you fix issues and find answers. There are paid versions of the KVM hypervisor. Red Hat and Oracle are vendors that offer official technical support with their distributions.

VMware vSphere is a commercial solution, and you need to buy licenses to use its components. A VMware vSphere license contains a license for ESXi and vCenter. There are VMware vSphere Standard and Enterprise Plus licenses, with the latter giving access to more features. The number of licenses required is based on the number of processors and cores. There is a free 60-day trial period for VMware vSphere.

If you use VMware products such as VMware Tanzu, VMware NSX, VMware vSAN, VMware Horizon, and VMware vRealize in vSphere, you must buy additional licenses for these solutions. When using VMware vSphere, you are bound by the Enterprise License Agreement. Enterprise-level support is provided when you buy a commercial VMware license.

There is the ESXi Free Edition with a set of limitations, one of which is that APIs can be used only in read-only mode. As a result, the free version doesn’t allow you to perform VMware VM backup with automated backup solutions. You can get the key for Free ESXi (the license is called VMware vSphere Hypervisor) after registering on the VMware website. You cannot manage hosts with free ESXi in vCenter, connect them in a cluster, or request technical support.

In summary, the open-source KVM hypervisor costs less to deploy and is the winner in the price category of this KVM vs VMware comparison.

Updates and Upgrades

KVM is updated regularly and you can update or upgrade the KVM hypervisor by running a few commands.

VMware regularly releases patches and bug fixes for VMware vSphere. The latest examples of version updates are vSphere 7.0 Update 1, vSphere 7.0 Update 2, and vSphere 7.0 Update 3 (which was recalled in November 2021 because of driver issues). VMware provides vSphere Update Manager to manage and install updates on multiple ESXi hosts managed by vCenter.

Scalability and Limits

VMware provides application programming interfaces (APIs) for vSphere to make VM management and VM backup easier by creating or deploying additional software solutions working with vSphere and VMs.

The source code of the KVM hypervisor is open, which allows you to integrate or scale this hypervisor with other software solutions in your infrastructure.

Nested virtualization is supported by both ESXi and KVM hypervisors.

KVM limitations

KVM host limits:

- 384 CPU cores

- 6 TB of RAM

- 600 VMs running concurrently on a host (depending on the host performance)

VM limits:

- Virtual CPUs: 256 (384 on RHEL 8)

- Virtual RAM: 2 TB (6 TB on RHEL 8)

- Virtual Network interface controllers (NICs): 8

Red Hat Virtualization Manager supports up to 400 hosts.

Oracle Linux Virtualization Manager supports up to 128 hosts per engine.

Limits can vary depending on the Linux distribution (SLES, Red Hat, Oracle Linux). The maximum limits are defined after testing by the appropriate vendor and mean that this maximum configuration can work properly. Refer to the documentation for the appropriate distribution to check the limits more precisely.

VMware vSphere limitations

ESXi host limits:

- 896 logical CPUs per host

- 24 TB of RAM per host

- 1024 VMs per host

- 4096 virtual CPUs per host

VM limits:

- Virtual CPUs per VM: 256

- Virtual RAM: 6128 GB

- Virtual NICs: 10

- Virtual disk size: 62 TB

vCenter limits:

- 2,500 ESXi hosts per vCenter instance

- 64 hosts per cluster

- 8,000 VMs per cluster

- 40,000 powered-on VMs

Integration

If you use VMware ESXi, you need to use other products from the VMware vSphere platform to get the needed features. There are no such restrictions for KVM because the KVM hypervisor is open source and is compatible with other open-source and commercial solutions. You can integrate KVM with everything you need, including your existing infrastructure operating with Linux and Windows platforms. There is no vendor lock-in.

KVM uses an agent installed on a host to communicate with the physical hardware of the host.

VMware ESXi uses the VMware management plane for this purpose. This approach allows ESXi to access other VMware products through the management plane, but VMware’s control stack is required.

Active Directory

Integration with Microsoft Active Directory for user authentication is supported in VMware vSphere. This feature allows users to log in to VMware vSphere Client by using Active Directory accounts.

KVM runs on Linux machines. You can add Linux machines to an Active Directory domain. Configuration is more complicated compared to VMware vSphere.

Integration of KVM with OpenStack is excellent. The KVM hypervisor is classified as Group A with OpenStack (the maximum compatibility). Linux developers tend to prefer using KVM.

VMware vSphere is supported by the OpenStack product family. The VMware hypervisor is classified as Group B with OpenStack.

Storage

KVM uses any storage supported by Linux on the physical and logical levels. This means that you can use both SAS and SATA drives, LVM volumes, NFS and iSCSI shares, etc. Shared storage can be configured on a Linux server or Network Attached Storage (NAS).

KVM virtual machines support the use of virtual disk images and Raw Device Mapping (Virtio-scsi Passthrough). You can attach an LVM volume to a KVM VM. KVM supports VMware virtual disk images.

VMware supports local storage only on SAS disk drives formatted with the VMFS file system. SATA disk drives are connected as remote storage.

VMware vSphere supports a variety of Storage Area Network (SAN) vendors.

vSphere provides storage features such as vSAN, vVols, and Raw Device Mapping (RDM).

Disk image formats

Native disk image formats and file extensions are different for VMware and KVM hypervisors.

KVM image formats

KVM supports many virtual disk file formats, but the native formats are raw and qcow2.

- Raw is the simplest format and has no snapshot support. Raw disk images use the *.img file extension. If the file system on the underlying disk supports holes (for example, ext2 or ext3), only the written blocks of the virtual disk occupy space on the physical disk, although blocks that belong to deleted files still occupy storage space. The raw format is a bit-for-bit image that is about 10% faster than qcow2. If you back up a VM that uses a raw virtual disk, incremental backup is not supported, and only full backup is available.

- qcow2 (QEMU Copy On Write) is a QEMU virtual disk image format that provides the best set of features for KVM VMs. It supports zlib-based compression, optional AES encryption, and multiple snapshots. Because the format is copy-on-write, it supports thin provisioning, and virtual disk image files can stay small even on file systems that don’t support holes (although performance can degrade in that case). TRIM/UNMAP is supported. You can reclaim (UNMAP) unused disk space with the virt-sparsify command line tool. qcow2 disk images use the *.qcow2 file extension.

Note: If you create a snapshot of a qcow2 virtual disk in virt-manager, a new snapshot file is not created. The snapshot is created inside the qcow2 file, which is not convenient. You can use the qemu-img create or virsh snapshot-create-as command to create a snapshot as a new file.

- VMDK is a VMware virtual disk image format. You can use this format if you plan to migrate a VM between VMware and KVM hypervisors. VMware hypervisors don’t support native KVM virtual disk images (raw and qcow2).

- VDI is the native Oracle VirtualBox virtual disk file format.

- VHDX is used by Hyper-V VMs. Read about the difference between VHD and VHDX.

KVM also supports dmg, parallels, vvfat, qed, qcow, cow, nbd, cloop, bochs disk image formats for higher compatibility.
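The note above mentions using virsh snapshot-create-as to keep a snapshot in a separate file. Here is a minimal sketch of that approach, assuming a libvirt-managed VM with qcow2 disks. The function name `external_snapshot` and the `VIRSH` override (handy for dry runs) are hypothetical; the domain and snapshot names are placeholders you supply.

```shell
# Create a disk-only (external) snapshot so new writes go to a separate overlay file.
# $1: libvirt domain name, $2: snapshot name.
# VIRSH is a hypothetical override used for dry runs; it defaults to virsh.
external_snapshot() {
    domain="$1"
    snapshot="$2"
    # --disk-only skips saving memory state; --atomic makes the operation all-or-nothing.
    "${VIRSH:-virsh}" snapshot-create-as "$domain" "$snapshot" --disk-only --atomic
}
```

Setting `VIRSH=echo` before calling the function prints the virsh arguments instead of running them, which is a cheap way to review the command first.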

VMware image formats

VMware ESXi supports only the VMDK virtual disk file format. A VMware ESXi virtual disk consists of a *.vmdk descriptor file and *-flat.vmdk virtual disk data file. Thin provisioning and automatic UNMAP (free space reclamation) are supported.

VMware hypervisors don’t support KVM virtual disk images. You need to convert a KVM virtual disk image to the VMware VMDK format with the qemu-img command line tool for migrating a virtual disk to a VMware hypervisor. You can use the cross-platform recovery feature to convert supported virtual disk formats for VM migration between hypervisors by using a VM backup.
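As a rough illustration of the qemu-img conversion described above, the sketch below converts a qcow2 image to a VMDK file in the same directory. The function name `qcow2_to_vmdk` and the `QEMU_IMG` override are hypothetical. The result uses qemu-img's default VMDK subformat; an ESXi datastore may need a different subformat or a further conversion step on the host, so treat this as a starting point rather than a complete migration procedure.

```shell
# Convert a qcow2 image to VMDK next to the source file.
# $1: path to the qcow2 image.
# QEMU_IMG is a hypothetical override used for dry runs; it defaults to qemu-img.
qcow2_to_vmdk() {
    src="$1"
    dst="${src%.qcow2}.vmdk"   # disk.qcow2 -> disk.vmdk
    # -p shows progress, -f names the source format, -O names the output format.
    "${QEMU_IMG:-qemu-img}" convert -p -f qcow2 -O vmdk "$src" "$dst"
}
```

The source image is left untouched, so you can verify the converted disk before removing anything.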

It is recommended that you protect data stored on virtual disks regardless of the format used. For further information, read this white paper about data protection trends in 2023.

KVM vs VMware Networking

Virtualizing networks and connecting VMs by using virtual networks to physical networks is required for virtual datacenter operation. Virtual network adapters and virtual switches are other requirements for VM network connection.

KVM

- Open vSwitch (OVS) is the open-source implementation of a virtual switch that can be used with KVM. Various bridging modes are supported including a private virtual bridge and public virtual bridge. Distribution across physical servers is supported similarly to a Distributed Virtual Switch in VMware vSphere.

- You can configure NIC bonding and NIC teaming to ensure link redundancy or link aggregation if you have two or more network interfaces on a Linux server running a KVM hypervisor. Configuration is performed in the command line interface manually. NIC bonding allows you to configure multiple network links to work as one and sum their bandwidth. For example, two 1-Gbit network adapters work as a single 2-Gbit network adapter (they make a single fat pipe).

NIC teaming allows you to configure failover and load balancing. If you configure two 1-Gbit network adapters to use NIC teaming, you do not get 2-Gbit throughput when transferring data to or from a single computer. However, if two computers with 1-Gbit network speed connect to your server, each of them can utilize the full 1-Gbit bandwidth.

- The virtio network interface supports VLANs. You can use libvirt, which is an open-source virtualization API, to manage networking functionality. There is a DHCP server service integrated in QEMU. You can configure VXLAN networks on Linux machines with KVM. The Linux distribution on which you run KVM provides extensive firewall options if you configure iptables.

VMware vSphere

VMware vSphere has two types of virtual switches: the standard vSwitch and Distributed Virtual Switch. A standard vSwitch is configured on each ESXi host individually. A distributed vSwitch is configured in vCenter centrally and configuration is applied to all virtual switches on all selected ESXi hosts.

VLAN is supported in VMware virtual switches. If you want to configure VXLAN, you need to use VMware NSX. VMware provides the NSX solution for software-defined networking, which provides powerful network virtualization options.

VMware ESXi in vSphere supports link aggregation (NIC teaming) for network load balancing and failover. You can configure link aggregation in the web interface of VMware vSphere Client or VMware Host Client in a few steps. VMware ESXi includes a built-in firewall with basic options.

VM Migration

KVM supports VM live migration between KVM hosts, allowing you to migrate running VMs without service interruption. During a live migration, the VM is powered on, the network is working, and applications are running. Note that the VM files must be stored on shared storage.
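A live migration like the one described above can be started from the command line with virsh. This is a minimal sketch, assuming both hosts run libvirt, the VM disks are on shared storage, and the source host can reach the destination over SSH. The function name `live_migrate`, the `VIRSH` override, and the host name in the usage note are hypothetical.

```shell
# Live-migrate a running VM to another KVM host over SSH.
# $1: libvirt domain name, $2: destination host name.
# VIRSH is a hypothetical override used for dry runs; it defaults to virsh.
live_migrate() {
    domain="$1"
    dest_host="$2"
    # --live keeps the guest running; --persistent defines the VM on the destination.
    "${VIRSH:-virsh}" migrate --live --persistent --verbose \
        "$domain" "qemu+ssh://${dest_host}/system"
}
```

For example, `live_migrate vm1 kvm2.example.com` would move the running VM named vm1 to the placeholder host kvm2.example.com.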

KVM supports storage migration when you need to migrate VM files stored on one KVM host to another KVM host (shared storage is not required in this case). KVM developers are planning to implement KVM storage live migration soon.

VMware vSphere has the vMotion feature for performing VM live migration between ESXi hosts.

VMware supports VM storage live migration. VMware vSphere Storage vMotion allows you to migrate VM files between ESXi hosts and their datastores even if a VM is running.

Clustering Features

KVM clustering features are limited. One of the options to create a high availability cluster is to use DRBD network replication, which supports only two nodes that are synchronized without encryption. Configuring an encrypted VPN connection can protect data transferred over the network. KVM doesn’t have built-in tools such as Fault Tolerance. A Heartbeat application is required for exchanging service messages about a cluster state within the cluster. Pacemaker is a cluster resource manager.

VM load balancing is not available out of the box. oVirt and commercial third-party software with multiple modules are solutions for load balancing. Configuring load balancing for KVM is not easy and requires a lot of manual operations. The oVirt virtualization manager, which can be used to deploy and manage a cluster for KVM VMs, requires buying a subscription for technical support.

VMware provides great clustering features such as High Availability (HA) and Distributed Resource Scheduler (DRS). If you use an HA cluster, you can configure Fault Tolerance for multiple VMs. There is also Distributed Power Management (DPM), which enables you to save power if there are unused resources within a cluster and VMs don’t need additional computing resources. Configuring VMware clustering features is straightforward and can be done in the user-friendly graphical interface of VMware vSphere Client. There are many clustering options in VMware vSphere. There is an ebook available about VMware clustering.

VMware is the winner in the clustering category of the VMware vs KVM comparison.

Guest OS Support

VMware ESXi and KVM hypervisors support many families of operating systems that can run on virtual machines including:

- Windows (starting from old ones like Windows 95 and Windows NT)

- Linux, including distributions like Ubuntu, Debian, openSUSE, Red Hat, CentOS, Fedora, Oracle Linux, Kali Linux, etc., as well as FreeBSD and other BSD-based OSs

- Solaris, OpenSolaris, Novell Netware, and MS-DOS

- macOS can be installed on ESXi but with additional steps such as installing patches. If you want to install macOS on KVM, you need to install the required packages on the Linux host.

Guest OS support is almost identical.

Adaptation for Containers

The popularity of containers is growing, and container support is the desired option in virtualized datacenters.

KVM

Running containers using the KVM/Libvirt provider allows you to run the Docker engine on a Docker machine (VM) transparently without manually configuring the VM. The KVM driver to run containers can be installed by downloading the docker-machine-driver-kvm package.

Note: A similar driver is also available for VirtualBox and Hyper-V.

Running containers by using the Docker/KVM driver allows you to get better isolation of the containers from the host machine and improve performance compared to creating VMs manually with containers inside the VMs. You can just run the docker-machine create command to create additional local machines. A lightweight Linux distribution with the Docker daemon installer is usually used (for example, boot2docker). The virsh tool is used to view libvirt resources. The docker-machine ls command is used to list the available Docker machines. You can also deploy Kubernetes on machines running the KVM hypervisor.

VMware vSphere

vSphere Integrated Containers. This feature is used to run containers as VMs on ESXi hosts. A virtualized vSphere infrastructure with clustering is a reliable platform to run containers. VMware vSphere networks are used as networks for Docker containers and are displayed in the Docker client. The lightweight Photon OS VM, designed to be “just a kernel”, boots from an ISO image to run containers. You can manage container VMs in VMware vSphere Client.

The major components are vSphere Integrated Containers Engine and vSphere Integrated Containers Registry. These components support Docker images. Container images are located on VMFS storage, and VMware vSphere is the control plane. However, deploying containers as VMs is not optimal when compared to deploying containers on the newer VMware Tanzu platform.

VMware Tanzu is the newest service to run containers in VMware vSphere. This service is the successor of vSphere Integrated Containers and has more advantages. VMware Tanzu is a part of ESXi, and containers can run directly on an ESXi host more efficiently. Kubernetes deployment is supported. VMware vSphere Tanzu creates a control plane inside the ESXi hypervisor layer. The major components of Tanzu are Tanzu Runtime Services and Hybrid Infrastructure Services.

You can manage Tanzu and containers in vCenter and VMware vSphere Client. The traditional kubectl tool is also available to manage Kubernetes. It is recommended that you deploy VMware NSX-T to use vSphere Pods, Embedded Harbor Registry, and network load balancer. However, you can use standard and distributed virtual switches to configure networks for containers without the advanced features. A High Availability cluster that uses shared storage is the optimal solution for running Tanzu with containers in VMware vSphere. Spherelet agents are installed on ESXi hosts and make them operate as Kubernetes worker nodes.

VMware vSphere administrators can provide access to DevOps and other users to create and configure containers using vSphere Integrated Containers and VMware Tanzu. There is a service portal for users. VMware Tanzu requires a license for one of the available Tanzu editions.

Data Backup

In terms of the KVM vs VMware hypervisor comparison, these two virtualization platforms are not identical in backup configuration. It is better to plan the backup process based on requirements such as backup time, automation, service interruption (downtime), RTO and RPO before selecting the virtualization platform. Take into account the backup options for the hypervisor you are going to deploy in your infrastructure.

KVM backup

The basic way to back up KVM VMs is with virsh, the Linux command line tool for KVM management. In the simplest case, the VM must be powered off. You can also use commands and scripts to back up running VMs and automate the backup process, with cron running the scripts on a schedule. Backing up running VMs requires using snapshots. A snapshot captures data in a consistent state at the moment of snapshot creation and allows you to copy consistent data while a VM is running. Snapshot and backup are not synonymous. Let’s look at the methods for KVM VM backup.

- LVM volumes attached to a VM. You can use the native LVM snapshot functionality and back up VM data. The advantages are simplicity and high performance. The disadvantages are difficult management, limited ability to migrate to another KVM host, and less flexibility in managing VM storage space.

- RAW (IMG) virtual disk images. Snapshots are not supported. Only backup of powered-off VMs is supported.

- QCOW2 virtual disk images. Snapshots are supported, which means that you can back up running VMs.
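The simplest case in the list above, copying a powered-off VM, can be scripted as follows. This is a minimal sketch, assuming a libvirt-managed VM with a single disk image file and a backup directory that already exists. The function name `cold_backup` and the `VIRSH` override are hypothetical, and the script does not handle multiple disks, retention, or verification.

```shell
# Copy a powered-off VM's definition and one disk image to a backup directory.
# $1: libvirt domain name, $2: disk image path, $3: existing backup directory.
# VIRSH is a hypothetical override used for testing; it defaults to virsh.
cold_backup() {
    domain="$1"
    disk="$2"
    dest="$3"
    state="$("${VIRSH:-virsh}" domstate "$domain")" || return 1
    if [ "$state" != "shut off" ]; then
        echo "refusing to copy: $domain is '$state', not 'shut off'" >&2
        return 1
    fi
    # Save the VM definition so the VM can be re-created with 'virsh define'.
    "${VIRSH:-virsh}" dumpxml "$domain" > "$dest/$domain.xml" || return 1
    cp "$disk" "$dest/"
}
```

A script that calls this function could then be scheduled with cron, for example with a crontab entry such as `0 2 * * * /usr/local/bin/kvm-cold-backup.sh`, where the script path is a placeholder.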

To create a snapshot of a running VM, a QEMU Guest Agent must be installed on a guest OS, and Channel Device with the org.qemu.guest_agent.0 name must be set in the VM configuration. You can consider applications using the Backup and Restore API for KVM backup. Incremental backup capabilities are based on libvirt and oVirt functionality.

KVM replication can be performed with Distributed Replicated Block Device (DRBD), which is a part of the Linux kernel. VM replication is synchronous in this case.

VMware vSphere backup

VMware vSphere provides powerful data protection functionality including backup and replication of virtual machines. VMware vSphere APIs for data protection (vStorage APIs for Data Protection) allow you to back up running VMs on the host level by using snapshots and data quiescing to preserve data in a consistent state. On Free ESXi, the APIs for data protection are disabled, so you can only use scripts and manual backup of powered-off VMs.

You need to install VMware Tools on a guest OS to quiesce data correctly when taking a snapshot. A new snapshot virtual disk file is created on a datastore attached to the ESXi host.

VMware vSphere supports Changed Block Tracking to track changed blocks for incremental backup. Incremental backup lets you save storage space by copying only the data that has changed since the previous backup operation.

In the category of VM backup, the winner is VMware vSphere in this VMware vs KVM hypervisor comparison.

Selecting a backup solution

A good backup solution should be designed to make the most of VMware’s native features like change tracking and quiescing. NAKIVO Backup & Replication is an agentless data protection solution for VMware VMs running in vSphere. NAKIVO Backup & Replication supports advanced functionality for data protection including:

- Incremental, app-aware backup of running VMs

- Backup of VMs in a cluster

- Advanced retention settings

- Instant VM recovery and P2V recovery

- Instant granular recovery for files and app objects

- Immutable repositories in local and cloud storage

KVM vs VMware – Comparison Table

Let’s sum up the main points of the KVM vs VMware hypervisors comparison into a table before drawing conclusions.

| Feature | KVM | VMware vSphere |
| --- | --- | --- |
| Hypervisor Type | Type 1 | Type 1 |
| Deployment Complexity | Difficult | Easy |
| Storage | All types of storage supported in Linux | SAS disks for local storage. VMFS, iSCSI, NFS datastores |
| vSAN, vVols | No | Yes |
| Native virtual disk format | RAW(IMG), QCOW2 | VMDK |
| Raw Device Mapping | Yes. LVM is supported | Yes |
| Thin provisioning | Yes | Yes |
| Native file systems | Linux file systems, NFS | VMFS, NFS |
| VM snapshots | Yes | Yes |
| VM live migration | Yes | Yes |
| VM storage migration | Yes | Yes |
| VM Live storage migration | No | Yes |
| Clustering features | Yes (limited) | Yes (wide support) |
| High availability | Yes, with DRBD | Yes |
| Load balancing | Limited | Yes (DRS) |
| Fault Tolerance | No | Yes |
| Management interface | Command line (virsh), KVM virt-manager | vSphere Client, Host Client, ESXCLI, PowerCLI |
| AD integration | Yes | Yes |
| Price | Free/Low (pay only for tech support) | High |
| Performance | High | High |
| VM Backup options | Limited | Wide |
| Tech support | Oracle KVM, Red Hat KVM | Yes |
| Supported guest OSs | Wide | Wide |
| Networking | Virtual switch, Distributed switching, NIC bonding, link aggregation | vSwitch, Distributed vSwitch, NIC Teaming and link aggregation, NSX |
| Firewall | Wide Linux functionality with iptables | Basic ESXi firewall or additional functionality of NSX |
| Container Integration | Yes | Yes |
| VDI | Yes, with OpenStack | Yes, with VMware Horizon |
| Nested Virtualization | Yes | Yes |
| VM Linked Clones | Yes | Yes |

Conclusion

In this KVM vs VMware hypervisor comparison, each platform has its pros and cons depending on your needs and the use case. However, if you are using VMs to run critical applications and store critical data, note that backup options for KVM virtual machines are limited. Backing up VMs in the running state can be a challenge. VMware vSphere provides APIs for data protection that allow developers to create functional backup solutions to protect VMs on a host level. If you use VMware vSphere, download NAKIVO Backup & Replication for free to protect your VMs.