The Differences Between iSCSI, SAS and FC Protocols

There are three main storage networking technologies used in enterprises, each with its own advantages and disadvantages. In this post, we’ll compare the FC, SAS, and iSCSI storage protocols to understand the best uses of each in a VMware vSphere environment. You can also apply this information when setting up storage in other IT infrastructures.

FC vs SAS vs iSCSI: Technologies Comparison

A common technique for increasing redundancy, availability, and load-balancing efficiency in a vSphere environment is to configure ESXi hosts in a vSphere cluster. Shared storage is one of the most important requirements for a cluster. There are several ways to create shared storage:

- SAS interfaces on storage servers and ESXi hosts

- Fibre Channel (FC)

- iSCSI

- Virtual SAN (vSAN)

vSAN is built into VMware vSphere and can be set up via vSphere Client, while the other three approaches require additional hardware or software. Let’s look at the differences between iSCSI and SAS, and compare FC to the other approaches, to understand the different aspects of these technologies.

- Fibre Channel is the premium solution for storage systems that run mission-critical applications requiring high performance, availability, and reliability in large organizations. Note that such a solution is expensive.

- SAS is a more affordable technology, and SAS-based solutions are widely used in enterprises when reliability, high availability, and performance are a priority.

- iSCSI is the most affordable solution of the three and can be used with an existing infrastructure when the budget is limited.

FC vs SAS

Both of these mature technologies provide a high level of performance, reliability, and availability. However, Fibre Channel provides slightly higher data transfer performance.

- SAS has a better performance-to-price ratio and is optimal for enterprise storage.

- FC networks are widely used to build SANs that hold very large amounts of data in enterprise environments.

- SAS disks can be used in FC networks, with protocol bridging translating FC traffic to the SAS disk drives.

- SAS storage is the optimal choice if the storage is located in the same rack or room as the server (direct-attached storage).

When the infrastructure grows and SAS storage capacity is no longer sufficient, you can consider Fibre Channel SAN storage, as it provides a higher level of scalability.

SAS vs iSCSI

SAS is an interface for connecting disk devices that uses SCSI commands, while iSCSI is a protocol that encapsulates SCSI commands for transport over TCP/IP networks. Using SAS drives in servers provides higher performance and reliability at a reasonable price. iSCSI allows you to use even SATA disk drives in servers used for shared storage.

FC vs iSCSI

Fibre Channel is the top-tier solution: it uses its own networking standards to carry SCSI commands to disk drives in a storage area network (SAN). iSCSI can be used as an alternative way to connect SAN storage (LUNs) when the deciding factors are low cost, moderate performance, and sufficient scalability. The Ethernet networks used for iSCSI are universal and common, but they were not designed primarily for storage traffic. Thus, Fibre Channel wins in the performance category.

Let’s summarize the main parameters of all the technologies in this FC vs SAS vs iSCSI table.

| | SAS | FC | iSCSI |
|---|---|---|---|
| Description | A serial interface for disk devices that uses SCSI commands | A set of standards (including networking) for transferring encapsulated SCSI commands | A network protocol that encapsulates SCSI commands for transport over existing TCP/IP networks |
| Architecture | Serial, point-to-point | Switched, supports multiple concurrent transactions | Runs over the standard TCP/IP stack on Ethernet networks |
| Performance | High | Very high | Medium |
| Ease of use | Easy | Hard | Medium |
| Flexibility/scalability | Medium | High | High |
| Max number of devices | Varies (256 or 65,535) | 127 in an arbitrated loop, about 16 million in a switched fabric | Unlimited |
| Max distance between devices | 10 meters | 30 meters (copper), 50 km (optical) | Depends on the underlying infrastructure |
| Costs | Medium | High | Low |
| Target market | Small, medium, and large businesses | Medium and large enterprises | Small and medium businesses |
| Support in vSphere | Yes | Yes | Yes |
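The "16 million" figure for a switched fabric comes from Fibre Channel's 24-bit port address (FC_ID), which fabric switches divide into 8-bit Domain, Area, and Port fields. The minimal sketch below, with hypothetical helper names `split_fcid` and `join_fcid`, shows that arithmetic. The raw address space is an upper bound: many values are reserved (valid switch domain IDs, for example, run only from 1 to 239), so the practical device count is lower.

```python
# Minimal sketch: Fibre Channel 24-bit port address (FC_ID) arithmetic.
# split_fcid/join_fcid are hypothetical helpers, not part of any FC library.

ADDRESS_BITS = 24  # width of an FC_ID in a switched fabric


def split_fcid(fcid: int) -> tuple[int, int, int]:
    """Split a 24-bit FC_ID into its (domain, area, port) bytes."""
    if not 0 <= fcid < 2 ** ADDRESS_BITS:
        raise ValueError("FC_ID must fit in 24 bits")
    return (fcid >> 16) & 0xFF, (fcid >> 8) & 0xFF, fcid & 0xFF


def join_fcid(domain: int, area: int, port: int) -> int:
    """Combine domain, area, and port bytes into a 24-bit FC_ID."""
    for field in (domain, area, port):
        if not 0 <= field <= 0xFF:
            raise ValueError("each field must fit in 8 bits")
    return (domain << 16) | (area << 8) | port


if __name__ == "__main__":
    print(f"Raw 24-bit address space: {2 ** ADDRESS_BITS:,}")  # 16,777,216
    print(split_fcid(0x010A00))                               # (1, 10, 0)
    print(f"{join_fcid(1, 10, 0):06X}")                       # 010A00
```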

VMware Shared Storage Approach Comparison

Here’s a brief comparison table of the approaches for creating a VMware vSphere shared storage, including vSAN.

| Approach | Additional Hardware | Additional Software | Dedicated Server | Management Complexity |
|---|---|---|---|---|
| SAS | SAS adapters | Yes | Yes | Medium |
| Fibre Channel | FC controllers, HBAs, FC switches | Yes | Yes | Dedicated admin needed |
| iSCSI | No | Yes | Yes | Specific server configuration needed |
| vSAN | No | No | No | Configured via vSphere Client |

Storage Technologies Overview

Let’s take a more detailed look at each of the approaches for creating a VMware shared storage.

What is SAS?

SAS, or Serial Attached SCSI, is an interface standard widely used in servers to attach disk drives, DVD drives, and tape drives. It is commonly used for direct-attached storage (DAS) in servers such as ESXi hosts, and in storage servers that share their disks over the network.

SAS, the successor of parallel SCSI, uses the SCSI (Small Computer System Interface) command set over a serial link optimized for higher efficiency. A SAS controller supports attaching both SAS and SATA disk drives. SAS is a reliable storage interface standard that has been in use for years and has been greatly enhanced during that time.

- Components. A SAS system includes three main components:

- Initiator – the device in the host computer (typically a SAS controller) that sends requests to the SAS disk drives connected to it

- Target – a disk device that contains logical units and responds to requests from the initiator

- Service delivery subsystem – the equipment, such as cables, that connects an initiator to a target

- Performance. SAS allows you to combine multiple physical links (lanes) into a single wide port to increase the bandwidth between the connected devices and the controller. SAS-3 provides an interface speed of 12 Gbit/s per lane, SAS-4 provides 22.5 Gbit/s (marketed as 24G SAS), and SAS-5, which is under development, is expected to provide 45 Gbit/s. In practice, the speed also depends on the type of drive connected, HDD or SSD.

- Flexibility. SAS storage controllers, also called SAS host bus adapters (HBAs), must be installed in servers. A SAS controller is an expansion card installed in a PCIe slot (older controllers used PCI slots). A motherboard has a finite number of PCIe slots, and a SAS controller has a finite number of SAS ports. You can install expanders (edge and fanout expanders) to increase the number of SAS devices the controller can address. The maximum cable length is 10 meters. Take these possibilities and limitations into account when planning a scalable storage system.

- Ease of Use. Installing a SAS storage subsystem is straightforward for direct-attached storage: install SAS storage controllers (which can be SAS RAID controllers) and attach disks. SAS expanders can be used to build a SAN with SAS disks, and Fibre Channel can then be used to transfer data to an external network such as a SAN.

- Costs. A SAS storage system is affordable for enterprises, which is one of the main advantages of SAS.
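To see how combining links into a wide port raises bandwidth, the minimal sketch below converts a SAS generation's line rate into usable per-lane throughput and multiplies it by the number of lanes. The encoding ratios (8b/10b for SAS-3, 128b/150b for SAS-4) are our assumptions based on the published specifications, and protocol overhead above the encoding layer is ignored. The four-lane (x4) port used in the example is a common configuration, not a requirement.

```python
# Minimal sketch: usable SAS bandwidth per lane and per wide port.
# Assumptions: SAS-3 = 12 Gbaud with 8b/10b encoding; SAS-4 = 22.5 Gbaud
# with 128b/150b encoding. Frame headers and idle primitives are ignored.

SAS_GENERATIONS = {
    # name: (line rate in Mbaud, payload bits, encoded bits)
    "SAS-3": (12_000, 8, 10),
    "SAS-4": (22_500, 128, 150),
}


def lane_mb_per_s(generation: str) -> int:
    """Approximate usable bandwidth of one lane in MB/s."""
    mbaud, payload_bits, encoded_bits = SAS_GENERATIONS[generation]
    data_mbit = mbaud * payload_bits // encoded_bits  # remove encoding overhead
    return data_mbit // 8                             # Mbit/s -> MB/s


def wide_port_mb_per_s(generation: str, lanes: int) -> int:
    """Bandwidth of a wide port that aggregates `lanes` physical links."""
    return lane_mb_per_s(generation) * lanes


if __name__ == "__main__":
    for gen in SAS_GENERATIONS:
        print(f"{gen}: {lane_mb_per_s(gen)} MB/s per lane, "
              f"{wide_port_mb_per_s(gen, 4)} MB/s per x4 wide port")
```

With these assumptions, a SAS-3 lane carries about 1,200 MB/s and a SAS-4 lane about 2,400 MB/s, so an x4 wide port reaches roughly 4,800 and 9,600 MB/s respectively, before drive speed becomes the limiting factor.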

The SAS approach requires hardware SAS interfaces on both the storage server and the host side. The technology provides speeds of up to 22.5 Gbit/s per lane with SAS-4 (SAS-5 is still in development), but it has several limitations.

- A SAS infrastructure has limited scalability because of the finite number of SAS ports on the storage server. However, if you need more storage, you can replace disks with larger ones or install an additional storage server.

- The storage server and disks must be mounted in the same rack because of limitations on cable length. Thus, this approach can work well for small-to-medium environments with high data transfer speed demands but not for very large ones.

What is Fibre Channel?

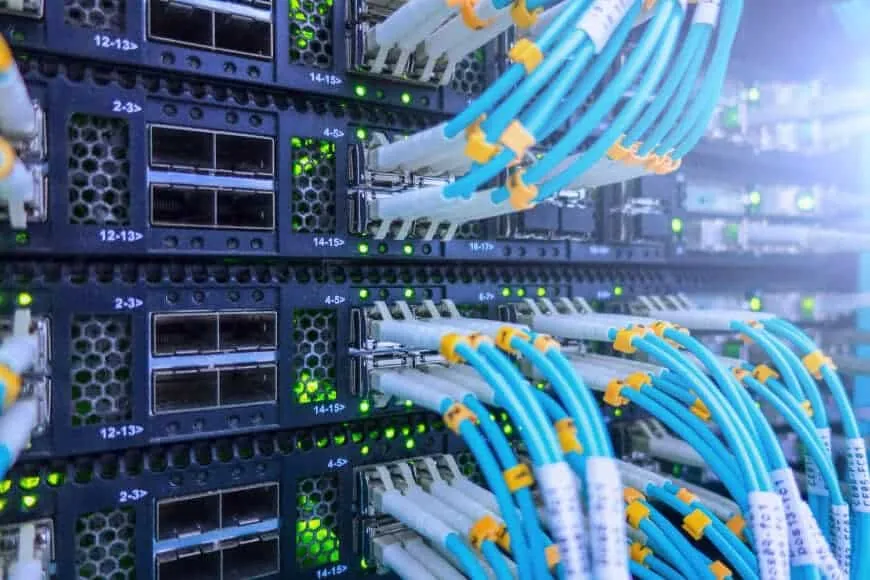

Fibre Channel (FC) is an interconnect technology for high-performance storage systems that include disks and network devices. FC transfers SCSI data between devices without translating it into another protocol.

- Architecture. The Fibre Channel standard architecture has five layers and differs from the OSI model used for Ethernet networks:

- FC-0 is the physical layer. It defines cables, connectors, and the electrical and optical characteristics of signal transmission.

- FC-1 is the transmission protocol layer that is responsible for data encoding and decoding, data synchronization, maintenance of links, and error detection.

- FC-2 is the framing and signaling protocol layer. It defines the structure and organization of the transferred data and is responsible for data sequencing and flow control. Segmentation and reassembly of the protocol data units that are received and sent by devices are performed at this layer.

- FC-3 is the common services layer. It provides services such as RAID, encryption, data striping, and multicasting, and leaves room for FC features that may be developed in the future.

- FC-4 is the upper-layer protocol, or mapping, layer. It describes the protocols that can use FC as a transport and how they are used, maps these protocols to the FC-0 to FC-3 layers, and provides a communication point between upper-layer protocols (such as SCSI) and the lower FC layers.

The FC model and hardware are designed for protocol offload engines (POE): the host bus adapter, rather than the host CPU, processes the protocol stack. This results in low transmission overhead and improves overall efficiency. Most top SAN systems use the Fibre Channel protocol to package SCSI commands into FC frames and transfer traffic from hosts (servers) to shared storage.

- Performance. The biggest advantage of Fibre Channel is speed, and it can be used to build a fully functional high-speed network. Gen 7 FC networks support 64GFC and 256GFC with 12,800 MB/s and 51,200 MB/s of full-duplex throughput (both directions combined), respectively. 128GFC provides full-duplex throughput of up to 24,850 MB/s. Dual-channel compatibility is another reason why Fibre Channel is widely used for storage interconnect in storage area networks (SAN).

- Flexibility and scalability. Simultaneous access to data by multiple hosts and connections over long distances are advantages of Fibre Channel. FC requires special hardware and equipment: host bus adapters installed in servers (such as ESXi hosts), FC controllers on storage servers (that are members of the SAN), FC switches, cables, etc. You need to install switches if the number of ESXi hosts is greater than the number of FC ports on the storage. Such a layout is common for large server infrastructures. It is possible to use SAS disk drives in FC SAN systems.

Long-distance support allows you to place the mirrored disks of a redundant array in different locations. Disk data can be mirrored to a remote site located a few kilometers away from the primary site, which helps you avoid data loss caused by a local disaster.

Both copper and optical cables are supported, but you should use optical cables to get all the advantages of the Fibre Channel technology. Copper cables support distances of up to 30 meters, depending on cable quality. Optical cables support distances from 100 meters up to 50 kilometers, depending on cable type and quality. Optical cables can be single-mode or multi-mode, and single-mode fiber provides a higher transmission rate, bandwidth, and distance. Use a high-quality SFP (small form-factor pluggable) transceiver to avoid performance degradation.

As for scalability, you can use Fibre Channel storage systems in environments of all sizes, from small to large. As an interconnect technology, Fibre Channel supports point-to-point connections, switched topology, and an arbitrated loop.

- Ease of use. Fibre Channel differs from the familiar Ethernet networks used to connect devices, so learning the technology requires additional effort. Configuring Fibre Channel SAN storage is complex, and you need to install specialized hardware and equipment.

- Cost. Hardware and equipment used for Fibre Channel storage systems are expensive. Such an infrastructure works best for large banks and corporations, where data transfer speed and security are very high priorities.
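Fibre Channel speed names follow a simple convention: each "GFC" unit corresponds to roughly 100 MB/s in each direction, and full-duplex figures count both directions together (64GFC is about 6,400 MB/s per direction, or 12,800 MB/s full duplex). The minimal sketch below applies that rule of thumb, which is our assumption (published net throughput for individual variants differs somewhat), to convert a speed name into throughput and to estimate a one-way bulk transfer time.

```python
# Minimal sketch: nominal Fibre Channel throughput from the speed name.
# Assumption: the rule of thumb that "nGFC" carries about n x 100 MB/s in
# each direction; published net figures for individual variants differ.

MB_PER_S_PER_GFC = 100


def fc_throughput(gfc: int) -> dict[str, int]:
    """Nominal per-direction and full-duplex throughput in MB/s."""
    per_direction = gfc * MB_PER_S_PER_GFC
    return {"per_direction": per_direction, "full_duplex": 2 * per_direction}


def transfer_seconds(size_gb: float, gfc: int) -> float:
    """Time to copy size_gb (decimal GB) one way at the nominal rate."""
    return size_gb * 1000 / fc_throughput(gfc)["per_direction"]


if __name__ == "__main__":
    for speed in (32, 64, 256):
        print(f"{speed}GFC:", fc_throughput(speed))
    print(f"1 TB over 32GFC: about {transfer_seconds(1000, 32):.0f} s")
```

For example, copying 1 TB one way over a 32GFC link takes about 312.5 seconds at the nominal rate; real transfers are slower because of protocol overhead and the speed of the disks on both ends.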

Fibre Channel over Ethernet (FCoE)

Fibre Channel over Ethernet (FCoE) is a technology that allows you to use underlying high-speed physical Ethernet networks (such as 10 Gbit/s networks) to carry Fibre Channel traffic. FC frames are encapsulated in Ethernet frames.

FCoE has been developed for better compatibility with hardware used for Ethernet networks, but keep in mind that the overhead is higher than for a native Fibre Channel storage network. The main idea of FCoE is to reduce cost by using the Fibre Channel technology on Ethernet networks without buying special FC equipment. Note that FCoE can be considered an extension of FC but not a replacement.
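One practical consequence of wrapping whole FC frames in Ethernet is frame size: a full-size FC frame does not fit into a standard Ethernet frame, so FCoE networks need "baby jumbo" frames (commonly configured at about 2.5 KB). The minimal sketch below adds up the encapsulation layers. The field sizes are our reading of the FC-BB-5 FCoE definition and IEEE 802.3/802.1Q; treat them as assumptions to verify against the standards.

```python
# Minimal sketch: size of a maximum-size FCoE frame on the wire.
# Field sizes are assumptions based on FC-BB-5 (FCoE) and IEEE 802.3/802.1Q.

ETH_HEADER = 14        # destination MAC, source MAC, EtherType (0x8906 for FCoE)
VLAN_TAG = 4           # 802.1Q tag, used to carry the FCoE traffic priority
FCOE_HEADER = 14       # version, reserved bits, start-of-frame (SOF)
FC_HEADER = 24         # native Fibre Channel frame header
FC_MAX_PAYLOAD = 2112  # maximum FC data field
FC_CRC = 4
FCOE_TRAILER = 4       # end-of-frame (EOF) plus reserved bytes
ETH_FCS = 4            # Ethernet frame check sequence

STANDARD_MAX_FRAME = 1522  # standard Ethernet maximum with an 802.1Q tag


def fcoe_frame_bytes(fc_payload: int = FC_MAX_PAYLOAD) -> int:
    """Total Ethernet frame size for an FC frame with the given payload."""
    if not 0 <= fc_payload <= FC_MAX_PAYLOAD:
        raise ValueError("FC payload must be 0..2112 bytes")
    fc_frame = FC_HEADER + fc_payload + FC_CRC
    return (ETH_HEADER + VLAN_TAG + FCOE_HEADER + fc_frame
            + FCOE_TRAILER + ETH_FCS)


if __name__ == "__main__":
    size = fcoe_frame_bytes()
    print(size, "bytes; fits in a standard frame:", size <= STANDARD_MAX_FRAME)
```

Under these assumptions, a maximum-size FCoE frame is 2,180 bytes, which is why switch ports and adapters carrying FCoE must accept frames larger than the standard Ethernet maximum.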


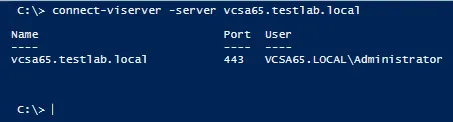

What is iSCSI?

iSCSI (Internet Small Computer System Interface) is a protocol that carries SCSI commands over TCP/IP networks. The iSCSI protocol shares data at the block level, unlike SMB and NFS, which share data at the file level. This protocol allows you to use Ethernet network equipment, including network cards, switches, and cables, together with NAS devices or storage servers that have SAS or SATA disks installed.
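To make "carries SCSI commands over TCP/IP" concrete, the minimal sketch below wraps a SCSI READ(10) command descriptor block (CDB) in the 48-byte Basic Header Segment (BHS) of an iSCSI SCSI Command PDU, following the field layout in RFC 7143 as we understand it. It only builds bytes: a real initiator must first log in and negotiate parameters over a TCP connection (port 3260 by default). The function names and the example values (LUN, block count, 512-byte blocks) are hypothetical.

```python
# Minimal sketch: encapsulating a SCSI CDB in an iSCSI SCSI Command PDU header.
# Layout follows our reading of RFC 7143; read10_cdb and
# iscsi_scsi_command_bhs are hypothetical helper names.
import struct


def read10_cdb(lba: int, blocks: int) -> bytes:
    """SCSI READ(10) CDB: opcode 0x28, 32-bit LBA, 16-bit block count."""
    # opcode, flags, LBA, group number, transfer length, control
    return struct.pack(">BBIBHB", 0x28, 0, lba, 0, blocks, 0)


def iscsi_scsi_command_bhs(cdb: bytes, lun: int, task_tag: int,
                           expected_len: int, cmd_sn: int,
                           exp_stat_sn: int) -> bytes:
    """48-byte BHS of an iSCSI SCSI Command PDU for a read command."""
    if len(cdb) > 16 or not 0 <= lun < 256:
        raise ValueError("sketch supports CDBs up to 16 bytes and LUN 0-255")
    opcode = 0x01                     # SCSI Command
    flags = 0x80 | 0x40 | 0x01        # Final, Read, task attribute SIMPLE
    lun_field = bytes([0, lun]) + bytes(6)  # single-level LUN addressing
    return struct.pack(
        ">BBHB3s8sIIII16s",
        opcode, flags, 0,             # opcode, flags, reserved
        0,                            # TotalAHSLength
        (0).to_bytes(3, "big"),       # DataSegmentLength (no immediate data)
        lun_field,
        task_tag, expected_len, cmd_sn, exp_stat_sn,
        cdb.ljust(16, b"\x00"),       # CDB padded to 16 bytes
    )


if __name__ == "__main__":
    cdb = read10_cdb(lba=2048, blocks=8)
    bhs = iscsi_scsi_command_bhs(cdb, lun=0, task_tag=1,
                                 expected_len=8 * 512, cmd_sn=1, exp_stat_sn=1)
    print(len(bhs), bhs.hex())
```

The SCSI command itself is unchanged inside the iSCSI header; TCP, IP, and Ethernet headers are then added by the network stack, which is where the extra overhead compared to SAS and FC comes from.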

- Performance. Performance depends on the underlying network bandwidth but is generally lower than that of SAS and Fibre Channel. iSCSI supports multipathing, jumbo frames, and other technologies that improve performance in Ethernet networks. You can use 10, 40, or even 100 Gbit/s Ethernet networks for storage connectivity. Carrying SCSI commands over TCP/IP adds overhead compared to SAS and FC storage systems, which reduces overall performance. The additional latency of iSCSI can also reduce the advantages of SSDs in a remote storage server. Encapsulation consumes additional processor resources and takes time.

- Flexibility. The iSCSI protocol itself does not limit the number of connected iSCSI targets. The maximum amount of storage you can connect using iSCSI depends on the capacity of the disks installed in the storage server, NAS, or SAN. It is technically possible to use a server or NAS (Network Attached Storage) with SAS and even SATA disks to configure iSCSI targets.

- Ease of use. Medium – knowledge of both storage and IP networking is required.

- Cost. Using the iSCSI protocol to access network storage allows you to save costs and is primarily used by small and medium businesses. It is technically possible to use inexpensive hardware, but note the limitations on the level of reliability and performance that can be achieved with such hardware.
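The performance bullet above mentions encapsulation overhead and jumbo frames. The minimal sketch below estimates how much of an Ethernet link's raw bandwidth is left for iSCSI data after Ethernet, IP, and TCP headers, comparing a standard 1500-byte MTU with a 9000-byte jumbo MTU. It is a back-of-the-envelope model under the assumptions stated in the comments; it ignores CPU cost, latency, retransmissions, and TCP options.

```python
# Minimal sketch: share of wire bandwidth left for iSCSI data per Ethernet frame.
# Assumptions: IPv4 (20-byte header), TCP without options (20 bytes), and
# Ethernet overhead on the wire of 38 bytes (preamble/SFD 8, header 14,
# FCS 4, inter-frame gap 12). The 48-byte iSCSI header is sent once per PDU,
# not once per frame, so it is ignored here.

ETH_WIRE_OVERHEAD = 8 + 14 + 4 + 12
IP_TCP_HEADERS = 20 + 20


def payload_efficiency(mtu: int) -> float:
    """Fraction of wire bytes that carry TCP payload for a full-size frame."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETH_WIRE_OVERHEAD)


def usable_mb_per_s(link_gbit: int, mtu: int) -> float:
    """Approximate TCP payload throughput of a link in MB/s."""
    return link_gbit * 1000 / 8 * payload_efficiency(mtu)


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: {payload_efficiency(mtu):.1%} efficient, "
              f"about {usable_mb_per_s(10, mtu):.0f} MB/s on 10 GbE")
```

With these assumptions, header overhead drops from about 5% of the wire bandwidth at MTU 1500 to under 1% at MTU 9000. Jumbo frames must be enabled end to end (initiator, switches, and target) to take effect.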

Unlike SAS and FC, iSCSI does not require any specific hardware. It works within existing Ethernet network infrastructure and can use software iSCSI adapters. This makes the technology easier to scale than the previous two and more affordable for small environments with limited IT budgets, since you don’t need any additional equipment. On the other hand, iSCSI requires a dedicated storage server with a suitable operating system (OS) and software configuration to act as the iSCSI target.

The table below maps the OSI layers to the components of the iSCSI stack and to their approximate Fibre Channel equivalents.

| OSI layers | iSCSI | Fibre Channel |
|---|---|---|
| 7 Application | – | – |
| 6 Presentation | SCSI command set | SCSI command set |
| 5 Session | iSCSI | FC-4/FC-3 |
| 4 Transport | TCP | FC-2 |
| 3 Network | IP | FC-2 |
| 2 Data link | Ethernet MAC | FC-1 |
| 1 Physical | Ethernet (physical) | FC-0 |

Pro Tip: If you use Ethernet networks with the FCoE or iSCSI protocols to access network storage, use dedicated storage networks rather than your production networks, VM networks, etc. This helps you avoid performance degradation, improve security, and simplify troubleshooting.

What is vSAN?

vSAN is storage virtualization software for VMware environments. It is built into the ESXi hypervisor and is used to build a hyper-converged virtual infrastructure with multiple ESXi hosts. VMware introduced vSAN, its own approach to creating shared storage, in vSphere 5.5 and has improved it significantly since then, for example, in vSphere 7.0.3. vSAN uses local server resources and existing Ethernet networking, without additional storage server hardware.

This option looks attractive since it does not need any specific hardware and can be configured via the GUI in VMware vSphere Client. Moreover, it does not rely on the physical location of your hosts and storage disks.

The drawback is that creating a VMware vSAN cluster requires an additional vSAN license, which can be pricey with a large number of hosts. vSAN performance depends on the speed of the network and of the disks installed in the ESXi hosts.

vSAN is a good choice for infrastructures of any size and is especially handy if you cannot install a dedicated storage server. However, it may become a costly solution for larger datacenters. A vSphere environment that uses vSAN is an example of hyper-converged infrastructure (HCI).

Conclusion

The winner in this comparison depends on your requirements. Select a storage solution based on your priorities for performance, price, reliability, and ease of use.

VMware vSphere supports FC, SAS, and iSCSI storage. In addition, VMware provides vSAN, which pools the direct-attached storage of ESXi hosts into SAN-like shared storage for VMs.

Before starting a physical-to-virtual migration project, conduct a feasibility study to determine the IOPS requirements of the servers you plan to virtualize. Based on the results, you can decide which storage approach works best for you. Also, don’t forget to back up your vSphere environment using a reliable backup solution like NAKIVO Backup & Replication.
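As a starting point for such a feasibility study, the minimal sketch below turns measured per-server IOPS into a rough backend IOPS target for shared storage. The RAID write penalties (RAID 10 = 2, RAID 5 = 4, RAID 6 = 6) and the 30% headroom are common rules of thumb, not figures from this article, and the server names and numbers in the usage example are hypothetical.

```python
# Minimal sketch: estimate backend IOPS for servers you plan to virtualize.
# Assumptions (rules of thumb, not vendor guidance): measured peak front-end
# IOPS and read ratio per server, a RAID write penalty, and extra headroom.
from dataclasses import dataclass

RAID_WRITE_PENALTY = {"RAID10": 2, "RAID5": 4, "RAID6": 6}


@dataclass
class ServerLoad:
    name: str
    peak_iops: int      # measured front-end IOPS at peak
    read_ratio: float   # share of reads, 0.0-1.0


def backend_iops(servers: list[ServerLoad], raid: str,
                 headroom: float = 0.3) -> int:
    """Backend IOPS the array must deliver, including growth headroom."""
    penalty = RAID_WRITE_PENALTY[raid]
    total = 0.0
    for s in servers:
        reads = s.peak_iops * s.read_ratio
        writes = s.peak_iops * (1 - s.read_ratio)
        total += reads + writes * penalty  # each write costs `penalty` disk I/Os
    return round(total * (1 + headroom))


if __name__ == "__main__":
    fleet = [ServerLoad("db01", 2000, 0.7), ServerLoad("files01", 500, 0.9)]
    print(backend_iops(fleet, "RAID5"))
```

For the hypothetical fleet above, RAID 5 turns 2,500 front-end IOPS into about 4,450 backend IOPS, or roughly 5,800 with 30% headroom. Comparing that figure with what each storage option can deliver helps narrow the choice between SAS, FC, iSCSI, and vSAN.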