NTFS vs ReFS: Which One to Choose?

Hyper-V environments can’t function properly without reliable storage, which has a significant impact on virtual machine (VM) performance. After all, the main purpose of storage is to save and retain data in an appropriate format. Microsoft Hyper-V provides multiple storage options, which differ in a number of ways.

However, without a file system, it would be impossible to store, manage, and access the data kept in storage. In this blog post, we discuss the features of the Resilient File System (ReFS) and the New Technology File System (NTFS) in Hyper-V environments, and what differentiates them.

What Is NTFS?

NTFS is the Microsoft file system used by default in Windows and Windows Server. It provides a number of features for managing files on disk and protecting against disk failures. These include access control lists (ACLs) for security, efficient disk space utilization, improved metadata, file system journaling, encryption, sparse files, and disk quotas. Moreover, NTFS works with Cluster Shared Volumes in the Hyper-V role, which lets multiple nodes in a failover cluster access a shared disk containing an NTFS volume.

What Is ReFS?

ReFS, also known as Protogon, is a file system developed by Microsoft and introduced with Windows Server 2012. The idea behind ReFS was to build an advanced file system that can safely store larger amounts of data. For this purpose, the following features were introduced: built-in resilience, automatic integrity checking, data scrubbing, and prevention of data degradation.

Moreover, ReFS integrates with Storage Spaces, a storage virtualization layer used for data mirroring and striping, as well as storage pool sharing. When used with Storage Spaces, ReFS can detect corrupted data on a disk and automatically repair it from an alternate copy. In short, ReFS is designed to be resilient against data corruption and to scale on demand in large environments.

ReFS and NTFS Feature Comparison

As you can see, both file systems include various features, which differ in the way they protect storage, enhance its performance, and ensure data integrity. The following sections cover what each file system is capable of.

File System Reliability

Reliability is the first point of comparison between ReFS and NTFS. If a system failure occurs, NTFS uses checkpoints and log files to recover the file system to its previous consistent state. If a bad-sector error is detected, NTFS marks the damaged cluster as bad, stops using it, and assigns a new cluster for the data. With the self-healing feature of NTFS, the file system can be repaired without taking the volume offline: NTFS periodically scans the volume to find transient corruption issues and fix them. NTFS is also a journaling file system, meaning that it logs changes before they are applied to the disk. This allows you to quickly roll back to the previous consistent state after a system crash or failed data migration.

ReFS, in turn, includes a number of features that ensure the reliability of data storage. As mentioned above, ReFS can integrate with Storage Spaces, which enables automatic repair without causing volume downtime. Moreover, ReFS applies a copy-on-write technique, meaning that changes to a file are written to a newly allocated block on the disk rather than over the existing data. Both ReFS metadata and file data can be protected by checksums, which make it possible to detect data corruption and preserve data integrity. With data scrubbing, ReFS periodically scans the volume to verify the checksums, identify any corruption, and repair the damaged data.
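To make the checksum-and-scrub idea concrete, here is a minimal Python sketch. It is not how ReFS is implemented: the CRC32 checksum, the in-memory block lists, and the `scrub` function are our own illustrative assumptions. It only shows the principle of verifying each block against a stored checksum and repairing it from a healthy mirror copy.

```python
import zlib


def checksum(block: bytes) -> int:
    """Return a CRC32 checksum for a block (a stand-in for the real algorithm)."""
    return zlib.crc32(block)


def scrub(primary: list, mirror: list, checksums: list) -> list:
    """Verify every block against its stored checksum and repair from the mirror.

    Returns the indices of the blocks that were repaired.
    """
    repaired = []
    for i, expected in enumerate(checksums):
        if checksum(primary[i]) == expected:
            continue  # block is intact
        if checksum(mirror[i]) != expected:
            raise IOError(f"block {i} is corrupt in both copies")
        primary[i] = mirror[i]  # overwrite the bad copy with the good one
        repaired.append(i)
    return repaired


# Usage: corrupt one block, then let the scrubber find and fix it.
blocks = [b"alpha", b"beta", b"gamma"]
sums = [checksum(b) for b in blocks]
primary, mirror = list(blocks), list(blocks)
primary[1] = b"bxta"  # simulated silent corruption
repaired = scrub(primary, mirror, sums)
```

In this toy run, only block 1 fails verification, so it is the only block rewritten from the mirror.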

ReFS vs NTFS Performance

Both NTFS and ReFS have specific features which allow them to significantly improve the performance of the file system.

Transactional NTFS, introduced in Windows Server 2008, allows file operations to be grouped into atomic transactions. This means that you can set up a transaction that applies multiple changes to files in the system, with the guarantee that either all of the operations succeed or none of them does. In case of a system failure, committed changes are written to the disk and incomplete transactional work is rolled back. Thus, transactions protect you from half-applied changes and preserve the work that has already been committed.
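The all-or-nothing behavior can be illustrated with a short Python sketch. Note that this is an application-level imitation, not the Transactional NTFS API: the `apply_atomically` function is hypothetical, it keeps the original contents in memory, and it does not survive a crash the way a logged file system transaction would.

```python
import os
import tempfile


def apply_atomically(changes: dict) -> None:
    """Write every file in `changes` (path -> new content), or none of them.

    Originals are kept in memory so that a failure midway can be rolled back.
    """
    backups = {}
    try:
        for path, content in changes.items():
            # Remember the previous state (None means the file did not exist).
            if os.path.exists(path):
                with open(path, "rb") as f:
                    backups[path] = f.read()
            else:
                backups[path] = None
            with open(path, "wb") as f:
                f.write(content)
    except Exception:
        # Roll back every file touched so far, then re-raise the error.
        for path, old in backups.items():
            if old is None:
                if os.path.exists(path):
                    os.remove(path)
            else:
                with open(path, "wb") as f:
                    f.write(old)
        raise


# Usage: the second write fails, so the first one is undone as well.
workdir = tempfile.mkdtemp()
a = os.path.join(workdir, "a.txt")
bad = os.path.join(workdir, "missing_dir", "b.txt")  # directory does not exist
with open(a, "wb") as f:
    f.write(b"old")

try:
    apply_atomically({a: b"new", bad: b"data"})
except OSError:
    pass  # the failed transaction leaves a.txt untouched

with open(a, "rb") as f:
    content_after_failure = f.read()
```

After the failed call, `a.txt` still holds its original content, which is the "none of them" half of the guarantee.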

Other NTFS options that help you manage capacity and performance include disk quotas, file compression, and resizing. With disk quotas, the administrator can allocate a certain amount of disk space to each user and see when a limit has been exceeded. Moreover, NTFS can compress files using built-in compression algorithms, thus freeing up storage capacity. Resizing allows you to extend an NTFS volume into unallocated disk space or shrink it to release space.

As mentioned above, ReFS can be integrated with Windows Storage Spaces, which enables real-time tier optimization. A ReFS volume is divided into two logical tiers, namely the performance tier and the capacity tier. Each tier can have its own drive and resiliency types, so that each can be optimized for its role. Write operations are executed in the performance tier, and large chunks of data stored there are moved to the capacity tier in real time.

The following features were introduced specifically to accelerate the performance of Hyper-V VMs. With the sparse VDL (Valid Data Length) feature, ReFS can rapidly zero files, which allows you to create fixed-size virtual hard disk (VHD) files in a matter of seconds. Another feature is block cloning, which is applied when working with dynamic workloads, such as VM cloning and checkpoint-merging operations. Block cloning is performed as a metadata operation rather than by copying the file data itself. Thus, copy operations are accelerated and the disk overhead is reduced.
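The idea of cloning through metadata can be sketched in a few lines of Python. This toy `Volume` class is purely illustrative and makes its own assumptions (files as lists of block IDs, per-block reference counts); it is not the ReFS on-disk format or API. It shows why a clone is nearly free and why a later write touches only the modified block.

```python
class Volume:
    """Toy volume: files are lists of block IDs, blocks are reference-counted."""

    def __init__(self):
        self.blocks = {}     # block id -> bytes
        self.refcount = {}   # block id -> number of file references
        self.files = {}      # file name -> list of block ids
        self._next_id = 0

    def _alloc(self, data: bytes) -> int:
        block_id = self._next_id
        self._next_id += 1
        self.blocks[block_id] = data
        self.refcount[block_id] = 1
        return block_id

    def write_file(self, name: str, chunks: list) -> None:
        self.files[name] = [self._alloc(c) for c in chunks]

    def clone_file(self, src: str, dst: str) -> None:
        """Clone by copying block references only; no file data is copied."""
        self.files[dst] = list(self.files[src])
        for block_id in self.files[dst]:
            self.refcount[block_id] += 1

    def write_block(self, name: str, index: int, data: bytes) -> None:
        """Copy-on-write: a shared block is never modified in place."""
        old = self.files[name][index]
        if self.refcount[old] > 1:
            self.refcount[old] -= 1
            self.files[name][index] = self._alloc(data)
        else:
            self.blocks[old] = data

    def read_file(self, name: str) -> bytes:
        return b"".join(self.blocks[b] for b in self.files[name])


# Usage: clone a two-block "disk", then change one block in the clone.
vol = Volume()
vol.write_file("base.vhdx", [b"AAAA", b"BBBB"])
vol.clone_file("base.vhdx", "clone.vhdx")
blocks_after_clone = len(vol.blocks)        # no new blocks were allocated
vol.write_block("clone.vhdx", 1, b"CCCC")   # only the touched block diverges
```

The clone itself allocates no blocks; the single write afterwards adds exactly one, and the base file remains unchanged.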

Scalability

In terms of scalability, ReFS can support far larger data volumes than NTFS. NTFS theoretically provides a maximum capacity of 16 exabytes, while ReFS supports up to 262,144 exabytes. Thus, ReFS leaves considerably more headroom for growth in large environments.
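To put these theoretical limits in perspective, the short calculation below expresses both as powers of two. It assumes the figures are binary exabytes (2^60 bytes each), which is our reading of the numbers rather than something stated above.

```python
EXABYTE = 2 ** 60  # assumption: binary exabyte (EiB)

ntfs_max_bytes = 16 * EXABYTE
refs_max_bytes = 262_144 * EXABYTE

# For an exact power of two, bit_length() - 1 is the exponent.
ntfs_bits = ntfs_max_bytes.bit_length() - 1
refs_bits = refs_max_bytes.bit_length() - 1
ratio = refs_max_bytes // ntfs_max_bytes

print(f"NTFS: 2^{ntfs_bits} bytes, ReFS: 2^{refs_bits} bytes, ratio: {ratio}x")
# prints: NTFS: 2^64 bytes, ReFS: 2^78 bytes, ratio: 16384x
```

Under that assumption, the theoretical ReFS limit is 16,384 times larger than the NTFS one.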

It is also worth noting that the maximum file name length in both NTFS and ReFS is 255 characters, whereas the maximum path name length is 32,768 characters. However, ReFS supports long file names and file paths by default, whereas with NTFS you need to manually lift the legacy 260-character path limit.

Functionality comparison

Because NTFS is the predecessor of ReFS, the latter has borrowed most of its functionality from NTFS. The table below shows how the two file systems differ in their features and which of them has the more comprehensive toolset.

| Feature | NTFS | ReFS |
| --- | --- | --- |
| BitLocker encryption | + | + |
| Data Deduplication | + | + |
| Cluster Shared Volume (CSV) support | + | + |
| Soft links | + | + |
| Failover cluster support | + | + |
| Access-control lists | + | + |
| USN journal | + | + |
| Changes notifications | + | + |
| Junction points | + | + |
| Mount points | + | + |
| Reparse points | + | + |
| Named streams | + | + |
| Volume snapshots | + | + |
| File IDs | + | + |
| Oplocks | + | + |
| Sparse files | + | + |
| Thin Provisioning | + | + (Storage Spaces only) |
| Trim/Unmap | + | + (Storage Spaces only) |
| Block clone | | + |
| Sparse VDL | | + |
| Mirror-accelerated parity | | + |
| Offloaded Data Transfer (ODX) | + | |
| File system compression | + | |
| File system encryption | + | |
| Transactions | + | |
| Hard links | + | |
| Object IDs | + | |
| Short names | + | |
| Extended attributes | + | |
| Disk quotas | + | |
| Bootable | + | |
| Page file support | + | |
| Supported on removable media | + | |

Why ReFS Can’t Replace NTFS Yet

As you can see from the table above, the ReFS vs NTFS question is still relevant because ReFS remains limited in its functionality compared to NTFS. Crucial NTFS features such as data compression, encryption, transactions, hard links, disk quotas, and extended attributes are not present in ReFS. Another limitation is that, unlike with NTFS, you can’t boot Windows from a ReFS volume.

This limited functionality can be explained by the purpose for which ReFS was built: to protect against data corruption and provide higher scalability. However, ReFS shouldn’t be dismissed as a less capable file system, because it offers alternatives to some of the missing features. For example, ReFS doesn’t include the Encrypting File System (EFS) feature used in NTFS, which enables file-system-level encryption. Instead, ReFS supports BitLocker encryption, which provides full-disk encryption.

Thus, the choice between ReFS and NTFS primarily depends on the specific task at hand. Currently, NTFS is the preferable option when it comes to storing less sensitive data and having more granular control over files in the system. On the other hand, ReFS can attract users who need to manage data in large-scale environments and want to ensure the integrity of their data in case of file corruption.

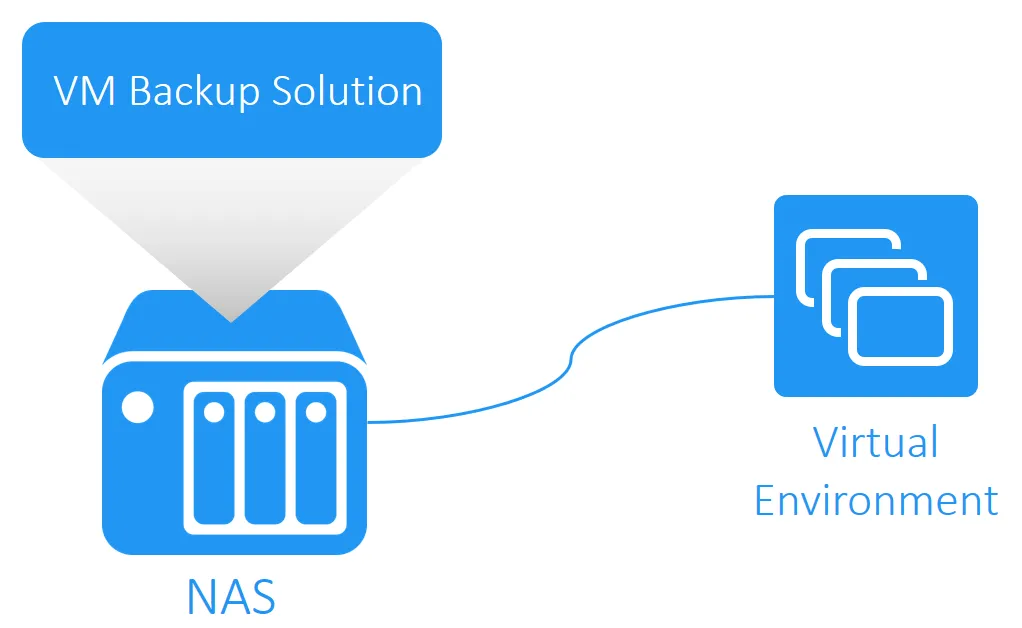

Storage Optimization Features in NAKIVO Backup & Replication

When it comes to protecting your virtual environment, installing third-party data protection software is an effective solution. With it, you can ensure that your business stays operational and ready to serve customers, even in case of an unexpected disaster. NAKIVO Backup & Replication is a reliable and cost-effective data protection solution which, in addition to its other benefits, allows you to use storage space in the most efficient way. To achieve storage optimization, NAKIVO Backup & Replication includes a number of advanced features described below.

Exclusion of swap files and partitions

A swap file on Windows and a swap partition on Linux provide additional virtual memory and essentially function as an extension of physical RAM. The downside is that swap files tend to grow over time, which can eventually affect the performance of your backup and replication jobs. To avoid cluttering storage with this data, NAKIVO Backup & Replication can automatically exclude swap files and partitions from VM backups and replicas. This way, you can reduce the amount of storage space consumed and increase the speed of your backup and replication jobs.

Deduplication and compression

NAKIVO Backup & Replication applies deduplication and compression techniques to optimize the way that storage space is used by backups. Deduplication ensures that all duplicate copies of repeating data blocks are deleted from VM backups. This way only unique data blocks are saved to the backup repository. Moreover, NAKIVO Backup & Replication can compress each data block, thus reducing the data size. Both deduplication and data compression are enabled by default and run automatically.
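The general principle behind block-level deduplication and compression can be sketched as follows. This is a generic illustration, not NAKIVO Backup & Replication’s actual implementation: the `DedupStore` class, the SHA-256 fingerprints, the 4 KB block size, and zlib compression are all our own assumptions.

```python
import hashlib
import zlib


class DedupStore:
    """Toy content-addressed store: each unique block is compressed and kept once."""

    def __init__(self):
        self.blocks = {}  # SHA-256 hex digest -> compressed block

    def put(self, block: bytes) -> str:
        digest = hashlib.sha256(block).hexdigest()
        if digest not in self.blocks:  # duplicate blocks are not stored again
            self.blocks[digest] = zlib.compress(block)
        return digest

    def get(self, digest: str) -> bytes:
        return zlib.decompress(self.blocks[digest])


def backup(data: bytes, store: DedupStore, block_size: int = 4096) -> list:
    """Split data into fixed-size blocks and return the list of block digests."""
    return [store.put(data[i:i + block_size])
            for i in range(0, len(data), block_size)]


def restore(recipe: list, store: DedupStore) -> bytes:
    """Rebuild the original data from the ordered list of block digests."""
    return b"".join(store.get(d) for d in recipe)


# Usage: four blocks are backed up, but only two of them are unique.
store = DedupStore()
disk = b"A" * 4096 * 3 + b"B" * 4096
recipe = backup(disk, store)
stored_bytes = sum(len(b) for b in store.blocks.values())
```

In this example the repository keeps two compressed blocks instead of four raw ones, while the recipe of digests is still enough to restore the data in full.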

Incremental jobs

Backup and replication jobs in NAKIVO Backup & Replication are incremental, meaning that after the initial full backup or replication job, all subsequent job runs transfer only the changed data blocks. For this purpose, VMware Changed Block Tracking (CBT) or Hyper-V Resilient Change Tracking (RCT) is used to identify which data has changed since the last backup or replication. Running incremental jobs reduces the time spent on backup and replication and decreases the network load.
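The sketch below illustrates what "transferring only the changed blocks" means. CBT and RCT track changes at the hypervisor level as they happen; since that cannot be reproduced in a few lines, this example substitutes a simpler technique and finds changed blocks by comparing hashes of two disk images. The function names, the tiny 4-byte block size, and the assumption that images are block-aligned and never shrink are ours, for illustration only.

```python
import hashlib


def block_hashes(data: bytes, block_size: int = 4) -> list:
    """Return one SHA-256 digest per fixed-size block."""
    return [hashlib.sha256(data[i:i + block_size]).digest()
            for i in range(0, len(data), block_size)]


def changed_blocks(previous: bytes, current: bytes, block_size: int = 4) -> dict:
    """Return {block index: new block data} for blocks that differ or are new."""
    old = block_hashes(previous, block_size)
    new = block_hashes(current, block_size)
    delta = {}
    for i, h in enumerate(new):
        if i >= len(old) or old[i] != h:
            delta[i] = current[i * block_size:(i + 1) * block_size]
    return delta


def apply_increment(base: bytes, delta: dict, block_size: int = 4) -> bytes:
    """Rebuild the current image from the previous one plus the increment."""
    blocks = [base[i:i + block_size] for i in range(0, len(base), block_size)]
    for index in sorted(delta):
        if index < len(blocks):
            blocks[index] = delta[index]   # overwrite a changed block
        else:
            blocks.append(delta[index])    # append a newly written block
    return b"".join(blocks)


# Usage: one block changed and one block was added since the full backup.
full = b"AAAABBBBCCCC"
later = b"AAAAXXXXCCCCDDDD"
delta = changed_blocks(full, later)
```

Only two of the four blocks end up in the increment, yet the full backup plus the increment reproduces the later image exactly.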

Full synthetic data storage

NAKIVO Backup & Replication applies the synthetic approach to creating and storing VM backups. In synthetic mode, the product identifies the changed data blocks within a VM and sends them to a backup repository. At the end of a backup job run, NAKIVO Backup & Replication creates a recovery point, which serves as a set of references to the data blocks needed to restore the VM as of a particular moment in time. Synthetic backups ensure that your backups are fast and safe, that storage space is used efficiently, and that the VM can be rapidly recovered to a particular moment in time.

Deduplication appliances support

A data deduplication appliance is a special type of hardware which eliminates duplicate copies of repeated data. NAKIVO Backup & Replication supports various deduplication appliances, such as NEC HYDRAstor, Data Domain, Quantum DXi, and HP StoreOnce. Any of these appliances can be used as a primary or a secondary backup target. Unlike the forever-incremental backup, which stores only the incremental data blocks, the new backup repository type stores VM backup chains, which include periodic full backups and the incremental backup files created between these full backups.

The support for deduplication appliances enables you to back up VMs 53 times faster than with the traditional backup repository. Moreover, the storage space and backup operations are more easily managed with the deduplication appliance in place.

Conclusion

Microsoft Hyper-V is efficient virtualization software whose functionality Microsoft is constantly improving. Because data storage requirements have changed drastically over time, ReFS has been introduced as a file system capable of overcoming the issues present in NTFS.

Compared to NTFS, the main purpose of ReFS is to improve the resilience of the system to data corruption and ensure high scalability. However, ReFS is still a relatively new file system and its functionality isn’t as developed as that of NTFS. Thus, when choosing between the two options, consider the scale of your business operations, the needs of your virtual environment, and the sensitivity of your data.

To ensure that your virtual environment is securely protected, install NAKIVO Backup & Replication, which includes a set of advanced features for improving VM backup and replication jobs and refining DR strategies. With NAKIVO Backup & Replication, you can not only save time and money, but also reduce your storage space requirements.