Understanding Virtual Volumes (vVols) in VMware vSphere 6.7 and 7.0

Storage is a crucial component of each computer, server, and datacenter, including virtual environments. Proper storage configuration ensures optimal performance and reliability. When a data center grows and operates with large amounts of data, automation and scalability become a necessity. VMware offers the vVols feature, which changes the traditional concept of storing data on shared storage. This blog post covers VMware vVols (VMware Virtual Volumes), including the use cases and the working principle of this feature.

Backstory

VMware vSphere is used to virtualize IT infrastructures and build completely software-defined virtual datacenters, where an administrator can configure all components in VMware vSphere abstracting from the hardware.

When VMware released the first versions of ESX and VMware Workstation, these hypervisors used software emulation to run virtual machines. As virtualization grew in popularity, VMware convinced partners and other vendors that virtualization is essential in the modern world. As a result, virtualization features were implemented into Intel and AMD processors as Intel VT-x and AMD-V instruction sets. Compared with virtualization by hypervisor software only, hardware virtualization supported on the CPU level improved virtual machine performance. ESXi clusters let you abstract not only from the CPU but also from the underlying ESXi hosts running VMs.

The popularity of virtual machines continued to grow. The next step was network virtualization since using traditional hardware network devices in complex virtual environments with a large number of VMs was inconvenient. Now we can use VMware virtual switches (a standard vSwitch and distributed vSwitch operating on the VMKernel level) to connect VM virtual network adapters to different networks and devices. VMware also developed NSX, a dedicated software-defined network platform that can be integrated with VMware vSphere. Then vendors decided that a virtual switch can be implemented on the hardware level in network adapters, and the SR-IOV technology was born. When VLAN capabilities to isolate networks were no longer enough, especially for infrastructure-as-a-service (IaaS) providers, vendors developed the VXLAN technology that requires hardware support. The advantage of network virtualization is that fewer physical cable connections are used between network devices, and the network is centralized.

The next stage is the virtualization of storage. VMware developed VMware vSAN that allows you to build a hyper-converged virtual infrastructure using software-defined storage and abstract physical disk drives. VMware didn’t stop at that and developed vVols to take software-defined storage to the next level. The VMware vVols feature was introduced with VMware vSphere 6.0.

Reasons to Use vVols

When traditional storage arrays were created years ago, storage vendors and software vendors decided to present storage to hosts as LUNs (a LUN, or Logical Unit Number, identifies a logical unit of storage), which are mapped much as you would map a partition of a hard disk drive. The initial idea was that an operating system usually uses a LUN as a single disk or as a shared disk for a cluster. When VMware developed its own VMware vSphere product, the company adopted LUNs to connect shared storage to ESXi hosts over a network and to format each LUN with VMFS, a cluster file system developed by VMware. VMFS is a reliable file system respected by users. Traditionally, a system administrator (a storage administrator) creates a LUN on the needed storage array, mounts this LUN to an ESXi host, and creates a datastore on the attached LUN by formatting it with the VMFS file system.

Different storage types

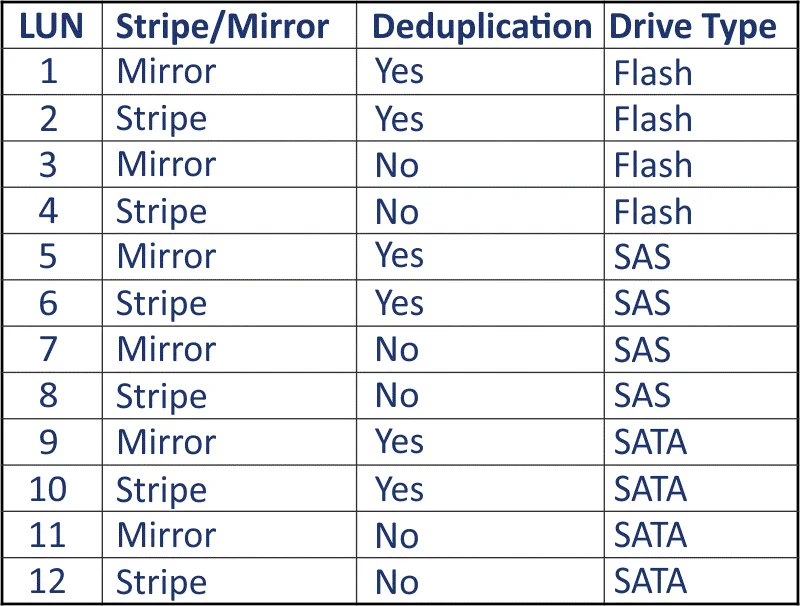

When a virtual infrastructure grows, and there are different types of disks, RAID, and deduplication configuration on storage arrays, storage provisioning may be required more frequently. Different virtual machines require different levels of service according to different policies. Imagine that disk arrays on your storage array devices have the following characteristics: redundancy (RAID 1/RAID 0), interface/technology of disks (Flash/SSD, SAS HDD, SATA HDD), deduplication (enabled/disabled). In this case, we have 12 possible combinations of storage configurations.
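
The count of 12 comes from multiplying the number of options for each characteristic (2 x 3 x 2). As a minimal sketch, the combinations can be enumerated in Python; the variable names are illustrative and not part of any VMware tool:

```python
from itertools import product

# Characteristics taken from the example above.
redundancy = ["RAID 1", "RAID 0"]
disk_type = ["Flash/SSD", "SAS HDD", "SATA HDD"]
deduplication = ["enabled", "disabled"]

# Each storage configuration is one choice from every characteristic.
storage_classes = list(product(redundancy, disk_type, deduplication))

for raid, disk, dedup in storage_classes:
    print(f"{disk}, {raid}, deduplication {dedup}")

print(len(storage_classes))  # 2 * 3 * 2 = 12
```

Adding one more characteristic with two options would already double the number of LUN/datastore types to maintain.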

When using the traditional approach, we should create a LUN/datastore for each storage type to satisfy possible capabilities. However, one storage type may be more frequently used to provision VM storage than another. It is difficult to remember which VM must use this or that storage type in a large virtual infrastructure when you have hundreds or thousands of VMs. Moreover, when the fastest and most reliable storage is full, there may be issues because normally, everybody wants to store a VM on the best storage. Human error is also a potential issue. Storage vMotion and Storage DRS must then be used to migrate VM files to datastores of the available storage classes that still have free space. VMware vVols can make life easier in this case.

VMware vVols and Storage Policies

VMware vVols is an innovative technology that delegates some storage-specific operations to the storage hardware side, such as SAN (Storage Area Network) and NAS (Network Attached Storage) devices. For this to work, vendors of storage array systems must implement support for the vSphere APIs for Storage Awareness (VASA) in their storage devices. As with the older vStorage APIs for Array Integration (VAAI), storage-related tasks are offloaded from ESXi servers to the storage array hardware, which increases performance.

A system administrator should define a set of policies to be used for different VMs when creating the VMs depending on requirements. For example, you can define different policies and set the names for them such as Top class, Medium class, and Low class:

Top class – Flash/SSD, RAID1, Deduplication enabled

Medium class – SAS HDD, RAID1, Deduplication enabled

Low class – SATA HDD, RAID0, Deduplication disabled.

You can also create more policies to fit all possible combinations (12 combinations in our example) if you have the appropriate storage hardware configuration.

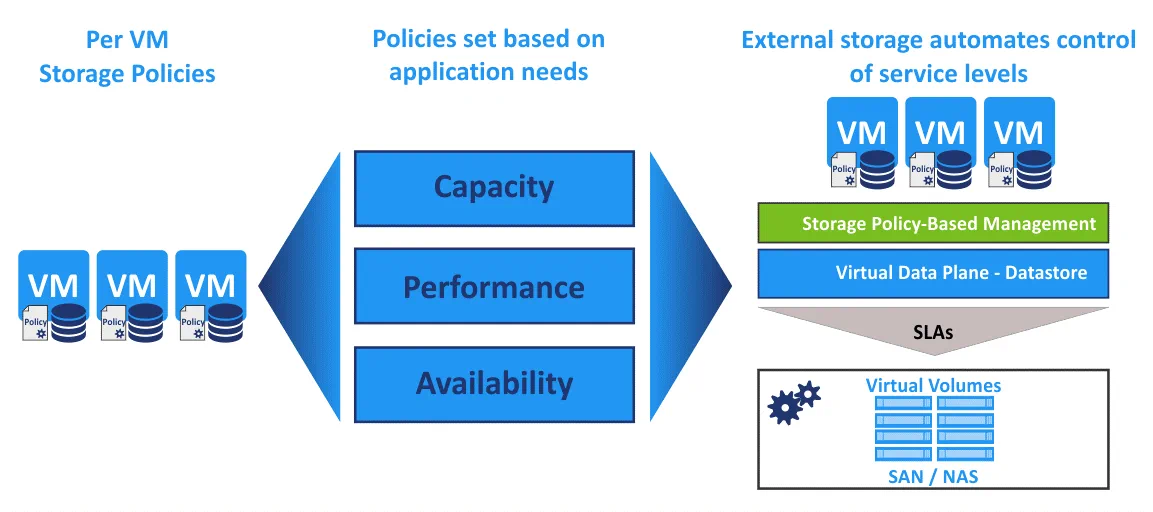
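
The idea of matching a policy against what the storage side offers can be sketched in a few lines of Python. This is only an illustration of the concept, not the vSphere Storage Policy Based Management API: the `Capabilities` class, the container names, and the free-space figures are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Capabilities:
    disk_type: str
    raid: str
    deduplication: bool


# Policy names and capabilities from the example above.
POLICIES = {
    "Top class": Capabilities("Flash/SSD", "RAID1", True),
    "Medium class": Capabilities("SAS HDD", "RAID1", True),
    "Low class": Capabilities("SATA HDD", "RAID0", False),
}

# Hypothetical inventory: container name -> (advertised capabilities, free GB).
CONTAINERS = {
    "array-a-flash": (Capabilities("Flash/SSD", "RAID1", True), 500),
    "array-b-sas": (Capabilities("SAS HDD", "RAID1", True), 4000),
    "array-c-sata": (Capabilities("SATA HDD", "RAID0", False), 12000),
}


def find_compliant_container(policy_name: str, size_gb: int) -> Optional[str]:
    """Return the first container that satisfies the policy and has room."""
    wanted = POLICIES[policy_name]
    for name, (caps, free_gb) in CONTAINERS.items():
        if caps == wanted and free_gb >= size_gb:
            return name
    return None


print(find_compliant_container("Top class", 100))   # array-a-flash
print(find_compliant_container("Top class", 1000))  # None
```

The second call returns `None` because the only flash-backed container in this made-up inventory has less than 1000 GB free, which is the situation where an administrator would otherwise have to hunt for space manually.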

When creating a new VM, the administrator should select a policy for VM storage. A policy is applied for VM objects using vVols on a per-VM basis. Then, instead of placing files on a shared LUN, a separate virtual volume is created on the appropriate storage array for each VM object, such as a virtual disk, swap file, configuration file, virtual memory file, or other specific VM files. Storage features and configuration are abstracted from the storage consumer.

Policy-based management saves time for vSphere administrators in large virtual environments because admins don’t have to search manually for a suitable storage target for a VM among available storage arrays with free space. VMware vVol storage policies are managed at the level of individual VM virtual disks.

LUNs and Replication

A VMFS datastore is created on a single LUN. Usually, there are hundreds of VMFS datastores created over provisioned LUNs in large virtual infrastructures. Storage hardware is not aware of the virtual machine files stored on those LUNs. If you use storage-based synchronous replication on the hardware level of storage array systems, storage hardware performs data replication of the entire LUN with all VMs stored on this LUN, and, in this case, you cannot replicate only one VM.
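
To make the granularity problem concrete, here is a small Python sketch. The LUN names, VM names, and the `replicated_with_lun` helper are hypothetical and only model the behavior described above:

```python
from typing import List

# Hypothetical layout: which VMs live on which LUN-backed VMFS datastore.
LUN_LAYOUT = {
    "lun-01": ["web-01", "web-02", "db-01"],
    "lun-02": ["app-01", "app-02"],
}


def replicated_with_lun(vm_name: str) -> List[str]:
    """Return every VM that is copied when the LUN holding vm_name is replicated."""
    for vms in LUN_LAYOUT.values():
        if vm_name in vms:
            return list(vms)
    raise KeyError(f"{vm_name} is not on any known LUN")


# Protecting only db-01 still replicates its neighbors on the same LUN.
print(replicated_with_lun("db-01"))  # ['web-01', 'web-02', 'db-01']
```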

Creating one LUN per VM is a bad idea as it complicates management of VMFS volumes. VMware Virtual Volumes (vVols) are intended to resolve these issues, make the management of virtual machine storage more granular, and avoid using the LUN > VMFS volume > Datastore scheme to provision storage for a VM.

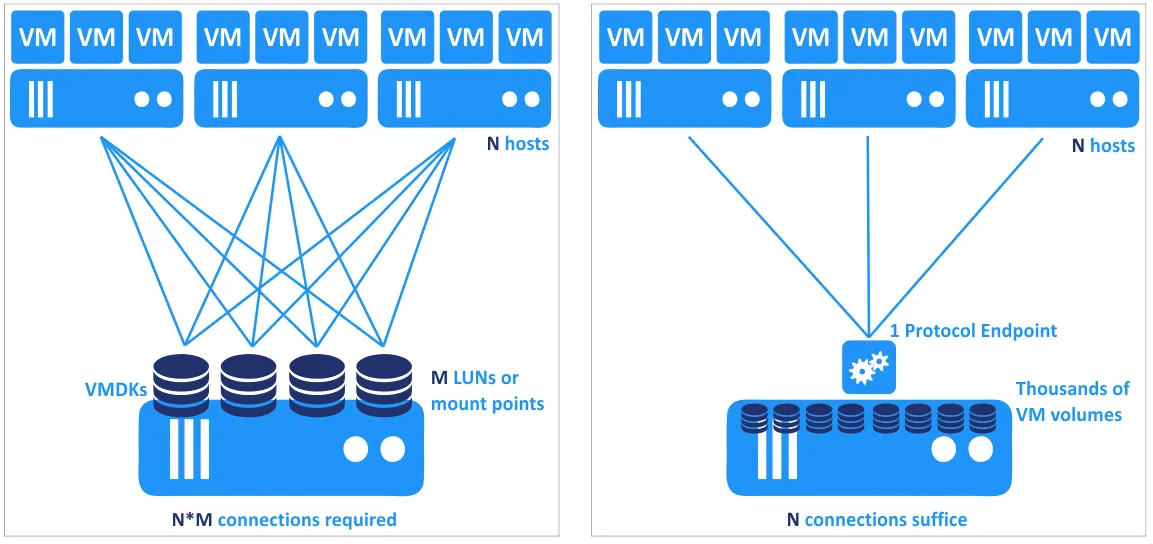

Connections and Loads

If you use N ESXi hosts and M LUNs on a storage array, then NxM network connections are used for communication between VMs and datastores when using traditional storage provisioning for VMs. If there are many thin disks (Thin Provisioned disks) on datastores, for example, in virtual desktop infrastructures (VDI), the storage hardware load increases. Expanding a thin virtual disk and adding new blocks on this disk affects the performance of virtual disks of other VMs located on the same datastore. This is another issue that can be resolved by using vVols.

More details are explained below in the section about protocol endpoint.

Advantages of Using vVols

Here’s a list of some of the advantages of using VMware vVols:

- Simplified storage management for large virtual environments

- Flexible and granular storage management on the VM level

- Optimal resource utilization, dynamic adjustment in real-time

- Avoidance of overprovisioning

- Policy-based automated and centralized storage provisioning

- No need for pre-configured LUNs on the storage side

- Offload operations such as snapshotting, cloning, data encryption, Storage DRS from ESXi hosts to storage hardware

- Ability to better meet requirements for VM storage

- Device-centric management is changed to VM-centric

The Working Principle

The concept of VMware vVols is that a VM and its virtual disks are the basic units of storage management, unlike the traditional approach, where the unit is a LUN holding an entire datastore with the files of multiple VMs. Organizing data using VMware vVols is convenient for storage arrays in large data centers with many VMs.

VMware vVols is the low-level storage for virtual machines that supports operations on the storage array level, similarly to traditional LUNs used to create datastores. Instead of relying on the traditional VMFS file system, the storage array itself defines how data for VMs is organized and accessed.

Another feature of vVols is that there is no file system for VM disks, unlike VMFS datastores on LUNs where VMDK virtual disk files are stored. A file system is created in a guest operating system as if you are attaching a new empty hard disk to be used by the operating system.

The VMFS file system is created on VM configuration vVols, where VMX files are stored. ATS (Atomic Test & Set) operations are supported in this case. ATS is a set of commands to manage file system lock on storage array devices.

New VMware virtual volumes (vVols) are automatically created on a storage array when you create a new VM, create a VM snapshot, clone a VM, or replicate a VM.

Multiple vVols are created when you create a new VM:

- 1 configuration vVol that is used to store a VMX configuration file of a VM

- 1 vVol is created for each virtual disk

- 1 vVol is created for a swap file (partition) if required

- 1 vVol is created for each disk snapshot and memory snapshot

You can consider VMware vVols as encapsulations of virtual disks and the other VM files mentioned above.
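
The list above translates into simple arithmetic. The following Python sketch estimates the number of vVols for one VM; the `count_vvols` function is hypothetical, and it assumes that the swap vVol exists only while the VM is powered on and that each snapshot adds one vVol per virtual disk:

```python
def count_vvols(disks: int, powered_on: bool = True,
                snapshots: int = 0, memory_snapshots: int = 0) -> int:
    """Estimate the number of vVols backing one VM.

    Assumptions (not confirmed by the source): the swap vVol exists only
    while the VM is powered on, and every snapshot adds one vVol per disk.
    """
    config = 1                          # configuration vVol (VMX file)
    data = disks                        # one vVol per virtual disk
    swap = 1 if powered_on else 0       # swap vVol, if required
    snapshot_disks = snapshots * disks  # one vVol per disk per snapshot
    return config + data + swap + snapshot_disks + memory_snapshots


# A running VM with 2 disks and 1 snapshot that includes memory.
print(count_vvols(disks=2, snapshots=1, memory_snapshots=1))  # 7

# A powered-off VM with a single disk and no snapshots.
print(count_vvols(disks=1, powered_on=False))  # 2
```

Even modest VMs map to several vVols each, so an environment with thousands of VMs quickly reaches tens of thousands of objects on the array.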

An ESXi host can connect to a limited number of LUNs (256 in vSphere 6.0). For VMware vVols to get past this limit, a special logical device called the IO Demultiplexer (IO Demux) is used in the storage array. The device is a logical input/output channel providing access for all VMs that must access their virtual disks on a storage array. With VMware vVols, you abstract from block-based sharing protocols such as iSCSI or FC (Fibre Channel) and file-based protocols such as NFS. A unified storage architecture is provided for all supported protocols.

Let’s explore other features of vVols.

- Free space becomes available automatically after deleting files on VMs without the need to run the UNMAP command (Thin Provisioning Block Space Reclamation).

- Capacity Pools are created on SAN to define the level of accessible storage capacity in pools and the policies restricting low-level operations in the pool.

- Profiles for QoS (Quality of Service) are created to satisfy VMs storage performance requirements. Set performance parameters and data policies to be applied on the vVol level or the Capacity Pool level.

vVols is a successor of VAAI. If the operation of vVols is not possible, the system is switched to use VAAI primitives. VAAI (vStorage API for Array Integration) is intended to offload some storage operations from ESXi hosts to the storage array systems.

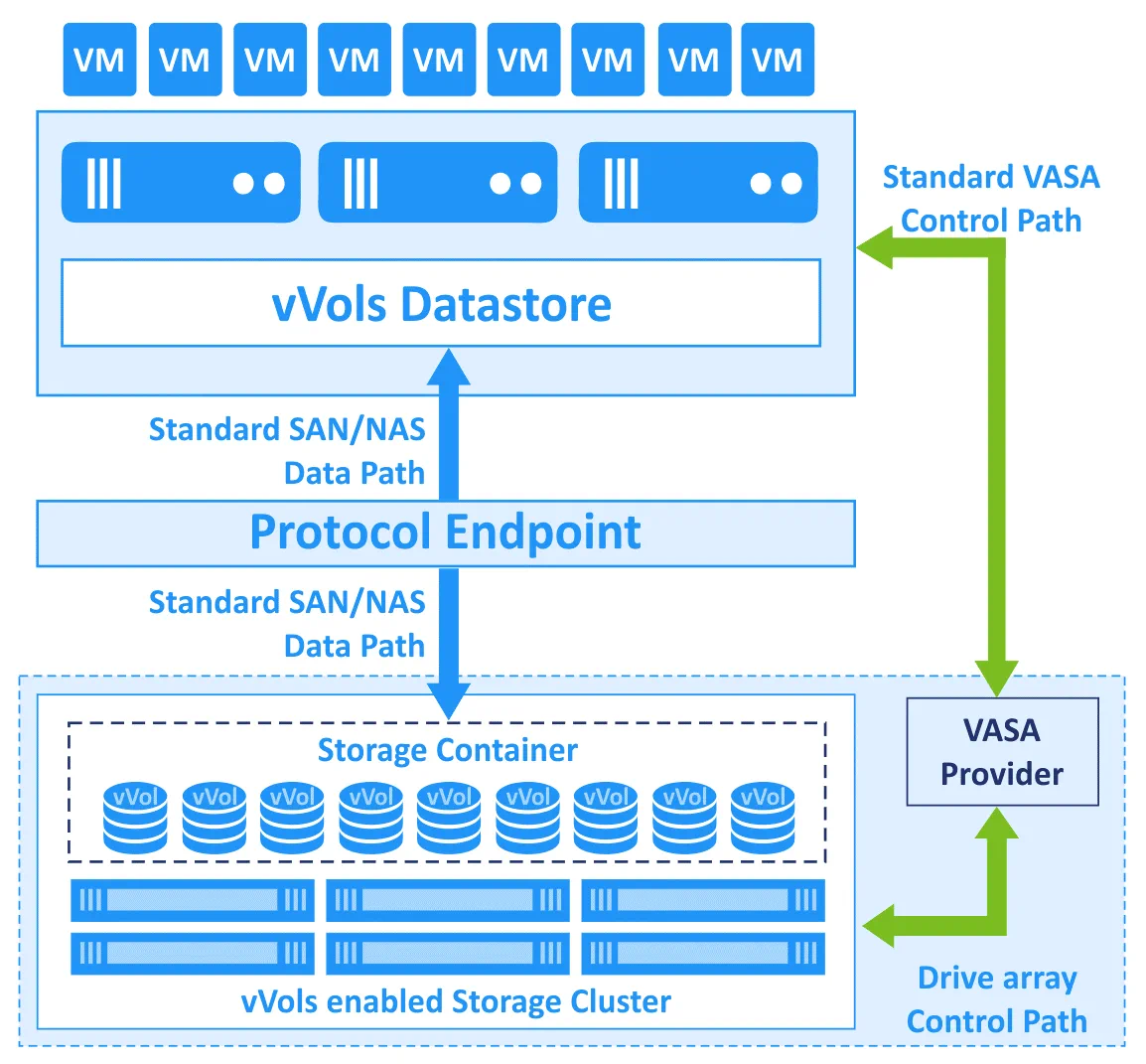

Storage Provider (VASA provider) is a software layer provided by storage array hardware manufacturers to ensure the interaction of vSphere components with storage hardware that contains VMware vVols as Storage Containers. A Storage Provider can be implemented as a storage array (hardware) component or as a virtual appliance (a VM running on an ESXi host in vSphere). ESXi hosts and vCenter connect to a Storage Provider.

VASA (vSphere APIs for Storage Awareness) is a set of APIs (application programming interfaces) that makes VMware vSphere aware of storage capabilities. VASA is used to integrate storage arrays with vCenter and permit management from vCenter.

Storage Container is a logical entity to group VMware vVols by types to perform administration. A storage container is created on storage array hardware. The container can be resized. One storage container can be accessed via multiple protocol endpoints simultaneously.

Protocol Endpoint is a logical I/O device (proxy) used for interaction of VMs running on ESXi hosts with VMware virtual volumes (see the image with NxM connections and loads above). All VMs are connected to the protocol endpoint to access their virtual volumes. When using vVols with a single protocol endpoint, each ESXi host needs only a connection to that endpoint, instead of the NxM connections required by traditional VMDK disks on VMFS datastores. ESXi hosts don’t have direct access to vVols on the storage side.

The queue depth for protocol endpoints is 128, which is higher than that for LUN (32). An unlimited number of protocol endpoints can be created, but there is no need to do so because one PE can serve thousands of VMware vVols. Virtual machine vVols can be linked to the protocol endpoint in VMware vCenter. iSCSI, NFS v3, FC, and FCoE are the supported protocols that are the industry standard for SAN and NAS devices. The endpoint receives SCSI or NFS commands. The protocol endpoint can be considered as an analog of the mount point in Linux.
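
The difference in connection counts can be estimated with simple arithmetic. The Python sketch below is a simplified model, not a measurement: it assumes one logical connection per host and LUN pair in the traditional scheme and one per host and protocol endpoint pair with vVols, and it ignores multipathing. The function names and the sample numbers are hypothetical.

```python
def traditional_paths(hosts: int, luns: int) -> int:
    """Every host connects to every LUN: N x M connections."""
    return hosts * luns


def vvol_paths(hosts: int, protocol_endpoints: int = 1) -> int:
    """Every host connects only to the protocol endpoints."""
    return hosts * protocol_endpoints


hosts, luns = 16, 200
print(traditional_paths(hosts, luns))  # 3200
print(vvol_paths(hosts))               # 16
```

Under these assumptions, the number of connections no longer grows with the number of volumes on the array, only with the number of hosts and protocol endpoints.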

Requirements and Limitations

Before starting to use VMware vVols, you should read system requirements and limitations.

- VMware virtual volumes are available in all editions of VMware vSphere since vSphere 6.0 (for VMware vSphere 7.0 use Enterprise Plus and Standard editions). VMware ESXi 6.0 and vCenter 6.0 or higher are required. As VMware vVols require vCenter, vVols cannot be used on a standalone ESXi host. Raw Device Mapping (RDM) is not supported.

- Server hardware must support vSphere 6.0 or higher. Check the VMware Compatibility Guide.

- A storage array system must support VMware vVols via VASA (vSphere APIs for Storage Awareness). A storage array may have a vendor-specific implementation of vVols requiring an upgrade of firmware on the storage array system.

- Time must be synchronized on ESXi hosts and all storage components. Using NTP (Network Time Protocol) for time synchronization is recommended.

The Workflow (Overview)

Let’s review the main steps for configuring VMware vVols. A system administrator should follow the workflow explained below to use VMware vSphere virtual volumes.

Synchronize time on all devices (ESXi hosts, vCenter Servers, storage array systems).

Register a storage provider (VASA Provider) in vCenter by using VMware vSphere Client. You can use a vSphere Web Client plug-in or the storage system user interface to register a storage provider. Once the storage provider is registered, a secure SSL connection is established between vCenter and the storage provider.

Create a virtual datastore for VMware vVols. A vVol datastore is created on a storage container connected to a storage provider. Once a datastore is created, you cannot extend or upgrade it because the storage array system fully controls the vVol datastore. After creating a vVol datastore, mount it to additional ESXi hosts.

Map storage capabilities to VM storage policies (storage capabilities must be configured on a storage system before configuring VM storage policies). Create storage policies in vCenter to meet storage requirements for VMs, and provide the appropriate level of service, including storage performance, redundancy, etc.

You should choose a storage provider (for example, NetApp, Dell EMC, or HP 3PAR with support of vVols) and select the needed features for a policy.

Create a virtual machine, and, at the storage configuration step, select a VM storage policy. A suitable storage array is chosen automatically, and vVols are created to store VM data.

You can migrate virtual machine disks from VMFS, NFS, and vSAN datastores to vVols datastores with Storage vMotion. You can manage VMware vVols in vSphere CLI.

Before switching from traditional VMFS datastores to vVols, make sure that your backup software supports VMware vVols and can back up virtual machines stored on vVols. VMware Virtual Volumes (vVols) supports backup software that uses vSphere APIs for Data Protection (VADP). NAKIVO Backup & Replication supports VMware vVols to perform VMware data backup. If you deploy a VASA provider as a virtual appliance (that is, a VMware VM on an ESXi host), don’t forget to back up the VASA provider. Without the VASA provider, the vVols structure can be lost. In most cases, you can use disaster recovery or high availability features to protect a VASA provider that runs as a storage vendor’s virtual appliance.

Conclusion

VMware Virtual Volumes (vVols) is a feature that provides a new approach to provisioning storage resources for virtual machines. This approach offers more granularity and a higher automation level, which are useful for large and extra-large virtual environments with thousands of virtual machines. The concept of vVols is that the basic management unit is a VM, not a datastore or LUN.

Creating policies that define the characteristics of storage arrays and selecting the needed policy to provision the right type of storage for a VM allows administrators to avoid unintentional errors and use hardware resources more rationally. With VMware APIs (VASA, VAAI), storage operations like snapshots, replication, cloning, and encryption are offloaded from ESXi servers to storage array systems; however, storage management for VMs is performed in vCenter.

Protocol endpoints provide the unified interface that helps reduce the number of connections and eliminates the need to select between block-based and file-based shared storage when you provision storage to store VM files. VMware vVols takes you one step closer to building a software-defined data center.