What Is VMFS? VMware File System Overview

VMware vSphere is the most commonly deployed virtualization platform for data centers. It provides a wide range of enterprise features to run virtual machines (VMs). To provide reliable, effective storage that is compatible with vSphere features, VMware created its own file system called VMFS.

This blog post covers VMware VMFS features, how they work with other vSphere features, and the advantages of VMFS for storing VM files and running VMs.

What Is VMFS?

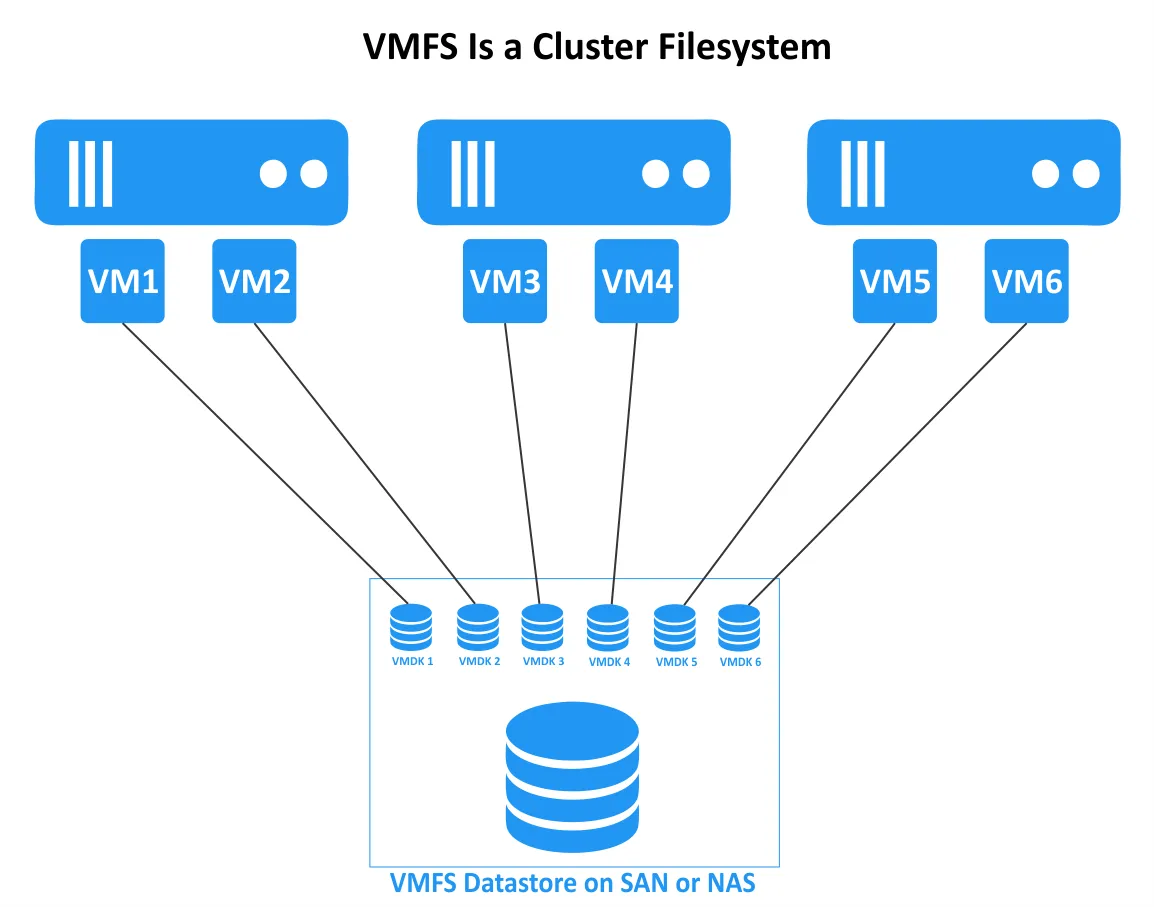

Virtual Machine File System (VMFS) is a cluster file system optimized for storing virtual machine files, including virtual disks, in VMware vSphere. It was created to make storage virtualization for VMs more efficient. VMFS is a high-performance, reliable, proprietary file system designed to run VMs in scalable environments, from small to large and extra-large data centers. VMFS also functions as a volume manager and allows you to store VM files in logical containers called VMFS datastores.

The VMFS file system can be created on SCSI-based disks (directly attached SCSI and SAS disks) and on block storage accessed via iSCSI, Fibre Channel (FC), and Fibre Channel over Ethernet (FCoE). VMFS operates on disks attached to ESXi servers but not on computers running VMware Workstation or VMware Player.

VMFS Versions

VMware VMFS has evolved significantly since the release of version one. Here is a short overview of VMFS versions to track the main changes and features.

- VMFS 1 was used for ESX Server 1.x. This version of VMware VMFS didn’t support clustering features and was used only on one server at a time. Concurrent access by multiple servers was not supported.

- VMFS 2 was used on ESX Server 2.x and sometimes on ESX 3.x. VMFS 2 didn’t have a directory structure.

- VMFS 3 was used on ESX/ESXi 3.x and ESX/ESXi 4.x. Support for a directory structure was added in this version. The maximum file system size is 50 TB, and the maximum LUN (logical unit) size is 2 TB. ESXi 7.0 doesn't support VMFS 3.

- VMFS 5 is used starting from VMware vSphere 5.x. The maximum volume (file system) size was increased to 64 TB. Starting with ESXi 5.5, the maximum VMDK file size on VMFS 5 is 62 TB; ESXi 5.0 and 5.1 support VMDK virtual disks of up to 2 TB. Support for the GPT partition layout was added, so both GPT and MBR are supported (previous VMFS versions support only MBR).

- VMFS 6 was released in vSphere 6.5 and is used in vSphere 6.7, vSphere 7.0, and newer versions such as vSphere 8.

VMFS Features

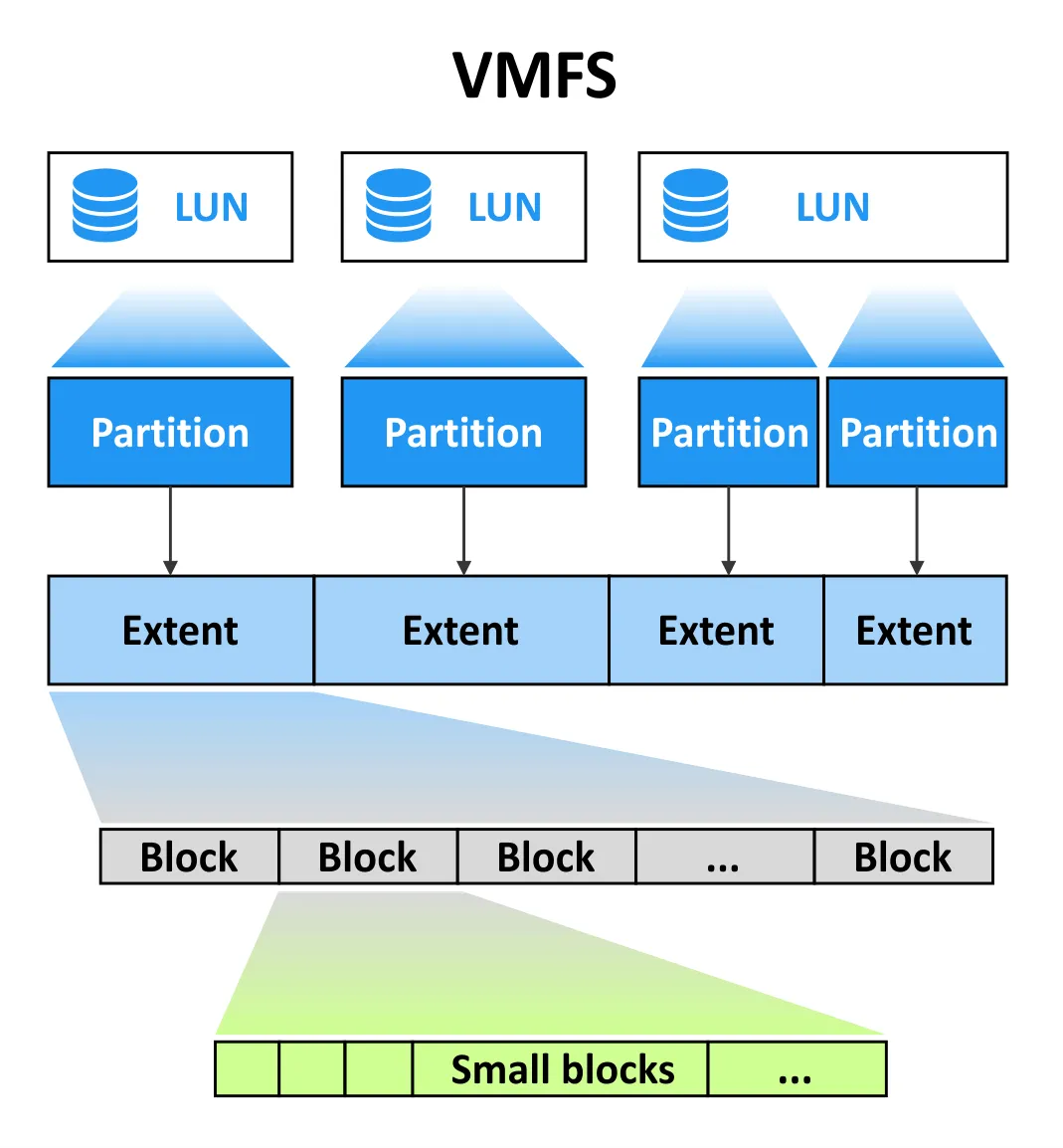

VMware VMFS is optimized for storing big files because VMDK virtual disks typically consume a large amount of storage space. A VMFS datastore is a logical container using the VMFS file system to store files on a block-based storage device or LUN. A datastore runs on top of a volume. A VMFS volume can be created by using one or multiple extents. Extents rely on the underlying partitions.

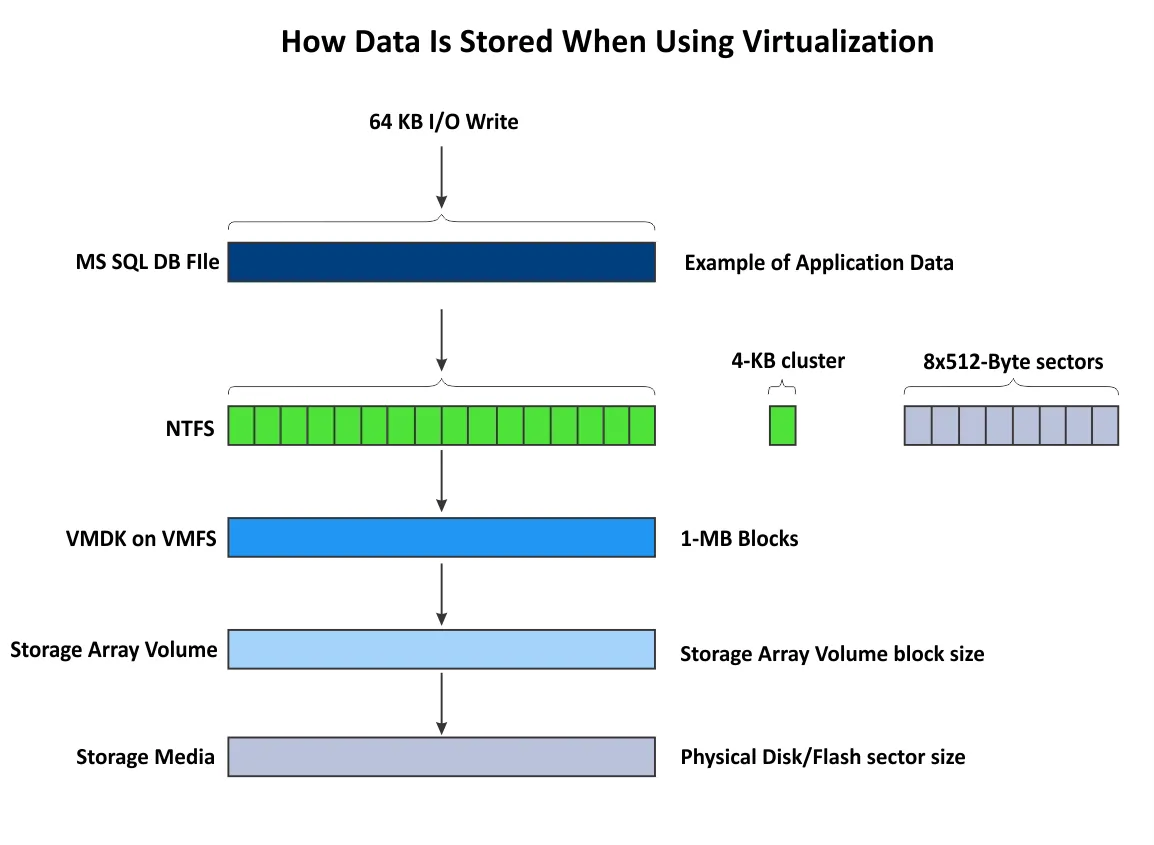

VMware VMFS block size

VMFS 5 and VMFS 6 use a 1-MB block size. The block size has an impact on the maximum file size and defines how much space the file occupies. You cannot change the block size for VMFS 5 and VMFS 6.

VMware utilizes sub-block allocation for small directories and files with VMFS 6 and VMFS 5. Sub-blocks help save storage space when files smaller than 1 MB are stored so that they don’t occupy the entire 1-MB block. The size of a sub-block is 64 KB for VMFS 6 and 8 KB for VMFS 5.
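The Python sketch below shows how sub-blocks reduce the space consumed by small files, using the 8-KB (VMFS 5) and 64-KB (VMFS 6) sub-block sizes given above. It is a simplified model, not the real VMFS allocator. It assumes that a file smaller than 1 MB is rounded up to whole sub-blocks and that larger files are rounded up to whole 1-MB blocks. The function names are hypothetical.

```python
# Simplified model of VMFS space allocation (not the real VMFS allocator).
# Assumption: files smaller than one 1-MB file block are rounded up to whole
# sub-blocks; larger files are rounded up to whole 1-MB file blocks.

KB = 1024
MB = 1024 * KB

FILE_BLOCK = 1 * MB
SUB_BLOCK = {"vmfs5": 8 * KB, "vmfs6": 64 * KB}


def round_up(size: int, unit: int) -> int:
    """Round size up to the next multiple of unit (ceiling division)."""
    return -(-size // unit) * unit


def allocated_bytes(file_size: int, version: str) -> int:
    """Return the space a file of file_size bytes occupies in this model."""
    if file_size < FILE_BLOCK:
        return round_up(file_size, SUB_BLOCK[version])
    return round_up(file_size, FILE_BLOCK)


if __name__ == "__main__":
    for size in (5 * KB, 100 * KB, 3 * MB + 1):
        print(size, allocated_bytes(size, "vmfs5"), allocated_bytes(size, "vmfs6"))
```

In this model, a 5-KB file occupies 8 KB on VMFS 5 and 64 KB on VMFS 6 rather than a full 1-MB block.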

VMFS 6 introduces the concept of small file blocks and large file blocks. Small file blocks (SFBs) are 1 MB in size and are not the same as the sub-blocks described above. VMFS 6 can also use large file blocks (LFBs), which are 512 MB in size, to improve performance when creating large files. LFBs are primarily used for thick provisioned disks and swap files, and the portions of a thick provisioned disk that don't fill a whole LFB are allocated on SFBs. Thin provisioned disks use SFBs.
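To make the LFB/SFB split concrete, here is a small sketch that computes how a thick provisioned disk could be laid out with 512-MB LFBs and 1-MB SFBs. It assumes the allocator uses as many whole LFBs as fit and places the remainder on SFBs. This is an illustrative model, and the function name is hypothetical.

```python
# Illustrative split of a thick provisioned disk into VMFS 6 large file
# blocks (LFBs) and small file blocks (SFBs). Simplified assumption: use as
# many whole LFBs as fit, then place the remainder on SFBs.

MB = 1024 * 1024
LFB_SIZE = 512 * MB  # large file block
SFB_SIZE = 1 * MB    # small file block


def thick_disk_layout(disk_size: int) -> tuple[int, int]:
    """Return (number of LFBs, number of SFBs) for a thick disk of disk_size bytes."""
    lfbs, remainder = divmod(disk_size, LFB_SIZE)
    sfbs = -(-remainder // SFB_SIZE)  # ceiling: a partial MB still needs a whole SFB
    return lfbs, sfbs


if __name__ == "__main__":
    # A 1.25-GB thick disk: two 512-MB LFBs plus 256 one-MB SFBs
    print(thick_disk_layout(1280 * MB))
```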

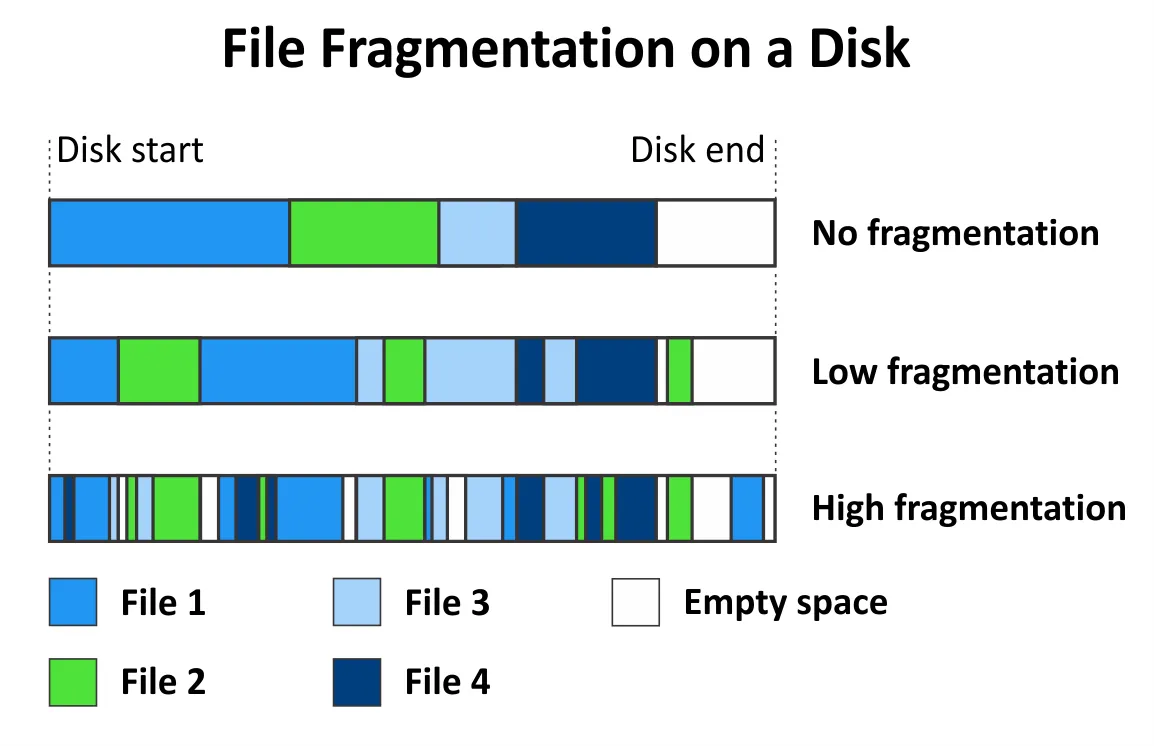

File fragmentation

Fragmentation occurs when the blocks of one file are scattered across a volume with gaps between them. The gaps can be empty or occupied by blocks that belong to other files. Fragmented files degrade disk read and write performance. Restoring performance requires defragmentation, which reorganizes the data on a disk so that the blocks used by each file are stored contiguously, one after another. This allows the heads of an HDD to read and write the blocks without extra head movements.

VMware VMFS is not prone to significant file fragmentation, and fragmentation has little effect on VMFS performance because VMFS uses large blocks (1 MB, as mentioned above). For comparison, Windows uses 4-KB blocks for the NTFS file system, which should be defragmented periodically when located on hard disk drives. Most files stored on a VMFS volume are large: virtual disk files, swap files, and installation image files. Gaps between files are also large, so when a hard disk drive seeks across the blocks used to store a file, the impact is negligible. In fact, a VMFS volume cannot be defragmented, and there is no need to do so.
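A quick calculation shows why block size matters. In the worst case, every block of a file is stored separately, so the number of blocks is an upper bound on the number of fragments, and therefore on the number of seeks. The sketch compares a 10-GB file (an example size) stored in 4-KB NTFS clusters and in 1-MB VMFS blocks.

```python
# Upper bound on fragments: in the worst case every block of a file is
# stored separately, so the block count bounds the number of seeks.

KB = 1024
MB = 1024 * KB
GB = 1024 * MB


def block_count(file_size: int, block_size: int) -> int:
    """Number of blocks needed to store file_size bytes (ceiling division)."""
    return -(-file_size // block_size)


if __name__ == "__main__":
    disk = 10 * GB  # a 10-GB virtual disk file
    print("NTFS, 4-KB clusters:", block_count(disk, 4 * KB))  # 2621440
    print("VMFS, 1-MB blocks:  ", block_count(disk, 1 * MB))  # 10240
```

The same file has 256 times fewer blocks on VMFS, so even a badly fragmented VMDK requires far fewer seeks.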

Don't defragment virtual disks from a guest operating system (OS), because it doesn't help. Storage performance for a VM depends on the input/output (I/O) intensity on the physical storage array, where the virtual disks (VMDK files) of multiple VMs are stored and place different I/O loads on the array. Moreover, if you defragment partitions located on thin provisioned disks from a guest OS, blocks are moved around, the storage I/O load increases, and the thin disks grow.

Defragmenting linked clone VMs and VMs that have snapshots makes their redo logs grow, so they occupy more storage space. If you back up VMware VMs with a solution that relies on Changed Block Tracking, defragmentation also increases the number of changed blocks, so more data must be backed up and backups take longer. Guest OS defragmentation also has a negative impact when you run Storage vMotion to move a VM between datastores.

Datastore extents

A VMFS volume resides on one or more extents. Each extent occupies a partition, and the partition in turn is located on the underlying LUN. Extents provide additional scalability for VMFS volumes. When you create a VMFS volume, you use at least one extent. You can add more extents to an existing VMFS volume to expand the volume. Extents are different from RAID 0 striping.

- If one of the attached extents goes offline, you can identify which extent of the volume is offline by running the following command (iscsi_datastore is the datastore name in this example):

vmkfstools -Ph /vmfs/volumes/iscsi_datastore/

The output displays the SCSI identifier (NAA ID) of the problematic LUN.

- If one of the extents fails, the VMFS volume can remain online. But if a virtual disk of a VM has at least one block on the failed extent, the VM virtual disk becomes inaccessible.

- If the first extent of a VMFS volume goes offline, the entire VMFS datastore becomes inactive because address resolution resources are located on the first extent. Therefore, use extents to create or expand VMFS volumes only when there is no other way to increase the volume size.
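The failure rules above can be expressed as a small model. The head extent must be online for the volume to be usable, and a file is inaccessible if any of its blocks is on an offline extent. The class, the NAA-style names, and the file-to-extent mapping below are hypothetical illustrations, not real VMFS structures.

```python
# Hypothetical model of a multi-extent VMFS volume: which files become
# inaccessible when an extent goes offline. Names and mappings are
# illustrative, not real VMFS structures.
from dataclasses import dataclass, field


@dataclass
class SpannedVolume:
    extents: list[str]  # extents[0] is the first (head) extent
    file_extents: dict[str, set[str]] = field(default_factory=dict)  # file -> extents with its blocks
    offline: set[str] = field(default_factory=set)

    def volume_online(self) -> bool:
        # Address resolution resources live on the first extent.
        return self.extents[0] not in self.offline

    def file_accessible(self, name: str) -> bool:
        if not self.volume_online():
            return False
        # A file is lost if any of its blocks sits on an offline extent.
        return not (self.file_extents[name] & self.offline)


if __name__ == "__main__":
    vol = SpannedVolume(
        extents=["naa.A", "naa.B"],
        file_extents={"vm1-flat.vmdk": {"naa.A"}, "vm2-flat.vmdk": {"naa.A", "naa.B"}},
    )
    vol.offline.add("naa.B")
    print(vol.file_accessible("vm1-flat.vmdk"), vol.file_accessible("vm2-flat.vmdk"))  # True False
    vol.offline = {"naa.A"}
    print(vol.file_accessible("vm1-flat.vmdk"))  # False: the head extent is offline
```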

Regularly back up your VMware vSphere VMs to protect VM data and avoid issues caused by VMFS volumes with multiple extents storing VM files.

Journal logging

VMFS uses an on-disk distributed journal to update file system metadata. When a VMFS file system is created, VMFS allocates storage space for journal data. The journal tracks changes that have not yet been committed to the file system.

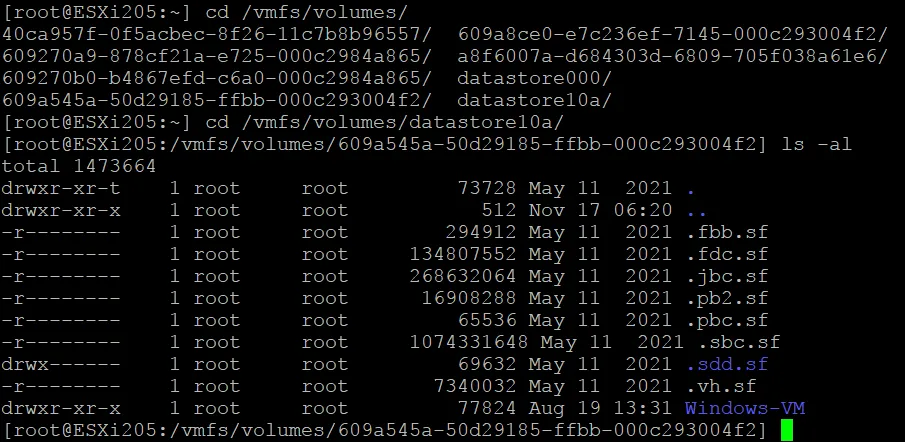

Journaling changes to file system metadata makes it more likely that the file system can be recovered to a consistent, up-to-date state after an unexpected shutdown or crash. Journal logging makes it possible to replay the changes made since the last successful commit and reconstruct the VMFS file system metadata. As a result, a journaling file system doesn't require a full file system check after a failure to verify consistency, because checking the journal is enough. The .sf files in the root of a VMFS volume store VMFS file system metadata. Each ESXi host connected to the VMFS datastore can access this metadata to get the status of each object on the datastore.
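The replay idea can be illustrated with a generic write-ahead (redo) journal. Changes are logged first, then a commit record is written, and only then is the metadata updated in place. After a crash, only committed transactions are reapplied. This is a sketch of the journaling technique under simplified assumptions, not the VMFS on-disk journal format. The class, method, and key names are hypothetical.

```python
# Generic write-ahead (redo) journal for metadata updates. This shows the
# journaling technique only; it is not the VMFS on-disk journal format.
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class JournaledMetadata:
    metadata: dict[str, int] = field(default_factory=dict)  # metadata "on disk"
    journal: list[tuple[int, str, int]] = field(default_factory=list)  # (txn_id, key, value)
    committed: set[int] = field(default_factory=set)  # txn ids with a commit record

    def write_transaction(self, txn_id: int, updates: dict[str, int],
                          crash: Optional[str] = None) -> None:
        # 1. Log the intended changes before touching the metadata.
        for key, value in updates.items():
            self.journal.append((txn_id, key, value))
        if crash == "before_commit":
            return  # no commit record: replay will ignore these entries
        # 2. Write the commit record.
        self.committed.add(txn_id)
        if crash == "after_commit":
            return  # committed in the journal, metadata not yet updated in place
        # 3. Apply the changes in place.
        self.metadata.update(updates)

    def replay(self) -> None:
        # After a crash, reapply committed changes in log order instead of
        # checking the whole file system.
        for txn_id, key, value in self.journal:
            if txn_id in self.committed:
                self.metadata[key] = value


if __name__ == "__main__":
    fs = JournaledMetadata()
    fs.write_transaction(1, {"vm1.vmdk:size": 10})
    fs.write_transaction(2, {"vm1.vmdk:size": 20}, crash="after_commit")
    fs.write_transaction(3, {"vm2.vmdk:size": 5}, crash="before_commit")
    fs.replay()
    print(fs.metadata)  # {'vm1.vmdk:size': 20}
```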

VMFS metadata contains file system descriptors: block size, volume capacity, number of extents, volume label, VMFS version, and VMFS UUID. VMFS metadata can be helpful for VMFS recovery.

Directory structure

When a VM is created, all VM files, including VMDK virtual disk files, are placed in a single directory on a datastore, and the directory name is identical to the VM name. If you need to store a particular VMDK file in another location (for example, on another VMFS datastore), you can copy the VMDK file manually and then attach the virtual disk in the VM settings. This structured layout simplifies backup and disaster recovery: to back up a VM, you copy the contents of its directory, which lets you recover the VM if you lose data on the original.

Thin provisioning

Thin provisioning is a VMFS feature that optimizes storage utilization and helps save storage space. You can enable thin provisioning at the virtual disk level (for a particular virtual disk of a VM). A thin provisioned virtual disk grows dynamically as data is written to it. The advantage of thin disks is that they consume only as much storage space as they need at any given moment.

For example, suppose you create a thin provisioned virtual disk with a provisioned size of 50 GB, but only 10 GB of data is written to it. In this case, the virtual disk file (*-flat.vmdk) occupies 10 GB on the datastore. The guest OS sees a disk with a maximum size of 50 GB and reports 10 GB of used space.
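The example above can be modeled as a disk whose blocks are allocated on the datastore only when the guest first writes to them. This is a minimal sketch that assumes a 1-MB allocation unit. The ThinDisk class is hypothetical and does not represent how ESXi implements thin disks.

```python
# Minimal model of a thin provisioned virtual disk: datastore blocks are
# allocated only when the guest first writes to them. The 1-MB allocation
# unit is an assumption for illustration.

MB = 1024 * 1024
GB = 1024 * MB
BLOCK = 1 * MB


class ThinDisk:
    def __init__(self, provisioned_size: int):
        self.provisioned_size = provisioned_size  # size the guest OS sees
        self.allocated = set()                    # indexes of blocks backed by datastore space

    def write(self, offset: int, length: int) -> None:
        if offset < 0 or offset + length > self.provisioned_size:
            raise ValueError("write outside the virtual disk")
        first = offset // BLOCK
        last = (offset + length - 1) // BLOCK
        self.allocated.update(range(first, last + 1))  # grow on first write

    def used_on_datastore(self) -> int:
        return len(self.allocated) * BLOCK


if __name__ == "__main__":
    disk = ThinDisk(50 * GB)
    disk.write(0, 10 * GB)  # the guest writes 10 GB of data
    print(disk.provisioned_size // GB, disk.used_on_datastore() // GB)  # 50 10
```

Rewriting blocks that are already allocated does not grow the disk. Only writes to new regions consume more datastore space.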

You can confirm that thin provisioning depends on the VMFS file system by copying a thin provisioned virtual disk (the .vmdk and -flat.vmdk files) to a local disk formatted with NTFS or ext4. After copying, the virtual disk file size equals the full provisioned size, not the actual size of the thin provisioned disk on the VMFS datastore.

Note: VMware vSphere also supports creating datastores, including shared datastores on the NFS file system, with support for thin provisioning.

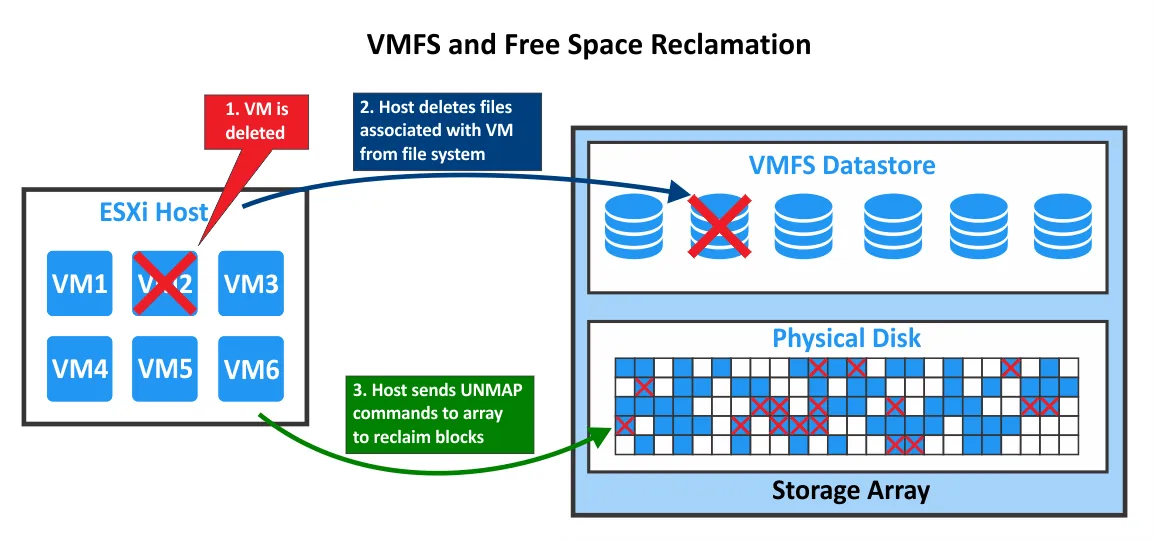

Free space reclamation

Automatic space reclamation (automatic SCSI UNMAP) in VMFS 6 and guest operating systems allows storage arrays to reclaim unmapped or deleted disk blocks from a VMFS datastore. In VMware vSphere 6.0 with VMFS 5, space reclamation had to be performed manually with the esxcli storage vmfs unmap command.

Space reclamation solves a common problem: the underlying storage doesn't know that a file has been deleted in the file system, so the corresponding physical storage space (blocks on a disk) is not freed. This feature is especially useful for thin provisioned disks. When a guest OS deletes files inside a thin virtual disk, the amount of used space on the disk is reduced, and the file system no longer uses the corresponding blocks. The file system then tells the storage array that these blocks are free, the storage array deallocates them, and they can be reused to write new data.

Let's have a closer look at how data is deleted in storage when using virtualization and virtual machines. Imagine a VM whose guest OS uses a virtual disk with a file system such as NTFS or ext4. The thin provisioned virtual disk is stored on a VMFS datastore, and the VMFS file system uses the underlying partition and LUN located on a storage array.

- A file is deleted in the guest OS that operates with a file system (NTFS, for instance) on a virtual disk.

- The guest OS initiates UNMAP.

- The virtual disk on the VMFS datastore shrinks (the size of the virtual disk is reduced).

- ESXi initiates UNMAP to the physical storage array.
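The chain of steps above can be sketched as three layers, each reduced to a set of allocated block numbers. When the guest unmaps blocks, the thin VMDK drops them and the corresponding array blocks are deallocated. This is a conceptual illustration with hypothetical classes, not how ESXi or a storage array is implemented.

```python
# Conceptual model of the UNMAP chain for a thin disk. Each layer is reduced
# to a set of allocated block numbers; an illustration, not ESXi internals.


class StorageArray:
    def __init__(self):
        self.allocated = set()  # physical blocks holding data

    def unmap(self, blocks):
        self.allocated -= set(blocks)  # the array deallocates the blocks


class ThinVmdk:
    def __init__(self, array):
        self.array = array
        self.block_map = {}  # guest block -> array block
        self.next_free = 0

    def write(self, guest_block):
        if guest_block not in self.block_map:  # the thin disk grows on first write
            self.block_map[guest_block] = self.next_free
            self.array.allocated.add(self.next_free)
            self.next_free += 1

    def guest_unmap(self, guest_blocks):
        # The guest issues UNMAP, the VMDK shrinks, and ESXi forwards UNMAP
        # for the freed blocks to the storage array.
        freed = [self.block_map.pop(b) for b in guest_blocks if b in self.block_map]
        self.array.unmap(freed)

    def size_blocks(self):
        return len(self.block_map)


if __name__ == "__main__":
    array = StorageArray()
    disk = ThinVmdk(array)
    for block in range(8):  # the guest writes a file occupying 8 blocks
        disk.write(block)
    disk.guest_unmap(range(4, 8))  # the guest deletes half of the data
    print(disk.size_blocks(), len(array.allocated))  # 4 4
```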

ESXi issues UNMAP for an attached VMFS datastore in several cases: when a file (a VMDK file, snapshot file, swap file, ISO image, etc.) is deleted from or moved off the datastore, when a partition is shrunk from a guest OS, and when a file inside a virtual disk is reduced in size.

Automatic UNMAP for VMware VMFS 6 starting from ESXi 6.5 is asynchronous. Free space reclamation doesn’t happen immediately, but the space is eventually reclaimed without user interaction.

Asynchronous UNMAP has some advantages:

- The hardware storage array is not suddenly overloaded, because UNMAP requests are sent at a constant rate.

- Regions that must be freed up are batched and unmapped together.

- There is no negative impact on input/output performance and other operations.
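The first two advantages (batching and a constant send rate) can be illustrated with a small scheduler. Freed regions are queued, overlapping or adjacent regions are merged, and the merged regions are sent in fixed-size batches with a pause between batches. The batch size and interval are hypothetical parameters, not ESXi defaults.

```python
# Sketch of asynchronous UNMAP batching: freed (start, length) regions are
# merged and sent in fixed-size batches at a steady pace. batch_size and
# interval_s are hypothetical parameters, not ESXi defaults.
import time


def coalesce(regions):
    """Merge overlapping or adjacent (start, length) regions."""
    merged = []
    for start, length in sorted(regions):
        if merged and start <= merged[-1][0] + merged[-1][1]:
            prev_start, prev_len = merged[-1]
            end = max(prev_start + prev_len, start + length)
            merged[-1] = (prev_start, end - prev_start)
        else:
            merged.append((start, length))
    return merged


def send_unmaps(regions, send, batch_size=2, interval_s=0.0):
    """Send coalesced regions in batches, pausing between batches to keep a constant rate."""
    pending = coalesce(regions)
    for i in range(0, len(pending), batch_size):
        send(pending[i:i + batch_size])
        if i + batch_size < len(pending):
            time.sleep(interval_s)


if __name__ == "__main__":
    freed = [(0, 4), (4, 4), (20, 2), (10, 5), (12, 1)]
    send_unmaps(freed, print)
    # [(0, 8), (10, 5)]
    # [(20, 2)]
```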

How did UNMAP work in previous ESXi versions?

- ESXi 5.0 – UNMAP is automatic and synchronous

- ESXi 5.0 Update 1 – UNMAP is performed with vmkfstools in the command line interface (CLI)

- ESXi 5.5 and ESXi 6.0 – Manual UNMAP is improved and is run with ESXCLI

- ESXi 6.0 – EnableBlockDelete allows VMFS to issue UNMAP automatically when VMDK virtual disk files shrink as a result of in-guest UNMAP.

Snapshots and sparse virtual disks

You can take VM snapshots in VMware vSphere to save the current state of a VM and its virtual disks. When you create a VM snapshot, a virtual disk snapshot file (a -delta.vmdk file) is created on the VMFS datastore. This snapshot file, called a delta disk or child disk, stores the difference between the current state of the VM and its state when the snapshot was taken.

On a VMFS datastore, the delta disk is a sparse disk that uses a copy-on-write mechanism to save storage space when new data is written after a snapshot is created. There are two sparse formats, depending on the configuration of the underlying VMFS datastore: VMFSsparse and SEsparse.

- VMFSsparse is used on VMFS 5 for virtual disks smaller than 2 TB. This format works on top of VMFS: the redo log is empty when the snapshot is taken and grows as data is written afterward.

- SEsparse is used on VMFS 5 for virtual disks larger than 2 TB and on VMFS 6 for all virtual disks. This format is based on VMFSsparse but adds a set of enhancements, such as support for space reclamation, which allows an ESXi hypervisor to UNMAP unused blocks after a guest OS deletes data or after a snapshot file is deleted.
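The copy-on-write behavior of a delta disk can be shown with a toy model. The parent disk is treated as read-only. On the first write to a grain, that grain is copied from the parent into the sparse delta and then modified, and reads of unchanged grains fall through to the parent. This is a sketch of the concept only, not the VMFSsparse or SEsparse on-disk format. The 4-byte grain is an arbitrary toy value; real grains are larger.

```python
# Toy copy-on-write delta disk: the parent is read-only; a grain is copied
# into the sparse delta on its first write. Not the VMFSsparse/SEsparse format.

GRAIN = 4  # bytes per grain in this toy model (real grains are larger)


class DeltaDisk:
    def __init__(self, parent: dict):
        self.parent = parent  # read-only base disk: grain number -> bytes
        self.grains = {}      # sparse delta: only grains changed after the snapshot

    def read(self, grain: int) -> bytes:
        if grain in self.grains:
            return bytes(self.grains[grain])
        return self.parent.get(grain, bytes(GRAIN))  # unwritten grains read as zeros

    def write(self, grain: int, offset: int, data: bytes) -> None:
        if offset < 0 or offset + len(data) > GRAIN:
            raise ValueError("write must stay within one grain in this toy model")
        if grain not in self.grains:
            # Copy-on-write: copy the parent's grain into the delta on first write.
            self.grains[grain] = bytearray(self.parent.get(grain, bytes(GRAIN)))
        self.grains[grain][offset:offset + len(data)] = data


if __name__ == "__main__":
    base = {0: b"AAAA", 1: b"BBBB"}
    snap = DeltaDisk(base)
    snap.write(1, 2, b"xy")
    print(snap.read(0), snap.read(1), base[1], len(snap.grains))
    # b'AAAA' b'BBxy' b'BBBB' 1
```

The delta grows only with the grains written after the snapshot, which is why a new snapshot file starts out small.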

Note: In ESXi 6.7 with VMFS 6, UNMAP for SEsparse disks (snapshot disks for thin provisioned disks) starts automatically once there is 2 GB of dead space (data that is deleted but not yet reclaimed) on the VMFS file system. For example, if you delete four 512-MB files from the guest OS, asynchronous UNMAP is started. You can view live UNMAP statistics in esxtop: press v to enable the VM view, press f to select the fields, and press L to display UNMAP statistics. The default threshold is 2 GB, though you can change it in the CLI. In ESXi 7.0 U3, the maximum granularity reported by VMFS is 2 GB.
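The threshold behavior described in the note can be sketched as a counter. Dead space from guest deletions accumulates, and a reclamation pass is triggered once the total reaches the threshold (2 GB by default, per the note). The class is hypothetical, and it assumes that each pass reclaims all accumulated dead space.

```python
# Sketch of threshold-triggered reclamation for SEsparse dead space.
# Hypothetical class; assumes each reclaim pass frees all accumulated dead space.

MB = 1024 * 1024
GB = 1024 * MB


class DeadSpaceTracker:
    def __init__(self, threshold: int = 2 * GB):
        self.threshold = threshold
        self.dead = 0        # deleted but not yet reclaimed
        self.unmap_runs = 0

    def on_guest_delete(self, size: int) -> bool:
        """Record freed space; return True if an UNMAP pass is triggered."""
        self.dead += size
        if self.dead >= self.threshold:
            self.unmap_runs += 1
            self.dead = 0
            return True
        return False


if __name__ == "__main__":
    tracker = DeadSpaceTracker()
    # Deleting four 512-MB files reaches the 2-GB threshold on the fourth delete.
    print([tracker.on_guest_delete(512 * MB) for _ in range(4)])  # [False, False, False, True]
```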

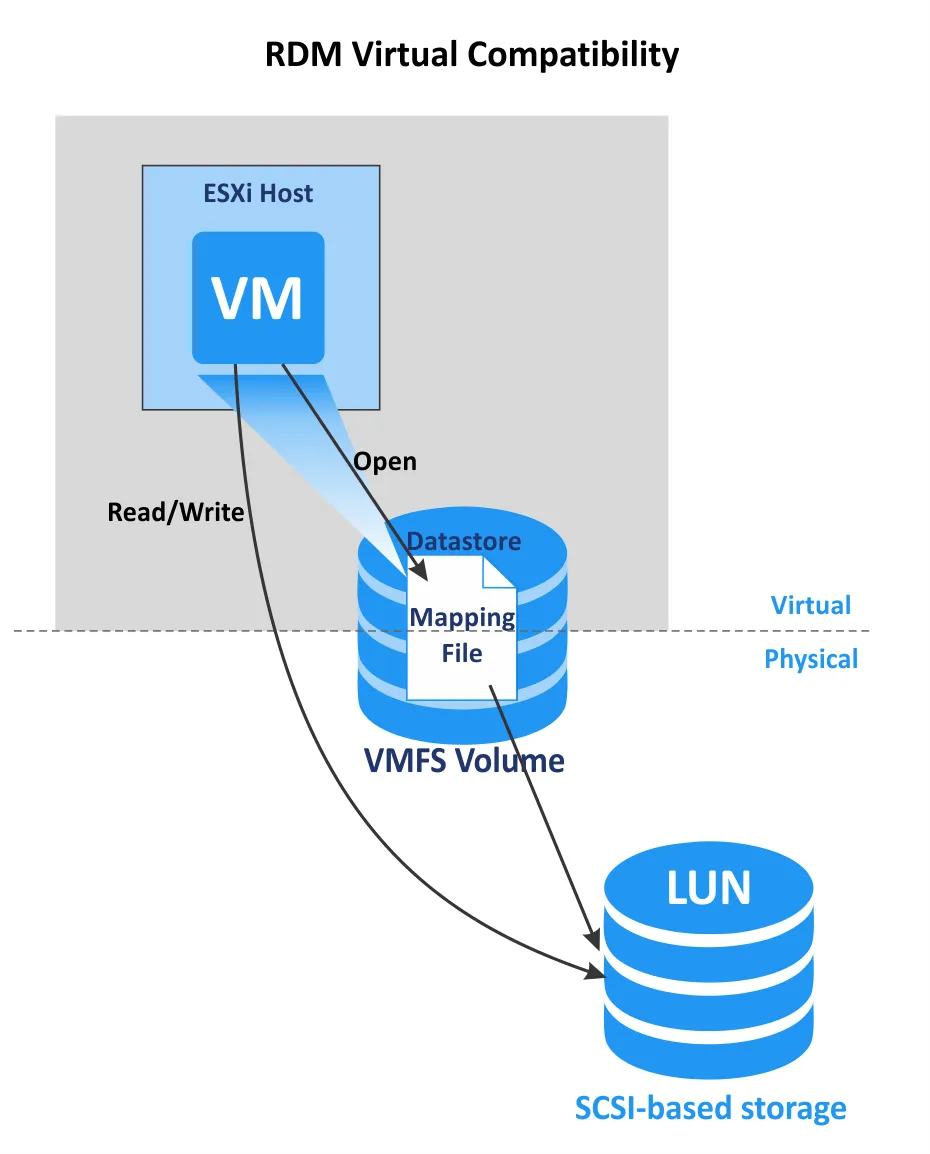

RAW Device Mapping

Integrating Raw Device Mapping (RDM) disks into the VMware VMFS structure gives you more flexibility when working with storage for VMs. There are two RDM compatibility modes in VMware vSphere.

- RDM disks in virtual compatibility mode. A VMDK mapping file (*-rdm.vmdk) is created on a VMFS datastore to map a physical LUN on the storage array to a virtual machine. Mapping physical storage to a VM with this method works as follows.

Storage management operations such as Open and other SCSI commands pass through the virtualization layer of the ESXi hypervisor, but Read and Write commands are sent directly to the storage device, bypassing the virtualization layer.

As a result, the VM works with the mapped RDM LUN much as it would with a virtual disk, and most vSphere features, such as snapshots, are available.

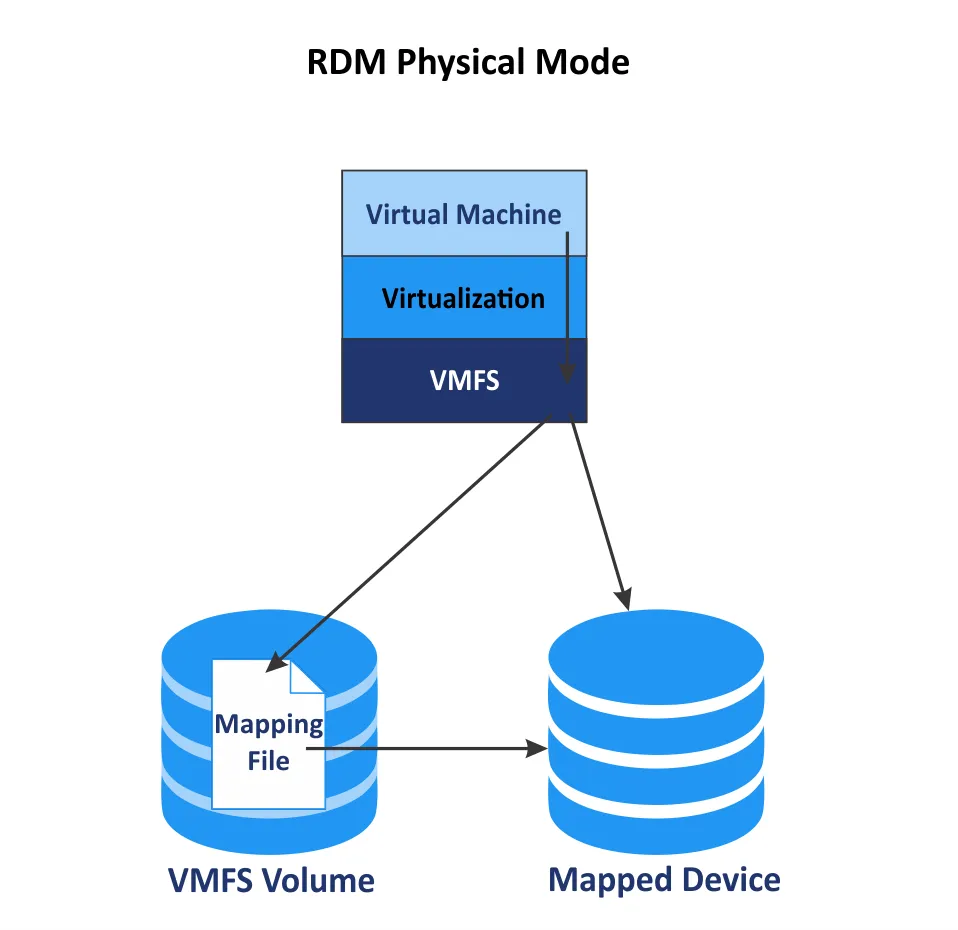

- RDM disks in physical compatibility mode. An ESXi host creates a mapping file on a VMFS datastore, but SCSI commands are passed directly to the LUN device, bypassing the hypervisor's virtualization layer (except the REPORT LUNS command). This is the less virtualized disk type, and VMware snapshots are not supported.
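The difference between the two modes comes down to which guest SCSI commands reach the LUN. The function below encodes the rules described above as a lookup. Commands are plain strings and the function name is hypothetical. It illustrates the routing rules only and is not the ESXi storage stack.

```python
# Simplified routing of guest SCSI commands for the two RDM modes, following
# the rules described above. An illustration, not the ESXi storage stack.


def route_scsi_command(command: str, mode: str) -> str:
    """Return 'device' if the command goes to the LUN, 'hypervisor' if it is virtualized."""
    if mode == "virtual":
        # Virtual compatibility mode: only reads and writes reach the device directly.
        return "device" if command in {"READ", "WRITE"} else "hypervisor"
    if mode == "physical":
        # Physical compatibility mode: everything passes through except REPORT LUNS.
        return "hypervisor" if command == "REPORT LUNS" else "device"
    raise ValueError(f"unknown RDM mode: {mode}")


if __name__ == "__main__":
    for cmd in ("READ", "INQUIRY", "REPORT LUNS"):
        print(cmd, route_scsi_command(cmd, "virtual"), route_scsi_command(cmd, "physical"))
```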

Clustering features

Clustering and concurrent access to files on a datastore is another great feature of VMware VMFS. Unlike conventional file systems, VMFS allows multiple servers to read and write data on the same file system at the same time. A locking mechanism allows multiple ESXi hosts to access VM files concurrently without data corruption. A lock is placed on each VMDK file to prevent two VMs or two ESXi hosts from writing to an open VMDK file simultaneously. VMware supports two file locking mechanisms in VMFS for shared storage.

- Atomic test and set (ATS) only is used for storage devices that support the T10-standard vStorage APIs for Array Integration (VAAI) specifications. This locking mechanism is also called hardware-assisted locking. The algorithm uses discrete locking per disk sector. By default, all new datastores formatted with VMFS 5 and VMFS 6 use ATS only, without SCSI reservations, if the underlying storage supports this locking mechanism. When you create a datastore with multiple extents and ATS enabled, vCenter filters out non-ATS storage devices.

- ATS + SCSI reservations. If ATS fails, SCSI reservations are used. Unlike ATS, SCSI reservations lock the entire storage device when there is a need for metadata protection for the appropriate operation that modifies the metadata. After this operation is finished, VMFS releases the reservation to make it possible for other operations to continue. Datastores that were upgraded from VMFS 3 continue to use the ATS+SCSI mechanism.
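ATS works like an atomic compare-and-swap on a single on-disk lock record, instead of reserving the whole device. The simulation below uses a threading.Lock to stand in for the array, which executes the SCSI COMPARE AND WRITE operation atomically. A host acquires the lock only if the record still shows it as free. The LockSector class, the "free" marker, and the host names are hypothetical.

```python
# Simulation of ATS (atomic test and set) locking. A threading.Lock stands in
# for the array executing COMPARE AND WRITE atomically on one lock sector.
import threading

FREE = "free"


class LockSector:
    def __init__(self):
        self._value = FREE
        self._atomic = threading.Lock()  # emulates the array's atomicity

    def compare_and_write(self, expected: str, new: str) -> bool:
        with self._atomic:
            if self._value != expected:
                return False  # miscompare: another host holds the lock
            self._value = new
            return True

    @property
    def owner(self) -> str:
        return self._value


def acquire(sector: LockSector, host: str) -> bool:
    return sector.compare_and_write(FREE, host)


def release(sector: LockSector, host: str) -> bool:
    return sector.compare_and_write(host, FREE)


if __name__ == "__main__":
    sector = LockSector()
    print(acquire(sector, "esxi-01"), acquire(sector, "esxi-02"))  # True False
    release(sector, "esxi-01")
    print(acquire(sector, "esxi-02"), sector.owner)  # True esxi-02
```

Only the sector holding the lock record is involved, so other hosts can keep working with the rest of the device. With SCSI reservations, the entire device is locked instead.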

VMware VMFS 6 supports sharing a VM virtual disk file (VMDK) with up to 32 ESXi hosts in vSphere.

Support for vMotion and Storage vMotion

VMware vMotion is a feature used for the live migration of VMs between ESXi hosts (CPU, RAM, and network components of VMs are migrated) without interrupting their operation. Storage vMotion is a feature used to migrate VM files, including virtual disks, from one datastore to another without downtime, even while the VM is running. The VMFS file system is one of the main elements that enable live migration, because more than one ESXi host can read and write the files of the VM being migrated.

Support for HA and DRS

Distributed Resource Scheduler (DRS), High Availability (HA), and Fault Tolerance rely on the VMFS file locking mechanism, live migration, and clustering features. When HA is enabled, a failed VM is automatically restarted on another ESXi host. When DRS is used, VMs are live-migrated to balance the cluster. You can use HA and DRS together.

Support for Storage DRS. VMFS 5 and VMFS 6 datastores can be used in the same datastore cluster, so Storage DRS can migrate VM files between them. Use homogeneous storage devices for VMware vSphere Storage DRS.

Increasing VMFS volumes

You can increase the size of a VMFS datastore while VMs that use files located on that datastore are running. The first method is to increase the size of the LUN used by the existing datastore. The LUN is expanded in the storage system (not in vSphere). Then you can extend the partition and increase the VMFS volume.

You can also increase a VMFS volume by aggregating multiple disks or LUNs. In this case, VMFS extents are added to the volume. Datastores extended across multiple disks are also called spanned datastores. The storage devices must be homogeneous. For example, if the first storage device used by a datastore is a 512n device, newly added storage devices must also be 512n devices. This feature can help you bypass the maximum LUN size limit when the maximum supported datastore size is larger than the maximum LUN size.

Example: There is a 2-TB limit for a LUN, and you need to create a VM with a 3-TB virtual disk on a single datastore. Using two extents of 2 TB each solves this issue. You must use the GPT partitioning scheme to create a partition and datastore larger than 2 TB.
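The arithmetic in the example generalizes to a ceiling division: the number of extents needed is the required capacity divided by the LUN limit, rounded up. The sketch also checks the homogeneity requirement by comparing sector formats. It ignores VMFS metadata overhead, and both function names are hypothetical.

```python
# Worked example for a spanned datastore. Ignores VMFS metadata overhead;
# function names are hypothetical.

TB = 1024 ** 4


def extents_needed(required: int, max_lun: int) -> int:
    """Minimum number of LUNs of size max_lun whose combined capacity covers required bytes."""
    return -(-required // max_lun)


def check_homogeneous(formats: list[str]) -> None:
    """Raise if the extents use different sector formats (for example, 512n and 512e)."""
    if len(set(formats)) > 1:
        raise ValueError(f"mixed sector formats: {sorted(set(formats))}")


if __name__ == "__main__":
    print(extents_needed(3 * TB, 2 * TB))  # 2
    check_homogeneous(["512n", "512n"])    # passes silently
```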

Decreasing VMFS volumes

Reducing a VMFS volume is not supported. If you want to reduce the VMFS volume size, you need to migrate all files from the VMFS volume you want to reduce to a different VMFS datastore. Then you need to delete the datastore you want to reduce and create a new VMFS volume with a smaller size. When a new smaller datastore is ready on the created volume, migrate VM files to this new datastore.

VMFS Datastore upgrade

You can upgrade VMFS 3 to VMFS 5 directly, without migrating VM files and recreating a new VMFS 5 datastore. The upgrade can be performed on the fly while VMs are running, without the need to power off or migrate VMs. After the upgrade, the VMFS 5 datastore retains the characteristics of the original VMFS 3 datastore. For example, it keeps the original VMFS 3 block size instead of the 1-MB block size and 64-KB sub-blocks instead of 8-KB sub-blocks, and MBR is preserved for partitions not larger than 2 TB.

However, upgrading VMFS 5 and older VMFS datastores directly to VMFS 6 is not supported. You need to migrate the files from the datastore you are going to upgrade to a safe location, delete the VMFS 5 datastore, create a new VMFS 6 datastore, and then copy the files back to the new VMFS 6 datastore.

If you upgrade ESXi to ESXi 6.5 or later, you can continue to use VMFS 5 datastores created before the upgrade. Existing VMFS 3 datastores remain usable on ESXi 6.5 and 6.7, but ESXi 7.0 doesn't support VMFS 3. You cannot create VMFS 3 datastores on ESXi 6.5 and later ESXi versions.


Conclusion

VMware VMFS is a reliable, scalable, and optimized file system to store VM files. VMFS supports concurrent access by multiple ESXi hosts, thin provisioning, Raw Device Mapping, VM live migration, journaling, physical disks with Advanced Format including 512e and 4Kn, the GPT partitioning scheme, VM snapshots, free space reclamation, and other useful features. Due to the 1-MB block size, the latest VMFS versions are not prone to performance degradation caused by file fragmentation. Storing virtual machine files on VMFS datastores is the recommended way to store VMs in VMware vSphere.

No matter what file system you use to store virtual machines, you need to regularly back up your data to avoid data loss in case of failures, outages or other disruptions. Consider NAKIVO Backup & Replication, a solution that allows you to protect and swiftly recover vCenter-managed and standalone ESXi workloads.